2wai (pronounced “two-way”) is a consumer-facing mobile startup that lets people create and interact with lifelike “HoloAvatars” — AI-driven digital twins or avatars that look, speak and (the company says) remember like the person they represent.

The product is presented as a social app and “the human layer for AI”: users record short videos with a phone camera, upload them into the app, and within minutes can create an avatar they can chat with in real time and in many languages.

2wai is a commercially launched, mobile-first avatar social app that packages avatar creation, TTS/voice cloning, multilingual real-time chat and an avatar marketplace/social layer into one product.

2wai markets a “HoloAvatar” — a user-created digital twin you can converse with in real time.

The app claims users can create a digital twin in about three minutes or less using only a phone camera and voice input.

Press coverage identifies actor Calum Worthy and producer Russell Geyser as among the people behind the company; the product launched publicly in 2025.

The release generated rapid controversy and criticism online — especially around creating avatars of deceased people and consent/ethics of lifelike clones.

Avatar creation workflow from smartphone video + audio (an “Avatar Studio” or “create your digital twin” flow).

Real-time conversational chat with avatars (voice chat — not just text). The site and App Store emphasize “real-time two-way conversations.”

Support for many languages (the company advertises automatic multi-language capability).

Avatars of celebrities, fictional characters and personal “digital twins” for creators/brands — i.e., a social network of avatars.

Key Features:

Capture & preprocessing

- Short video + audio capture, device-side preprocessing (face detection, basic landmark extraction, light and color normalization) before upload to reduce server work and bandwidth.

Why inferred: Most phone-based avatar creators run client-side checks and preprocessing to ensure consistent input.
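To make the capture step concrete, here is a minimal sketch of a device-side preflight check and brightness normalization. It is not 2wai's code: the grayscale `Frame` format, the function names, and the thresholds are all assumptions chosen for illustration, and face or landmark detection is left out because it would need a vision library.

```python
from statistics import mean, pstdev

# Hypothetical frame format: grayscale pixels (0-255) in row-major order.
Frame = list[list[int]]


def frame_stats(frame: Frame) -> tuple[float, float]:
    """Return (mean brightness, contrast measured as population std dev)."""
    pixels = [p for row in frame for p in row]
    return mean(pixels), pstdev(pixels)


def normalize_brightness(frame: Frame, target_mean: float = 128.0) -> Frame:
    """Scale pixel values so the mean brightness moves toward target_mean."""
    current, _ = frame_stats(frame)
    if current == 0:
        return [row[:] for row in frame]  # all-black frame: nothing to scale
    gain = target_mean / current
    # Clamp to 255 so bright pixels do not overflow the 8-bit range.
    return [[min(255, round(p * gain)) for p in row] for row in frame]


def passes_preflight(frame: Frame, min_mean: float = 40.0,
                     max_mean: float = 220.0, min_contrast: float = 10.0) -> bool:
    """Reject frames that are too dark, too bright, or too flat (assumed thresholds)."""
    brightness, contrast = frame_stats(frame)
    return min_mean <= brightness <= max_mean and contrast >= min_contrast
```

A client could run `passes_preflight` on a few sampled frames and ask the user to re-record before any upload happens, which is the bandwidth saving the bullet above describes.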

Media upload + cloud storage

- Secure upload to scalable object storage (S3 or equivalent) and a job queue for processing.

Why inferred: Video and audio assets require storage and asynchronous processing.
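The upload-then-queue pattern can be sketched as follows. This is an illustration under stated assumptions, not the company's backend: `InMemoryStore` stands in for an object store such as S3, `queue.Queue` stands in for a real job queue, and the key layout and `AvatarJob` fields are hypothetical.

```python
import hashlib
import queue
from dataclasses import dataclass
from typing import Optional


@dataclass
class AvatarJob:
    user_id: str
    object_key: str
    status: str = "queued"


class InMemoryStore:
    """Stand-in for an object store; maps keys to raw bytes."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def submit_capture(store: InMemoryStore, jobs: queue.Queue,
                   user_id: str, video: bytes) -> AvatarJob:
    """Store the capture, then enqueue a job that points at it."""
    digest = hashlib.sha256(video).hexdigest()[:16]
    key = f"captures/{user_id}/{digest}.mp4"  # hypothetical key layout
    store.put(key, video)
    job = AvatarJob(user_id=user_id, object_key=key)
    jobs.put(job)
    return job


def run_worker_once(store: InMemoryStore, jobs: queue.Queue) -> Optional[AvatarJob]:
    """Take one job off the queue and process it; return None if the queue is empty."""
    try:
        job = jobs.get_nowait()
    except queue.Empty:
        return None
    job.status = "processing"
    data = store.get(job.object_key)
    # Placeholder for the heavy media-to-avatar step, which would run on GPUs.
    job.status = "done" if data else "failed"
    jobs.task_done()
    return job
```

Decoupling the upload from processing lets the app return immediately while workers drain the queue at their own pace.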

Neural face / body reconstruction pipeline

- Neural rendering or parametric models (e.g., a blend of photogrammetry-lite, NVIDIA-style neural rendering, or lightweight 3D morphable models) to build a controllable 3D face/body rig from the short capture.

Why inferred: The marketing shows a full-body avatar and live lip sync — these require a mapping from video frames to an animated rig or neural renderer.

Speech / voice cloning

- Text-to-speech (TTS) and voice-cloning models (neural vocoders + speaker embeddings) to let the avatar talk in the user’s voice or synthesized voices.

Why inferred: The product claims the avatar “talks like you” and supports multi-language audio output.

Language & dialogue intelligence

- A conversational engine likely combines: (a) a large language model or dialogue manager for intent/response generation, (b) retrieval of user-provided memories/data for personalization, and (c) moderation/safety filters.

Why inferred: Real-time conversation with memory implies LLM-like context handling and personalization.
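The three parts listed above (response generation, memory retrieval, safety filtering) could be wired together roughly like this. Everything here is a hypothetical sketch: `retrieve` and `generate` are caller-supplied callables standing in for a memory lookup and a language model, and the deny-list is a toy substitute for a real moderation system.

```python
from typing import Callable, Sequence

# Hypothetical deny-list, for illustration only; real moderation is far richer.
BLOCKED_TERMS = {"password", "ssn"}


def is_allowed(text: str) -> bool:
    """Return False if the text contains any blocked term."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return words.isdisjoint(BLOCKED_TERMS)


def build_prompt(user_text: str, memories: Sequence[str]) -> str:
    """Combine retrieved memories and the user's message into one prompt."""
    context = "\n".join(f"- {m}" for m in memories) or "- (none)"
    return f"Known facts about the user:\n{context}\n\nUser: {user_text}\nAvatar:"


def respond(user_text: str,
            retrieve: Callable[[str], Sequence[str]],
            generate: Callable[[str], str],
            refusal: str = "Sorry, I can't help with that.") -> str:
    """Filter the input, personalize the prompt, generate, then filter the output."""
    if not is_allowed(user_text):
        return refusal
    prompt = build_prompt(user_text, retrieve(user_text))
    reply = generate(prompt)
    return reply if is_allowed(reply) else refusal
```

Filtering both the input and the generated reply is a common design choice, since a model can produce disallowed content even from a harmless prompt.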

Lip sync and animation

- Real-time viseme mapping (phoneme→mouth shapes), facial expression blending, and body/gesture layers for realism. This can be model-based (neural lip-sync networks) or rule-based on phoneme timing.

Why inferred: Synchronizing speech to believable facial animation is required for convincing avatars.
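The rule-based variant mentioned above is simple enough to sketch. The phoneme labels follow ARPAbet conventions, but the viseme class names and the grouping are assumptions made for this example, not a standard and not 2wai's mapping. Timestamps are integers in milliseconds.

```python
# Hypothetical grouping of phonemes into mouth shapes (visemes).
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "lip_teeth", "V": "lip_teeth",
    "AA": "open", "AE": "open", "AH": "open",
    "OW": "round", "UW": "round", "W": "round",
    "IY": "wide", "EH": "wide",
    "sil": "rest",
}


def to_viseme_track(phonemes):
    """Convert (phoneme, start_ms, end_ms) tuples into a viseme timeline.

    Back-to-back segments that map to the same viseme are merged so the
    animation layer does not retrigger an identical mouth shape.
    """
    track = []
    for phoneme, start, end in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, "neutral")  # fallback shape
        if track and track[-1][0] == viseme and track[-1][2] == start:
            track[-1] = (viseme, track[-1][1], end)
        else:
            track.append((viseme, start, end))
    return track
```

For the word "mama" (M, AA, M, AA) this yields an alternating closed/open sequence that an animation rig could blend between. A neural lip-sync network would replace the lookup table with a learned mapping from audio features.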

Real-time delivery

- Low-latency streaming or on-device rendering with server inference for heavier steps; possible use of WebRTC or proprietary streaming for voice/animation sync.

Why inferred: “Real-time” chat implies latency optimization.

Privacy / ownership / content controls

- Account controls and data policies (the company claims users “own their digital self”), plus content moderation for celebrity/third-party likenesses. The exact implementation details are not public.

Technology:

Batch processing + GPU clusters for media-to-avatar transformations (these are compute-heavy).

Edge or on-device inference where possible for low-latency conversational response and rendering.

Data lifecycle & storage for user videos, voice prints, and avatar models — must include encryption at rest and clear retention policy.

Content moderation and consent workflows — necessary to prevent impersonation and to comply with platform rules and laws.
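As an illustration of the retention-policy point above, the following sketch flags stored assets that have outlived a per-type retention window. The asset kinds and the windows (30 and 365 days) are invented for the example; 2wai's actual retention rules are not public.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per asset kind.
RETENTION = {
    "raw_video": timedelta(days=30),
    "voice_print": timedelta(days=365),
}


@dataclass(frozen=True)
class StoredAsset:
    asset_id: str
    kind: str
    created_at: datetime


def is_expired(asset: StoredAsset, now: datetime) -> bool:
    """True once the asset is older than the window for its kind."""
    limit = RETENTION.get(asset.kind)
    if limit is None:
        # Assumption: kinds without a policy are kept until one is defined.
        return False
    return now - asset.created_at > limit


def assets_to_purge(assets: list[StoredAsset], now: datetime) -> list[str]:
    """Return the IDs of assets a cleanup job should delete."""
    return [a.asset_id for a in assets if is_expired(a, now)]
```

A scheduled job could call `assets_to_purge` with `datetime.now(timezone.utc)` and delete the returned IDs from storage.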

Technology as publicly described:

FedBrain™

- 2wai calls its core system “FedBrain™.”

- According to reporting, FedBrain runs “on-device,” at least in part, to process interactions; this is said to help with privacy and to reduce hallucinations.

- On the 2wai website, they emphasize “full-stack presence — voice, face, and identity” powered by this technology.

- In the App Store listing, they state avatars can “recall past information” and that FedBrain helps “manage access to pre-approved information.”

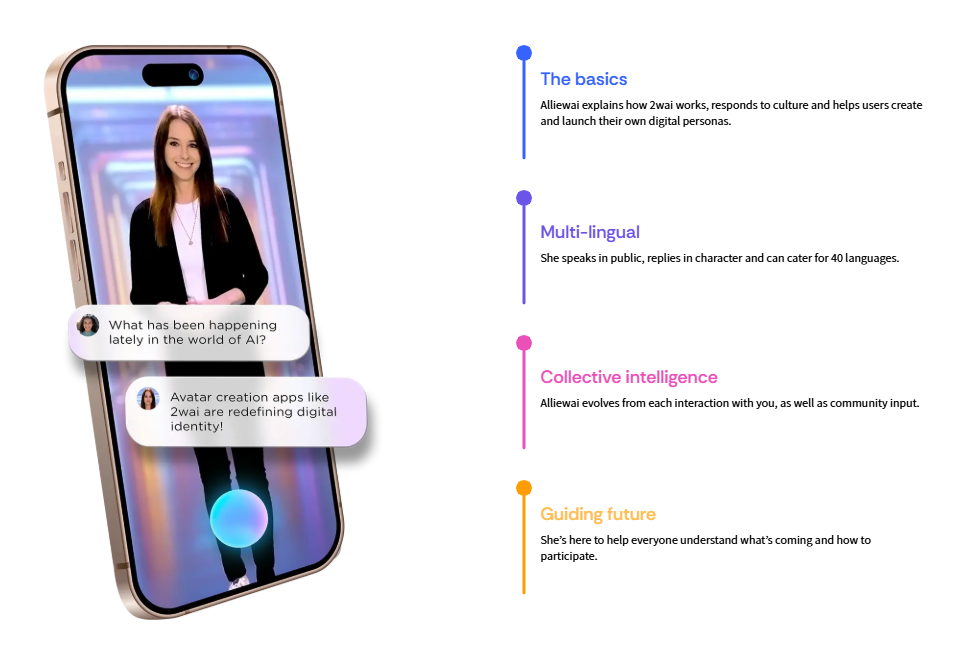

Alliewai

- 2wai launched a “flagship” avatar called Alliewai, which they describe as a real-time, human-realistic AI agent.

- Alliewai is “powered by 2wai’s API and HoloAvatar technology.”

- According to 2wai, Alliewai supports over 40 languages.

On-device / Lightweight Processing

- According to 2wai interview and press material, a “lightweight avatar solution is processed on a user’s device,” which the company says gives “unlimited scalability and cost efficiencies.”

- This suggests that at least part of the inference (or avatar rendering / some model) happens on-device, not entirely in the cloud.

Memory / Personalization

- 2wai’s “Avatar Studio” allows users to record voice memos and “journal” data, which the avatar (via FedBrain) can use as memory.

- This memory data is presumably used by FedBrain to inform responses so that the avatar “remembers” user-specific content.

Verification / Identity Protection

- They note a “proprietary verification process” to protect digital likeness.

- The goal is to ensure that someone can’t hijack or impersonate another person’s likeness in 2wai’s system.

What is inferred or likely (technical models & AI components)

Because 2wai has not published a detailed technical whitepaper, the AI models and system architecture it uses can only be inferred. The likely components and model types are:

Speech (TTS / Voice Cloning)

- To let avatars “talk like you” and in 40+ languages, they probably use voice-cloning / speaker embedding models + a TTS system.

- They may be using diffusion-based or neural codec models for speech generation (similar to recent TTS trends), though the exact model (e.g., NaturalSpeech-style, Tacotron, VITS, or other) is unknown.

- Given on-device processing claims, they may use a smaller, quantized TTS or a lightweight neural vocoder on device, or use hybrid on-device + cloud.

Language / Conversational Model

- To generate responses, 2wai likely uses a large language model (LLM) or a dialogue-specific LLM. This LLM would be combined with memory (the journal / voice data) to produce personalized conversational behavior.

- There may also be retrieval systems (to fetch user memory or pre-approved content) + safety / moderation filters.

Avatar Rendering / Animation / Visual Synthesis

- To convert a selfie video into a “HoloAvatar,” 2wai needs an avatar generation / reconstruction pipeline: likely neural rendering or 3D morphable model + blend shapes + expression mapping.

- For lip-sync, they may be using a viseme-mapping model or a neural lip-sync network: you feed text or speech, and the system drives the avatar’s mouth / expressions.

- There might also be a motion / gesture model to animate body or head movements, though the public materials focus heavily on face and voice.

On-device Inference / Edge AI

- Because 2wai claims “on-device” processing for avatars, they may use quantized models, model pruning, or distillation to make the model small enough to run on a phone (or at least parts of it).

- Some part of FedBrain likely runs locally (or hybrid), rather than purely in the cloud, for faster response and privacy.
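Of the size-reduction techniques named above, quantization is the easiest to show in a few lines. The sketch below applies symmetric per-tensor int8 quantization to a plain list of weights. It illustrates the general technique only; nothing is known about which method, if any, 2wai uses, and the function names are made up for this example.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]
```

Each weight then needs one byte instead of four (for float32), at the cost of a rounding error of at most half the scale per weight. Production toolchains usually quantize per channel and calibrate activations as well, which this sketch omits.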

Memory / Context Model

- A memory system (“Avatar Memory Map,” as one report calls it) would track past conversations and user-provided voice memos / journal entries, and feed that context into responses.

- This requires a storage + retrieval architecture: embeddings of memory, possibly a vector database, plus a policy / logic to decide what to bring into the conversational context.
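The storage-plus-retrieval idea can be sketched with a toy in-memory store. To stay self-contained, `embed` uses a bag-of-words vector as a stand-in for a learned embedding model, and `MemoryStore` stands in for a vector database; both are assumptions for illustration, not a description of FedBrain.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Keeps (text, vector) pairs and returns the closest matches to a query."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 3) -> list[str]:
        q = embed(query)
        scored = [(cosine(q, vec), text) for text, vec in self._items]
        # Policy choice: drop memories with no overlap instead of padding to k.
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [text for _, text in scored[:k]]
```

The memories returned by `search` would then be inserted into the conversational context. The zero-score filter is one simple example of the "what to bring into context" policy mentioned above.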