The integration of Artificial Intelligence (AI) into mobility solutions is transforming the landscape of assistive technologies. One of the most promising innovations in this domain is the AI-powered autonomous wheelchair. These intelligent mobility aids combine robotics, computer vision, machine learning, and real-time localization systems to enable enhanced autonomy, safety, and user experience for individuals with mobility impairments.

Core Technologies That Make It Possible

- 🧭 SLAM (Simultaneous Localization and Mapping): Helps the wheelchair map and understand the layout of its environment in real time.

- 👀 Computer Vision: Cameras + AI = obstacle detection, sign recognition, facial recognition, and object tracking.

- 📡 Sensor Fusion: Combining LiDAR, GPS, IMUs, and ultrasonic sensors for accurate and redundant navigation.

- 🗣️ Human-Machine Interface (HMI): Voice, eye-tracking, touch, or even brain signals can control the chair.

- 🧠 Predictive AI: Learns the user’s routine and preferences over time to improve decision-making and automation.

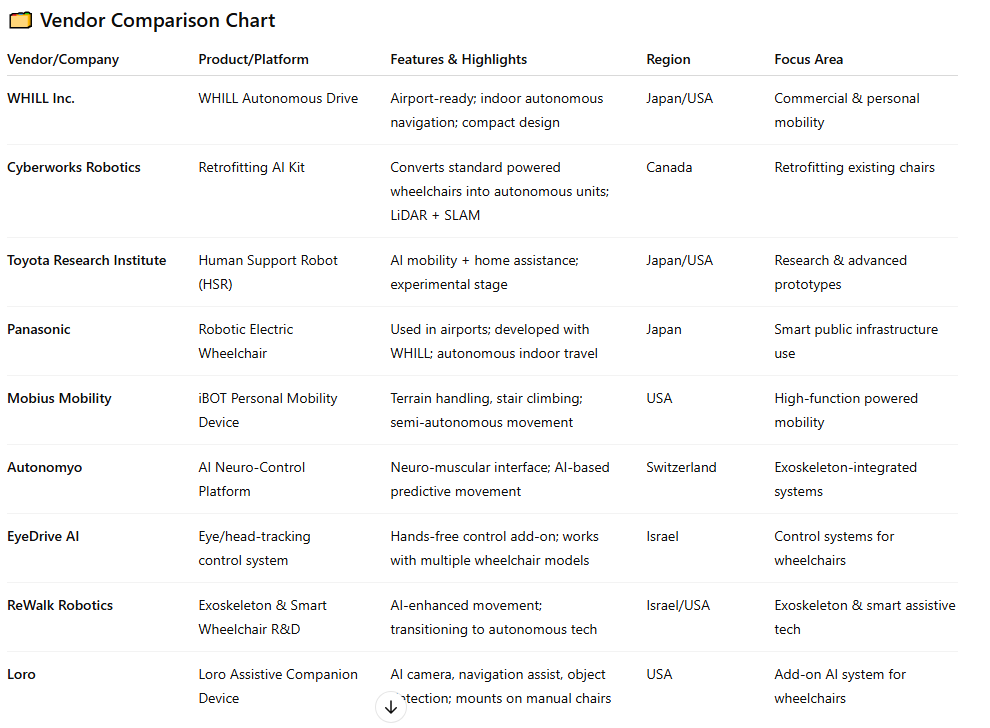
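To make the sensor fusion idea above a little more concrete, here is a minimal Python sketch of one common textbook approach: inverse-variance weighting of independent distance readings. It is not taken from any specific wheelchair product. The function name `fuse_ranges` and the noise figures in the example are assumptions chosen purely for illustration.

```python
def fuse_ranges(measurements):
    """Fuse independent range readings by inverse-variance weighting.

    measurements: list of (range_m, std_dev_m) tuples, one per sensor.
    Returns (fused_range_m, fused_std_dev_m).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    # A sensor with a smaller standard deviation gets a larger weight.
    weights = [1.0 / (sigma ** 2) for _, sigma in measurements]
    total = sum(weights)
    fused = sum(w * r for w, (r, _) in zip(weights, measurements)) / total
    # The fused estimate is never noisier than the best single sensor.
    return fused, (1.0 / total) ** 0.5


# Hypothetical readings: a LiDAR (assumed 5 cm noise) and an
# ultrasonic sensor (assumed 20 cm noise) looking at the same obstacle.
distance, sigma = fuse_ranges([(2.0, 0.05), (2.3, 0.20)])
print(f"{distance:.2f} m, sigma {sigma:.2f} m")  # 2.02 m, sigma 0.05 m
```

The fused value stays close to the more precise sensor, while the second sensor still provides redundancy if the first one drops out.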

Research Institutions and Startups to Watch

- MIT CSAIL (USA) – Advanced autonomous wheelchair research using computer vision and SLAM.

- Singapore-MIT Alliance for Research and Technology (SMART) – Projects on autonomous wheelchairs in smart environments.

- University of Tsukuba (Japan) – Leading trials on voice- and joystick-controlled AI wheelchairs.

Cyberworks Robotics – Autonomous Wheelchair

Wheelchair AMR: Key Features

✓ Custom Designed for Airport & Hospital PRM Services

✓ World’s Most Popular Ultra-Heavy Duty Powered Chair

✓ No Visual Tags or Beacons

✓ FDA Certified Chair

✓ Local Service & Support

✓ Smallest Footprint for Storage & Agility

Predictive Collision Rapid Response (PCRR)

DESCRIPTION

Novel technology that allows our navigation system to react evasively to obstacles faster than competitive systems.

PERFORMANCE COMPARISON

- Cyberworks Robotics: Our improved perception runs at 10 Hz, enabling faster evasive reactions to obstacles, reducing reaction time by up to 50%, drastically improving operational safety, and minimizing collision risk in dynamic environments.

- Competitive Alternatives: Slower reaction time with an average update rate of 2 Hz.
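To put the two update rates quoted above in physical terms, here is a minimal Python sketch that computes how far a chair travels between two consecutive perception updates. The travel speed of 1.5 m/s and the function name are assumptions for illustration, not figures from Cyberworks. The perception period is also only one component of the total reaction time, so this is not a reconstruction of the 50% figure.

```python
def distance_between_updates(speed_m_s, update_rate_hz):
    """Distance travelled during one perception update period (metres)."""
    if update_rate_hz <= 0:
        raise ValueError("update rate must be positive")
    # One update period lasts 1 / update_rate_hz seconds.
    return speed_m_s / update_rate_hz


ASSUMED_SPEED_M_S = 1.5  # hypothetical brisk indoor travel speed

for rate_hz in (10, 2):
    gap_m = distance_between_updates(ASSUMED_SPEED_M_S, rate_hz)
    print(f"{rate_hz} Hz: {gap_m:.2f} m travelled between updates")
# 10 Hz: 0.15 m travelled between updates
# 2 Hz: 0.75 m travelled between updates
```

Under these assumptions the chair moves five times farther "blind" at 2 Hz than at 10 Hz before the next perception result arrives.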

Visual Initial Orientation Referencing (VIOR)

DESCRIPTION

No need for physical markers! Our novel computer vision and machine learning technology eliminates the need to place visual markers in the building to establish the initial orientation of an AMR.

PERFORMANCE COMPARISON

- Cyberworks Robotics: Cyberworks' proprietary technology ensures that the initial position and orientation of the robot are correct and does not rely on external visual markers such as AprilTags.

- Competitive Alternatives: Require placing visual markers, which can be lost or misplaced and which limit the number of localization spots.

Complex Crowd Navigation (CCN)

DESCRIPTION

Navigate through highly dynamic crowded environments!

PERFORMANCE COMPARISON

- Cyberworks Robotics: Cyberworks’ seamless integration of perception, navigation, and control enables robots to handle dynamic, crowded environments with ease.

- Competitive Alternatives: Competitive navigation stacks have difficulty handling highly dynamic situations, resulting in stop-and-go or unpredictable behaviours.

Transient Anomaly Recovery (TAR)

DESCRIPTION

Automatically detects and recovers from transient signal failures (like loose connections or intermittent sensors).

PERFORMANCE COMPARISON

- Cyberworks Robotics: Cyberworks’ TAR works in the background, monitoring system metrics to ensure that all system parameters are within their expected ranges. When a failure is detected, it is classified, and TAR automatically recovers the robot when possible.
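The general pattern described here (watch a metric, check it against an expected range, classify what was seen) can be sketched in a few lines of Python. This is a generic illustration and not Cyberworks' implementation. The names `Limit` and `classify`, the three labels, and the battery-voltage numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    """Expected operating range for one monitored metric (hypothetical)."""
    low: float
    high: float


def classify(history, limit):
    """Classify a metric from its recent samples, oldest first.

    Returns "ok" if every sample is in range, "recovered" if a fault was
    seen but the latest sample is back in range (a transient glitch),
    and "active" if the latest sample is still out of range.
    """
    out_of_range = [not (limit.low <= value <= limit.high) for value in history]
    if not any(out_of_range):
        return "ok"
    if not out_of_range[-1]:
        return "recovered"
    return "active"


# Assumed example: a nominal 24 V battery rail with a brief dropout,
# as a loose connector might produce.
battery_limit = Limit(low=22.0, high=26.0)
print(classify([24.1, 3.0, 24.0], battery_limit))  # recovered
```

A real monitor would typically act on the label, for example by retrying a sensor connection for "active" faults and only logging "recovered" ones.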

Predictive Failure Blackbox Recorder (PFBR)

DESCRIPTION

An “aircraft style” blackbox recorder that captures transient or intermittent failures to aid in rapid repairs.

PERFORMANCE COMPARISON

- Cyberworks Robotics: Cyberworks’ PFBR records all relevant information when a transient or intermittent failure occurs, so the support team can make rapid repairs.
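A blackbox recorder of this kind is commonly built around a fixed-size ring buffer that always holds the most recent samples and is frozen into a snapshot when a failure is flagged. The Python sketch below shows that generic idea using the standard library's `collections.deque`. It makes no claim about how PFBR works internally, and the class and method names are hypothetical.

```python
from collections import deque


class BlackboxRecorder:
    """Keep the most recent samples and snapshot them on failure."""

    def __init__(self, capacity):
        # deque with maxlen silently discards the oldest entry when full.
        self._buffer = deque(maxlen=capacity)
        self.snapshots = []

    def record(self, sample):
        """Store one telemetry sample (any Python object)."""
        self._buffer.append(sample)

    def on_failure(self, label):
        """Freeze a copy of the current buffer for later inspection."""
        self.snapshots.append({"label": label, "samples": list(self._buffer)})


recorder = BlackboxRecorder(capacity=3)
for reading in [10, 11, 12, 13, 14]:
    recorder.record(reading)
recorder.on_failure("encoder dropout")  # hypothetical failure label
print(recorder.snapshots[0]["samples"])  # [12, 13, 14]
```

Because the buffer is bounded, memory use stays constant no matter how long the chair runs between failures.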

Autonomous to Manual Toggling (AMT)

DESCRIPTION

Switch from Full Self-Driving to Smart Manual ADAS Modes on the fly.

PERFORMANCE COMPARISON

- Cyberworks Robotics: Let the user take control! Our smart obstacle detection system actively prevents collisions during manual navigation, while our interface continuously guides the user toward the goal throughout the entire process.

- Competitive Alternatives: This capability is not available in competitive systems; conventional stacks do not offer smart manual control with collision prevention and directional guidance.
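One simple way to picture collision prevention during manual driving is a speed limiter that caps the user's joystick command so the chair can always brake before the nearest obstacle. The Python sketch below uses the constant-deceleration stopping formula v = sqrt(2·a·d). It is an illustrative assumption, not the method used in AMT, and the function name, deceleration, and safety margin are hypothetical values.

```python
import math


def limit_speed(requested_m_s, obstacle_distance_m,
                max_decel_m_s2=1.0, margin_m=0.3):
    """Cap a forward speed command so the chair can stop in time.

    Handles forward motion only. The deceleration and margin defaults
    are assumed values for illustration.
    """
    stopping_room = obstacle_distance_m - margin_m
    if stopping_room <= 0:
        return 0.0  # already inside the safety margin: do not advance
    # Highest speed from which braking at max_decel stops within stopping_room.
    safe_speed = math.sqrt(2.0 * max_decel_m_s2 * stopping_room)
    return max(0.0, min(requested_m_s, safe_speed))


# The user asks for 1.5 m/s in each case.
print(limit_speed(1.5, 5.0))  # open corridor: command passes through unchanged
print(limit_speed(1.5, 0.8))  # obstacle close ahead: command is reduced
print(limit_speed(1.5, 0.2))  # inside the margin: command is zeroed
```

The user stays in control of direction and intent, while the limiter only intervenes as the available stopping room shrinks.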

Light Artifact Rejection Technology (LART)

DESCRIPTION

Sophisticated machine vision algorithms allow operation in areas of intense reflected light, which causes competitive technologies to become confused by “false obstacles”.

PERFORMANCE COMPARISON

- Cyberworks Robotics: Our multi-level post-processing filtering for LiDAR and depth cameras is optimized for real-world applications to counter intense reflections of direct sunlight.

- Competitive Alternatives: Limited to the filtering provided by the sensor’s processing unit, which cannot handle intense reflected light and creates false obstacles.

Edge-Case Anomaly Shield (ECAS)

DESCRIPTION

Integration of over two dozen cutting-edge technology innovations, drastically reducing common autonomous navigation edge-case failures.

PERFORMANCE COMPARISON

- Cyberworks Robotics: Under 0.005 anomalous interventions per hour in a reference test setting at GRR airport.

- Competitive Alternatives: An average of 1 intervention per hour using competitive or open-source navigation stacks.
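The two intervention rates quoted above are easier to compare when converted to hours of operation per intervention. The short Python sketch below does that arithmetic using only the figures stated in this section. The function name is hypothetical, and because the Cyberworks figure is given as an upper bound ("under 0.005"), the results for it are lower bounds.

```python
def hours_between_interventions(interventions_per_hour):
    """Average operating hours per intervention for a given rate."""
    if interventions_per_hour <= 0:
        raise ValueError("rate must be positive")
    return 1.0 / interventions_per_hour


cyberworks_rate = 0.005  # quoted upper bound, interventions per hour
competitor_rate = 1.0    # quoted average, interventions per hour

print(f"{hours_between_interventions(cyberworks_rate):.0f} h")  # 200 h
print(f"{hours_between_interventions(competitor_rate):.0f} h")  # 1 h
print(f"{competitor_rate / cyberworks_rate:.0f}x fewer")        # 200x fewer
```

In other words, the quoted figures correspond to at least 200 hours of operation per intervention in the reference test, against roughly one hour for the alternatives.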