Imagine writing: “warm lo-fi piano, gentle vinyl crackle, distant saxophone solo, 90 BPM, nostalgic evening” — and getting back a finished, multi-minute, mix-ready track that you can legally use in a podcast, ad, game or as the backing for your next viral short.

That future is here. Text-to-music models, sometimes called text-to-audio generators, are rapidly moving from research demos into production tools that are reshaping how music is composed, licensed and integrated into creative pipelines.

How text-to-music models work (short technical primer)

- Prompt → embedding → audio representation: Most modern pipelines encode the natural-language prompt into an embedding (a semantic representation), then use it to condition a model that generates an intermediate audio representation: discrete codec tokens, a spectrogram, or a latent that is refined over successive diffusion steps. A decoder then turns that representation into a waveform. Some systems also accept optional conditioning inputs, such as a melody hummed by the user, stems, or MIDI, to add structure.

- Two common architectures: (1) autoregressive, token-based models that generate compressed audio tokens step by step (e.g., older research like OpenAI Jukebox) and (2) latent diffusion models that denoise a spectrogram or latent audio representation and then decode it to a waveform (used by many modern systems for better fidelity and control). Both families commonly use transformer layers internally, so "transformer" describes a building block rather than a third architecture.
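
The prompt → embedding → tokens → waveform flow described above can be sketched with toy stand-ins. Everything in this block is an illustrative assumption: `embed_prompt`, `generate_tokens` and `decode_tokens` are hypothetical names, the "encoder" is just a hash, and the "generator" is a seeded random walk rather than a trained model. It only shows how the stages hand data to each other.

```python
import hashlib
import random
from typing import List

VOCAB_SIZE = 16  # toy codebook size (an assumption; real codecs use far larger codebooks)
EMBED_DIM = 8    # toy embedding width


def embed_prompt(prompt: str, dim: int = EMBED_DIM) -> List[float]:
    """Stand-in text encoder: hash each word and average into a fixed-size vector."""
    words = prompt.lower().split()
    vec = [0.0] * dim
    for word in words:
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        for i in range(dim):
            vec[i] += digest[i] / 255.0
    count = max(len(words), 1)
    return [v / count for v in vec]


def generate_tokens(embedding: List[float], n_tokens: int, seed: int = 0) -> List[int]:
    """Stand-in autoregressive generator: each token depends on the
    prompt embedding (via the start value) and on the previous token."""
    rng = random.Random(seed)
    prev = int(sum(embedding) * 1000) % VOCAB_SIZE  # conditioning on the prompt
    tokens = []
    for _ in range(n_tokens):
        prev = (prev + rng.choice([-1, 0, 1])) % VOCAB_SIZE
        tokens.append(prev)
    return tokens


def decode_tokens(tokens: List[int], samples_per_token: int = 4) -> List[float]:
    """Stand-in codec decoder: map each token to a few samples in [-1, 1]."""
    wave: List[float] = []
    for token in tokens:
        level = 2.0 * token / (VOCAB_SIZE - 1) - 1.0
        wave.extend([level] * samples_per_token)
    return wave


if __name__ == "__main__":
    emb = embed_prompt("warm lo-fi piano, gentle vinyl crackle, 90 BPM")
    toks = generate_tokens(emb, n_tokens=12)
    audio = decode_tokens(toks)
    print(len(emb), len(toks), len(audio))
```

In a real system each stand-in would be a trained network: a text encoder, a token or diffusion generator, and a neural audio decoder.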

Why this is disruptive

- Democratizes composition: non-musicians can produce context-aware, original music almost instantly for podcasts, ads and creator content.

- Massively lowers production cost & time — what used to take a studio and days can now be prototyped in seconds and mixed in minutes. (Enterprise models are already pitching multi-minute, near-studio quality outputs.)

- New creative workflows — hybrid human+AI songwriting, iterative prompt-based composition, API integration into games/apps for dynamic soundtracks.

- Licensing & business models are changing: royalty-free, subscription and API pricing models are replacing bespoke licensing for many use cases, but major labels and copyright disputes are shaping what features can ship publicly.

Key features that separate consumer from pro tools

When evaluating or building a text-to-music product, look for:

- Prompt expressivity & control — support for natural language plus tokens for tempo, key, instruments, stems or mood.

- Length & structure controls — ability to generate short loops, 30–180s full tracks, or multi-section songs with chorus/verse structure. (Some models now produce coherent multi-minute pieces.)

- Instrument / vocal fidelity — quality of synthesized instruments and realism of vocals (if offered). Some research models can emulate singer-like vocals; commercial offerings often avoid imitation of specific artists for legal reasons.

- Conditional inputs — melody upload (hum or MIDI), stem editing, or image→music conditioning for richer control.

- Mix & mastering tools — onboard effects, stem exports (separate drums/bass/lead), and mastering presets for quick release.

- Licensing clarity — commercial/royalty terms, safe mode (avoids imitating living artists), and attribution rules. This is a decisive product differentiator today.

- API & integration: REST APIs and SDKs that can be embedded in games, video editors, DAWs, and streaming applications.
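
To make the control and integration points above concrete, here is a minimal sketch of how a client might package a prompt plus structured controls into a JSON request body. The `GenerationRequest` class, its field names, and the validation limits are all hypothetical and do not correspond to any specific vendor's API; the 180-second cap simply mirrors the track-length range mentioned above.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class GenerationRequest:
    """Hypothetical request shape for a text-to-music service."""
    prompt: str
    duration_s: int = 30                  # requested track length in seconds
    bpm: Optional[int] = None             # tempo control, if the service supports it
    key: Optional[str] = None             # e.g. "A minor"
    instruments: List[str] = field(default_factory=list)
    export_stems: bool = False            # ask for separate drums/bass/lead files
    safe_mode: bool = True                # avoid imitating specific artists

    def validate(self) -> None:
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if not 1 <= self.duration_s <= 180:   # illustrative limit only
            raise ValueError("duration_s must be between 1 and 180")
        if self.bpm is not None and not 40 <= self.bpm <= 240:
            raise ValueError("bpm must be between 40 and 240")

    def to_json(self) -> str:
        """Validate, drop unset optional fields, and serialize."""
        self.validate()
        payload = {k: v for k, v in asdict(self).items()
                   if v is not None and v != []}
        return json.dumps(payload, sort_keys=True)


if __name__ == "__main__":
    request = GenerationRequest(
        prompt="warm lo-fi piano, gentle vinyl crackle, distant saxophone solo",
        duration_s=90,
        bpm=90,
        instruments=["piano", "saxophone"],
    )
    print(request.to_json())
```

Keeping tempo, key and stem options as separate fields rather than burying them in the prompt text makes requests easier to validate and to drive from a game engine or editor UI.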

Products & Platforms

Meta — MusicGen (AudioCraft / MusicGen demo on Hugging Face) — research + open demos that turn text (and optional melody) into short musical clips; accessible via Hugging Face spaces and used by producers to prototype ideas. Good for experimenting with melody conditioning.
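
For readers who want to try MusicGen locally, the following is a minimal sketch using the Hugging Face `transformers` integration. It assumes `transformers` and PyTorch are installed and that the `facebook/musicgen-small` checkpoint can be downloaded. The helper names `tokens_for_duration` and `generate_clip` are ours, and the 50 frames-per-second figure is an approximation used to convert seconds into a token budget.

```python
FRAME_RATE_HZ = 50  # approximate token frames per second of audio for MusicGen


def tokens_for_duration(seconds: float) -> int:
    """Convert a target clip length into a max_new_tokens budget."""
    return int(round(seconds * FRAME_RATE_HZ))


def generate_clip(prompt: str, seconds: float = 5.0,
                  checkpoint: str = "facebook/musicgen-small"):
    """Generate a short clip; returns (mono waveform as numpy array, sample rate)."""
    # Imported lazily so the helper above works without the heavy dependencies.
    from transformers import AutoProcessor, MusicgenForConditionalGeneration

    processor = AutoProcessor.from_pretrained(checkpoint)
    model = MusicgenForConditionalGeneration.from_pretrained(checkpoint)

    inputs = processor(text=[prompt], padding=True, return_tensors="pt")
    audio = model.generate(
        **inputs,
        do_sample=True,
        guidance_scale=3,
        max_new_tokens=tokens_for_duration(seconds),
    )
    sampling_rate = model.config.audio_encoder.sampling_rate
    # Output shape is (batch, channels, samples); take the first clip and channel.
    return audio[0, 0].cpu().numpy(), sampling_rate


if __name__ == "__main__":
    waveform, rate = generate_clip("warm lo-fi piano, nostalgic evening, 90 BPM")
    print(waveform.shape, rate)
```

The first run downloads the model weights, so expect a delay; generation on CPU works for short clips but is slow.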

Stability AI — Stable Audio / Stable Audio 2.x — text-to-audio models that have shipped multi-minute music generation with studio-grade claims; Stability positions this line for enterprise sound production with models of varying sizes (including “open” and enterprise versions). Useful for teams needing longer coherent tracks and enterprise licensing.

Mubert — focused on royalty-free, promptable music for creators and product integrations; strong API and live soundtrack generation for apps, ads and streamers. Known for loop-ready outputs and commercial licensing.

Boomy — consumer product for instant song creation, distribution and monetization (streaming royalties). Extremely easy UX for creators who want immediate publishable tracks without music skills.

Soundful — targets creators and streamers with quick royalty-free track generation, stems/MIDI exports and DAW-friendly outputs. Good balance between ease of use and raw export flexibility.

AIVA — long-standing AI composer focused on scoring and style control; strong in producing themed pieces, film scoring cues and offering style models with MIDI/score outputs for downstream editing. Useful for composers wanting AI as a co-composer that outputs editable MIDI.

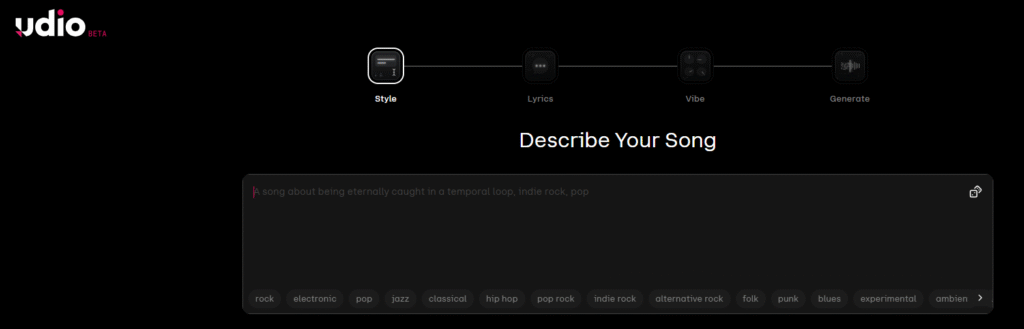

Udio: text-to-music generator aimed at both casual users and working musicians. Udio describes its use as spanning the whole production process, from ideation to generating stems for commercial release, with export to a DAW and AI-assisted editing of uploaded audio.