Apple Intelligence is the personal intelligence system that brings powerful generative models to iPhone, iPad, and Mac. For advanced features that need to reason over complex data with larger foundation models, we created Private Cloud Compute (PCC), a groundbreaking cloud intelligence system designed specifically for private AI processing. For the first time ever, Private Cloud Compute extends the industry-leading security and privacy of Apple devices into the cloud, making sure that pers...

Author: IE Contributor

Kapton Tape Supercapacitors

Kapton tape supercapacitors built using Laser-Induced Graphene represent one of the most accessible and scalable approaches to flexible energy storage. By directly converting inexpensive polyimide into conductive, porous graphene foam, researchers can create high-performance supercapacitors suitable for wearables, IoT devices, robotics, and smart textiles.

LIG-based supercapacitors combine simplicity, low cost, flexibility, and high power density, positioning them as a cornerstone for the ne...

‘vsco.co’ – Visual Supply Company, a creative platform for photography and videography

VSCO (vsco.co) is much more than a photo-filter app. It’s a creative ecosystem: a place to shoot, edit, share, learn, and do business — all centered on aesthetics, craftsmanship, and community. Their shift into AI, pro tools, and brand marketplaces signals a mature, ambitious strategy to empower creators at scale while staying true to their roots.

Future Outlook

AI Expansion: Given the promise of semantic search in VSCO Hub and AI editing tools in AI Lab, VSCO is likely to continue b...

‘2wai.ai’ – AI avatar app that recreates deceased loved ones in interactive mode

2wai (pronounced “two-way”) is a consumer-facing mobile startup that lets people create and interact with lifelike “HoloAvatars” — AI-driven digital twins or avatars that look, speak and (the company says) remember like the person they represent.

The product is presented as a social app and “the human layer for AI”: users record short videos with a phone camera, upload them into the app, and within minutes can create an avatar they can chat with in real time and in many languages.

2wai i...

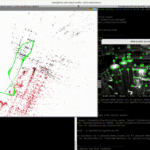

‘VSLAM: ORB-SLAM3’

SLAM (simultaneous localization and mapping) is a major research problem in the robotics community, where a great deal of effort has been devoted to developing new methods that maximize robustness and reliability.

VSLAM (visual SLAM) uses one or more cameras as the primary sensor input for perceiving the surrounding environment. This can be done with a single camera or multiple cameras, and with or without an inertial measurement unit (IMU) that measures translational and rotational...

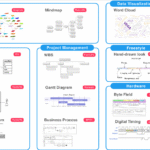

‘Kroki’ – create technology diagrams by providing simple text

Kroki transforms infrastructure, software systems, workflow models, and architecture diagrams into text-driven, reproducible assets. This makes it invaluable for:

Cloud architects

DevOps engineers

Technical documentation teams

Software designers

Research & academia

By treating diagrams as code, Kroki helps ensure accuracy, consistency, automation, and maintainability across technical documentation pipelines.
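As a hedged illustration of the diagrams-as-code idea, the sketch below builds a Kroki GET URL from plain diagram text. It assumes the public `https://kroki.io` endpoint and the deflate-plus-base64url encoding described in Kroki's own documentation; the function name `kroki_url` and the sample Graphviz source are illustrative and do not come from the article.

```python
import base64
import zlib


def kroki_url(
    diagram_source: str,
    diagram_type: str = "graphviz",
    output_format: str = "svg",
    base_url: str = "https://kroki.io",  # assumption: public instance
) -> str:
    """Build a GET URL that asks a Kroki server to render diagram text."""
    # Compress the diagram text with zlib (deflate) at maximum level.
    compressed = zlib.compress(diagram_source.encode("utf-8"), 9)
    # Encode the compressed bytes with the URL-safe base64 alphabet.
    encoded = base64.urlsafe_b64encode(compressed).decode("ascii")
    return f"{base_url}/{diagram_type}/{output_format}/{encoded}"


if __name__ == "__main__":
    # Hypothetical two-node diagram used only for demonstration.
    print(kroki_url("digraph G { a -> b }"))
```

Because the URL is derived entirely from the text, the diagram source can live in version control and be re-rendered in a documentation pipeline. A self-hosted instance would only change `base_url`.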

Kroki is an open-source diagram rendering service that conve...

‘Georeferencing Frames’

Georeferencing frames means assigning real-world coordinates (a spatial reference) to image or video frames so that each pixel, and each camera pose, is expressed in a common coordinate system. In indoor contexts (where GNSS/GPS is unavailable or weak), georeferencing is done by fusing computer vision (photogrammetry / visual SLAM), sensor data (IMU, LiDAR), and control references (targets, surveyed control points, or known anchors). This lets you convert raw video or 360° imagery into accurate 2D f...
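To make the pixel-to-coordinate idea concrete, here is a minimal sketch that fits a 2D affine transform from three control points and uses it to georeference a pixel. It is a deliberate simplification: it assumes a flat scene viewed without lens distortion, whereas a real indoor pipeline would fuse SLAM poses and sensor data. The function names and the sample coordinates are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Affine = Tuple[List[float], List[float]]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("control points are collinear")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(_det3(m) / d)
    return solution


def fit_affine(pixels: Sequence[Point], world: Sequence[Point]) -> Affine:
    """Fit x = a*px + b*py + c and y = d*px + e*py + f from 3 control points."""
    a = [[px, py, 1.0] for px, py in pixels]
    return _solve3(a, [w[0] for w in world]), _solve3(a, [w[1] for w in world])


def pixel_to_world(coeffs: Affine, pixel: Point) -> Point:
    """Map a pixel (column, row) to world coordinates using the fitted affine."""
    (a, b, c), (d, e, f) = coeffs
    px, py = pixel
    return (a * px + b * py + c, d * px + e * py + f)


if __name__ == "__main__":
    # Hypothetical control points: pixel positions and surveyed coordinates (metres).
    coeffs = fit_affine([(0, 0), (100, 0), (0, 100)],
                        [(10.0, 20.0), (15.0, 20.0), (10.0, 15.0)])
    print(pixel_to_world(coeffs, (50, 50)))
```

With more than three control points, a least-squares fit (or a full homography) would usually be preferred, since it averages out survey and detection error.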

AI Music Creation Using Text Prompt

Imagine writing: “warm lo-fi piano, gentle vinyl crackle, distant saxophone solo, 90 BPM, nostalgic evening” — and getting back a finished, multi-minute, mix-ready track that you can legally use in a podcast, ad, game or as the backing for your next viral short.

That future is here. Text-to-music models — sometimes called text-to-audio or text-to-music generators — are rapidly moving from research demos into production tools that are reshaping how music is composed, licensed and integrated ...

Toyota COMS-X smart delivery vehicle

The Toyota COMS is a very small, single-seater electric vehicle (EV) developed by Toyota Auto Body (a subsidiary of Toyota Motor Corporation) in Japan.

“COMS-X Smart Delivery Vehicle” is essentially a commercial/utility variant of the COMS micro-EV platform, tailored for light-duty delivery and last-mile applications. In other words: a compact EV with a delivery box or container, built for urban logistics rather than private commuter use.

Vehicle class & platform

Single-seat...

‘Altium’ – electronic design automation (EDA) for PCB design and electronics product development.

Altium is one of the best-known vendors in electronic design automation (EDA) focused on PCB design and electronics product development. Its flagship desktop product, Altium Designer, together with cloud services such as Altium 365 and collaboration/enterprise offerings, positions the company as a one-stop environment for schematic capture, PCB layout, simulation, mechanical co-design, data management and manufacturing output. Below I’ll explain the platform, key technical details, how teams ty...

Google Pomelli – Prompt-based AI-powered marketing tool to help rapidly generate marketing campaigns

Pomelli is a newly announced AI-powered marketing tool developed by Google Labs in partnership with DeepMind. It is positioned as an “experiment” (i.e., an early-stage product) aimed at helping small-to-medium-sized businesses (SMBs) rapidly generate marketing campaigns that are on-brand, scalable, and streamlined.

The core idea: many SMBs don’t have large budgets, dedicated design/creative teams, or deep marketing resources. Pomelli aims to bridge that gap by “understanding” the business brand...

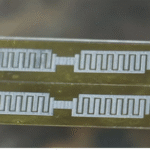

University of Pittsburgh – Amir Alavi & team have built a Wireless Metamaterial Interbody Cage for Real-Time Assessment of Lumbar Spinal Fusion In Vivo

A collaboration between civil engineering and neurosurgery at the University of Pittsburgh could change how spinal fusion surgery is performed and monitored. Associate Professors Amir Alavi, Nitin Agarwal, and D. Kojo Hamilton have received a $352,213 National Institutes of Health (NIH) R21 grant to develop the first self-powered spinal implant capable of transmitting real-time data from inside the body.

The transdisciplinary project, “Wireless Metamaterial...

‘VIGOZ’ combines cycling and electric vehicle in one

French mobility startup CIXI (based in Annecy, Haute-Savoie) is developing a novel “active vehicle” called the VIGOZ that seeks to combine elements of cycling and electric vehicle driving into one platform. The slogan: a vehicle you pedal that still functions on highways, giving you both physical activity and transport.

The Concept & Vision

CIXI positions the VIGOZ as part of a shift from passive commuting to active mobility. Founder Pierre Francis describes how everyday commuting (...

Screenbox – open-source media player for Windows

Screenbox is an open-source, LibVLC-based media player built specifically for Windows (UWP/WinUI) that aims to combine VLC-level codec support with a modern, Fluent-style Windows UI, picture-in-picture, media-library browsing, network playback and Xbox compatibility. It’s actively developed on GitHub, distributed through the Microsoft Store (and winget), licensed under GPL-3.0, and has become a popular “VLC alternative” for users who prefer a sleeker Windows-native experience.

Modern Fluent ...

AI DJ

Machine learning and real-time audio tools now help pick tracks, beatmatch, isolate stems (vocals/drums/bass), create seamless transitions, and even speak or respond like a radio DJ. Some tools are aimed at casual listeners (auto-mixing your playlists), others add AI as a creative assist for working DJs and producers (real-time stem separation, automated transitions, tempo/key matching), and a few are hybrid systems that let you perform or run background mixes with minimal effort.

Better real-t...

PRIMA BCI Implant Restores Functional Central Vision to Patients with Geographic Atrophy Caused By Age-Related Macular Degeneration

A tiny wireless subretinal implant called PRIMA—a photovoltaic microchip placed beneath the retina and used with a camera-equipped pair of glasses and a pocket processor—has been shown in a multicenter clinical trial to partially restore functional central vision in patients with geographic atrophy (advanced “dry” AMD). At 12 months many trial participants could read letters, numbers and words using the system; mean gains on a standard ETDRS chart were large and clinically meaningful.

A reti...

Small AI Model for Image Geolocation

Determining where a photo was taken from pixels alone — image geolocation — is one of the most exciting and practical computer-vision tasks. It’s useful for OSINT/GEOINT, content moderation, user-photo enrichment, heritage/archaeology, wildlife tracking, and fraud detection. Historically this field used huge databases or heavy models; in recent years researchers and engineers have shown that small, efficient models — or small pipelines combining lightweight models with clever retrieval — can de...

ChatGPT as your personal shopper with ‘Instant Checkout’

Imagine you’re chatting with ChatGPT: you say “I’d like a durable hiking backpack for weekend trips, under $150” or perhaps “surprise me with a thoughtful birthday gift for my aunt who loves gardening and reading”. Instead of just listing links, ChatGPT presents tailored product recommendations, helping you compare features, read summaries of reviews, evaluate price-versus-value, and then (here’s the kicker) lets you go from conversation → click to buy without ever leaving the chat. That’s the ...

AI Orchestration Platform

Helps design, control, and scale AI workflows (prompts, APIs, usage) with governance built in. Promptetus

Routing of prompts, integration of APIs, monitoring of usage.

Why it stands out: Focused on the workflow/orchestration side (rather than just infra). Good if you need to coordinate multiple models, tools, and agents.

Potential trade-offs: May be less focused on low-level infrastructure (e.g., GPU scheduling) and more on the orchestration above that.

Use-case fit: If you are building multipl...

AI-native commerce and lock-screen platform

An AI-native commerce lock-screen platform turns passive device surfaces (phone lock screens, idle TV screens) into proactive, inspiration-first shopping experiences by combining generative visual models, personalization engines, visual search, and commerce orchestration. Companies such as Glance have productized this idea by embedding generative, shoppable visuals directly onto millions of devices via OEM partnerships.

AI-native commerce and lock-screen platforms use generative visual models, p...

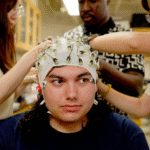

University of Illinois Urbana-Champaign – Monitoring stress from the surface of the body

Researchers at the University of Illinois Urbana-Champaign are exploring how wearables and surface sensors can provide insight into human stress — mental, emotional, physiological — by measuring signals from the body surface. The work is part of the Carle Illinois College of Medicine’s Department of Biomedical and Translational Sciences, with interdisciplinary collaboration spanning bioengineering, health & kinesiology, neuroscience, and beh...

DGIST South Korea – robot animals

Nature-inspired robots from DGIST students — what they built and what they can do

Students and researchers at the Daegu Gyeongbuk Institute of Science & Technology (DGIST) in South Korea have produced a string of small, clever biomimetic robots — from snake-like crawlers to a slow, motor-driven tortoise — as part of hands-on student projects and research lab work. These systems are usually built as research and teaching platforms to explore locomotion, perception, and real-world use case...

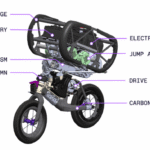

‘Ultra Mobile Vehicle’ – two-wheeled robot developed by the RAI Institute

The Ultra Mobile Vehicle (UMV) is a research robot from the Robotics and AI (RAI) Institute that looks at mobility in a new way: combine the efficiency and speed of wheels with the jumping and obstacle-clearing abilities usually reserved for legged robots. The result is a two-wheeled, self-balancing “robot bike” that drives, hops, flips, holds sustained wheelies, and performs dramatic precision balance moves like a track-stand — and it learns most of those behaviors through reinforcement learni...

‘littleBits’ – STEAM kits for kids

littleBits was founded in September 2011 in New York City by Ayah Bdeir, with the mission of making electronics and invention accessible to everyone — of democratizing hardware the same way software and 3D printing have become accessible.

The idea was to create modular, snap-together electronic building blocks (called “Bits”) that don’t require soldering, wiring, or deep electronics experience. The goal: let novices, designers, educators, and tinkerers invent and prototype without getting bo...

Open-weights AI models

Artificial intelligence has traditionally advanced through a mix of academic research (open publishing) and industrial deployment (closed systems). In recent years, large-scale AI models — especially large language models (LLMs), multimodal models, and foundation models — have become the backbone of generative AI.

A key distinction in this field is whether models are closed-weights (only accessible via APIs, no direct access to parameters) or open-weights (developers can download and run the...

‘Generative UI’ – adaptive interfaces dynamically generated by AI

Generative UI is an emerging design and engineering paradigm where user interfaces are not fixed layouts but are dynamically generated by AI models based on context, user intent, device form factor, and application state. Instead of manually crafting every screen and state, developers define higher-level rules, design tokens, and interaction patterns; the system then generates UI elements on demand.

This approach is being pioneered in research, by big tech companies (Microsoft, Google, OpenA...

‘Moflin’ – AI pet robot from Casio (developed with Vanguard Industries)

Moflin is a robotic companion pet with soft fur, minimal visible features, and “emotional” behavior. The idea is not to mimic a full, animate animal like a dog or cat, but to provide a comforting presence, reacting to human interaction in ways meant to feel tender and companionship-oriented. It is marketed toward people wanting some emotional support or companionship without the responsibilities of owning a live pet.

It learns over time: its behavior, responses, personality traits change...

“AI talent studio” – AI actress Tilly Norwood

Tilly Norwood is an AI-generated actress created by Eline Van der Velden and her company, Xicoia, sparking significant debate in Hollywood regarding the future of acting and AI's role in the industry.

Tilly Norwood is a synthetic, AI-generated performer introduced in 2025 by Xicoia (the AI/talent arm of Particle6). She is marketed like a rising screen star, with an Instagram account, publicity shots, a satirical sketch called AI Commissioner, and a Zurich Film Festival debut.

Particle6/Xicoia have ...

‘scripps.edu’

Scripps Research is pioneering a new model for nonprofit research institutes to address the significant health challenges facing humanity in the 21st century, from pandemic threats to the aging of the global population. Founded 100 years ago, Scripps Research is renowned in the life sciences and chemistry for scientific excellence and for training the scientific leaders of tomorrow.

Building on this solid foundation, we have established a unique model that seamlessly combines world-class fun...

‘Embrace2’ – wrist-worn medical device to monitor and alert for generalized tonic-clonic seizures (GTCS)

Embrace2 was a wrist-worn medical device developed by Empatica for people living with epilepsy. It is FDA-cleared (for ages 6 and up) to monitor and alert for generalized tonic-clonic seizures (GTCS), also known as convulsive seizures. It uses sensors on the wrist to detect certain physiological signals and motion patterns, triggering alerts to caregivers when a seizure is detected.

EpiMonitor is the next-generation epilepsy monitoring system Empatica is promoting, intended to replace/upgrad...
