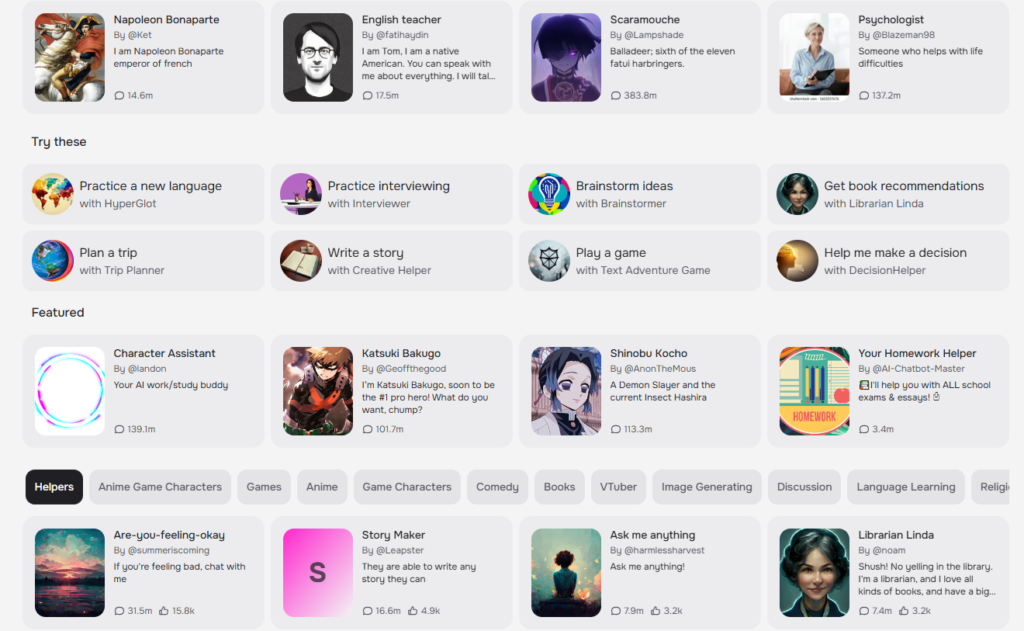

Character.ai is a platform where you can chat with AI characters based on famous or original personas. You can choose from a variety of categories, such as games, movies, books, or genres, and interact with the AI in different ways.

Users can create “characters”, craft their “personalities”, set specific parameters, and then publish them to the community for others to chat with. Many characters are based on fictional media sources or celebrities, while others are completely original. Some are made with a specific goal in mind, such as assisting with creative writing or running a text-based adventure game.

The platform is built on advanced deep learning and large language models.

Character “personalities” are defined through descriptions written from the character’s point of view, together with its greeting message. They are then refined through example conversations, in which the user gives the character’s messages star ratings and edits them to match the precise dialect and identity the user wants.

At Character.AI, we’re building toward AGI. In that future state, large language models (LLMs) will enhance daily life, providing business productivity and entertainment and helping people with everything from education to coaching, support, brainstorming, creative writing and more.

To make that a reality globally, it’s critical to achieve highly efficient “inference” – the process by which LLMs generate replies. As a full-stack AI company, Character.AI designs its model architecture, inference stack and product from the ground up. And we’re excited to share that we have made a number of breakthroughs in inference technology – breakthroughs that will make LLMs easier and more cost-effective to scale to a global audience.

Our inference innovations are described in a technical blog post released today and available here. In short: Character.AI serves around 20,000 queries per second – about 20% of the request volume served by Google Search, according to public sources. We manage to serve that volume at a cost of less than one cent per hour of conversation. We are able to do so because of our innovations around Transformer architecture and “attention KV cache” – the amount of data stored and retrieved during LLM text generation – and around improved techniques for inter-turn caching.

These innovations, taken together, make serving consumer LLMs much more efficient than with legacy technology. Since we launched Character.AI in 2022, we have reduced our serving costs by at least 33X. It now costs us 13.5 times less to serve our traffic than it would cost a competitor building on top of the most efficient leading commercial APIs.

These efficiencies clear a path to serving LLMs at a massive scale. Assume, for example, a future state where an AI company is serving 100 million daily active users, each of whom uses the service for an hour per day. At that scale, with serving costs of $0.01 per hour, the company would spend $365 million per year – i.e., $3.65 per daily active user per year – on serving costs. By contrast, a competitor using leading commercial APIs would spend at least $4.75 billion. Continued efficiency improvements and economies of scale will no doubt lower those numbers for both us and competitors, but these numbers illustrate the importance of our inference improvements from a business perspective.
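The arithmetic in this scenario can be checked with a short sketch. The inputs below are the figures quoted in the text. Applying the 13.5 times multiple from the previous paragraph to the competitor's cost is an assumption made here for illustration. It gives roughly $4.93 billion, which is consistent with the "at least $4.75 billion" lower bound stated above. The function and variable names are hypothetical.

```python
# Scenario inputs, taken from the figures quoted in the text.
DAILY_ACTIVE_USERS = 100_000_000
HOURS_PER_USER_PER_DAY = 1
COST_PER_HOUR_CENTS = 1          # $0.01 per hour, kept in integer cents
DAYS_PER_YEAR = 365

# Assumption: reuse the 13.5x cost multiple quoted for commercial APIs.
COMPETITOR_COST_MULTIPLE = 13.5


def annual_serving_cost_usd(users, hours_per_day, cents_per_hour, days=DAYS_PER_YEAR):
    """Return the yearly serving cost in US dollars."""
    total_cents = users * hours_per_day * cents_per_hour * days
    return total_cents / 100


annual_usd = annual_serving_cost_usd(
    DAILY_ACTIVE_USERS, HOURS_PER_USER_PER_DAY, COST_PER_HOUR_CENTS
)
per_user_usd = annual_usd / DAILY_ACTIVE_USERS
competitor_usd = annual_usd * COMPETITOR_COST_MULTIPLE

print(f"Annual serving cost: ${annual_usd:,.0f}")
print(f"Cost per daily active user per year: ${per_user_usd:.2f}")
print(f"Competitor at {COMPETITOR_COST_MULTIPLE}x: ${competitor_usd:,.0f}")
```

Working in integer cents avoids floating-point rounding in the main product. The dollar conversion happens only once, at the end.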

Put another way, these sorts of inference improvements unlock the ability to create a profitable B2C AI business at scale. We are excited to continue to iterate on them, in service of our mission to make our exciting technology available to consumers around the world to use throughout their day.