Georeferencing frames means assigning real-world coordinates (a spatial reference) to image or video frames so each pixel — and each camera pose — is expressed in a common coordinate system. In indoor contexts (where GNSS/GPS is unavailable or weak) georeferencing is done by fusing computer-vision (photogrammetry / Visual SLAM), sensor data (IMU, LiDAR), and control references (targets, surveyed control points or known anchors). This lets you convert raw video or 360° imagery into accurate 2D floorplans, 3D meshes/point clouds, and maps that an indoor navigation engine can use.

What follows is a step-by-step engineering pipeline for turning video frames into a georeferenced indoor map, plus practical instructions for using that map for indoor wayfinding.

1) Core methods & building blocks (what is under the hood)

- Feature-based photogrammetry: detect keypoints (SIFT / ORB / AKAZE), match them across frames, and compute relative poses. With many overlapping frames, bundle adjustment jointly solves for camera intrinsics/extrinsics and 3D point positions (a sparse point cloud). This is the classic SfM (Structure from Motion) / photogrammetry approach.

- Visual Simultaneous Localization And Mapping (Visual SLAM): a real-time pipeline that tracks camera pose while incrementally building a map. Modern Visual SLAM adds loop-closure detection, relocalization, and scale recovery (using stereo/RGB-D or IMU). Libraries such as ORB-SLAM3 and RTAB-Map are reference implementations that support monocular, stereo, and RGB-D setups and are used in robotics and mapping systems.

- Sensor fusion (VIO / LiDAR + vision): fusing IMU data (Visual-Inertial Odometry) makes metric scale observable and improves robustness during motion blur or in low-texture areas; LiDAR supplies metric depth and dense point clouds that reduce drift and improve alignment in large buildings.

- Control/anchor-based georeferencing: introduce known points (surveyed control points, floorplan tie-points, QR markers, BLE/Wi-Fi anchors, or surveyed LiDAR control) and compute a 7-DOF similarity transform (1 scale, 3 rotation, and 3 translation parameters) or a full affine transformation to align the locally built map to the building coordinate frame or GIS reference. In essence, this is map-to-world registration.

2) End-to-end technical pipeline — from video to georeferenced map

This is a practical, engineer-oriented pipeline. For each step I add common tools/algorithm choices.

Step 0 — Decide sensor & data capture method

- Choices: smartphone (RGB + IMU), 360° camera, RGB-D (Azure Kinect / RealSense), stereo camera, or LiDAR/multi-cam mobile mapping (NavVis, Matterport Pro).

- Tradeoffs: a smartphone is cheap but noisy; RGB-D gives metric depth and easier scale recovery; LiDAR/mobile mapping gives survey-grade point clouds for high-accuracy georeferencing.

Step 1 — Data acquisition & metadata capture

- Walk planned trajectories ensuring overlap and loop closures; capture IMU at device rate. For video: 30–60% frame overlap is a practical target (more overlap → better matches).

- If available, capture occasional GNSS fixes near building entrances to provide coarse geo-tags for exterior matching.

- Place a small set of surveyed control points (optical targets or AR markers) at known coordinates if you require metric geo-registration indoors.

Step 2 — Frame extraction & preprocessing

- Extract frames from video at 1–5 fps for mapping (denser for high-speed motion).

- Undistort frames with camera calibration (intrinsics). Use calibration tools (OpenCV, Kalibr) to compute focal length, principal point and lens distortion.

- Optionally run image enhancement (denoise, exposure normalization) for low-light indoor footage.
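The frame-extraction step can be sketched as a small index calculation: given the source frame rate and a target mapping rate (1–5 fps above), decide which frames to keep. This is a minimal sketch; the function name `frame_indices` and its parameters are illustrative assumptions, and actual decoding and undistortion would be done with a video library such as OpenCV.

```python
def frame_indices(total_frames: int, video_fps: float, target_fps: float) -> list[int]:
    """Return the indices of frames to keep so the output rate is about target_fps.

    Hypothetical helper: it only selects indices, it does not decode video.
    """
    if total_frames < 0 or video_fps <= 0 or target_fps <= 0:
        raise ValueError("frame count must be >= 0 and rates must be > 0")
    # Never sample faster than the source video provides frames.
    step = max(video_fps / target_fps, 1.0)
    indices = []
    k = 0
    # Multiply instead of accumulating to avoid floating-point drift.
    while int(k * step) < total_frames:
        indices.append(int(k * step))
        k += 1
    return indices


if __name__ == "__main__":
    # A 3-second clip at 30 fps, sampled at 5 fps for mapping.
    print(frame_indices(90, 30.0, 5.0))
```

For fast camera motion, raising `target_fps` keeps more frames and therefore more overlap between consecutive keyframes.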

Step 3 — Feature detection & matching

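- Use ORB / AKAZE for speed, or SIFT / SURF for more robust matching. Check licensing: the SIFT patent expired in 2020 and SIFT now ships in mainline OpenCV, while SURF remains in OpenCV's non-free contrib module.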

- Perform pairwise matching with ratio tests and geometric outlier rejection (RANSAC Essential/Fundamental matrix) to compute relative pose hypotheses.
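To make the ratio test concrete, here is a minimal pure-Python sketch of brute-force matching for binary descriptors (the kind ORB and AKAZE produce) using Hamming distance. Descriptors are modelled as plain integers, and the names `hamming` and `ratio_test_matches` are illustrative assumptions. Geometric verification with RANSAC is a separate step that this sketch does not cover.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def ratio_test_matches(query, train, ratio=0.75):
    """Return (query_index, train_index) pairs that pass the ratio test.

    A match is kept only if the best distance is clearly smaller than the
    second-best distance, which filters out ambiguous matches.
    """
    matches = []
    if len(train) < 2:
        return matches  # the ratio test needs two candidates per query
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        (best_d, best_i), (second_d, _) = ranked[0], ranked[1]
        if best_d < ratio * second_d:
            matches.append((qi, best_i))
    return matches


if __name__ == "__main__":
    train = [0b11110000, 0b00001111, 0b10101010]
    query = [0b11110001, 0b11001100]
    # The first query is close to train[0]; the second is equally far from all.
    print(ratio_test_matches(query, train))
```

The second query descriptor is rejected because its best and second-best distances are equal, which is exactly the ambiguity that repeated indoor textures tend to produce.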

Step 4 — Pose graph & bundle adjustment (SfM stage)

- Build a pose graph where nodes = keyframes and edges = relative poses.

- Perform global bundle adjustment (sparse Levenberg–Marquardt) to optimize camera poses and 3D landmarks. Use toolkits like COLMAP, OpenMVG, or a SLAM backend (g2o / Ceres). This yields a consistent sparse 3D map and camera trajectory.
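A toy 2D example may help show what the pose graph encodes. Each edge below is a relative pose (dx, dy, dtheta) in the frame of the previous keyframe; chaining the edges around a closed loop should return to the start, and any leftover gap is the residual that a backend such as g2o or Ceres would distribute across the graph. This is a sketch of the bookkeeping only, not of the optimization, and all function names are assumptions.

```python
import math


def wrap(angle: float) -> float:
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(pose, delta):
    """Apply a relative motion (expressed in the pose's own frame) to a 2D pose."""
    x, y, th = pose
    dx, dy, dth = delta
    return (
        x + math.cos(th) * dx - math.sin(th) * dy,
        y + math.sin(th) * dx + math.cos(th) * dy,
        wrap(th + dth),
    )


def chain(edges, start=(0.0, 0.0, 0.0)):
    """Integrate relative-pose edges into absolute keyframe poses (graph nodes)."""
    poses = [start]
    for edge in edges:
        poses.append(compose(poses[-1], edge))
    return poses


def loop_closure_residual(edges):
    """Position and heading gap after traversing edges that should form a loop."""
    x, y, th = chain(edges)[-1]
    return math.hypot(x, y), abs(th)


if __name__ == "__main__":
    ideal = [(1.0, 0.0, math.pi / 2)] * 4          # perfect square walk
    drifting = [(1.0, 0.0, math.pi / 2 + 0.02)] * 4  # small heading bias per edge
    print(loop_closure_residual(ideal))
    print(loop_closure_residual(drifting))
```

A small per-edge heading bias already leaves a visible gap after one loop, which is why loop closures matter so much in long corridors.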

Step 5 — Sensor fusion (optional but recommended)

- Fuse IMU data (VIO) to recover metric scale and reduce drift; if you have depth (RGB-D or LiDAR), fuse it for dense reconstruction (TSDF fusion, optionally followed by Poisson surface reconstruction for meshing). Libraries: ORB-SLAM3 (VIO), RTAB-Map (RGB-D/LiDAR integration), OpenVSLAM.
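As a simplified illustration of scale recovery: a monocular map is only known up to an unknown scale factor, so one way to picture the fix is to fit a single scale that best maps unitless visual segment lengths onto metric lengths from another source (integrated IMU motion, depth, or taped distances). Real VIO systems estimate scale jointly with gravity and sensor biases; the closed-form fit below is only a sketch, and `estimate_scale` is a hypothetical name.

```python
def estimate_scale(visual_lengths, metric_lengths):
    """Least-squares scale s minimizing sum((s * v - m)^2) over paired lengths.

    visual_lengths: segment lengths in the unitless SLAM/SfM frame.
    metric_lengths: the same segments measured in meters.
    """
    if len(visual_lengths) != len(metric_lengths) or not visual_lengths:
        raise ValueError("need equally sized, non-empty length lists")
    denom = sum(v * v for v in visual_lengths)
    if denom == 0:
        raise ValueError("visual lengths are all zero; scale is undefined")
    return sum(v * m for v, m in zip(visual_lengths, metric_lengths)) / denom


if __name__ == "__main__":
    # Three trajectory segments: SLAM units vs. meters.
    print(estimate_scale([1.0, 2.0, 4.0], [0.5, 1.0, 2.0]))
```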

Step 6 — Dense reconstruction & meshing (if needed)

- From aligned camera poses + depth, create dense point cloud (MVS / depth fusion). Tools: OpenMVS, COLMAP dense, or LiDAR-based meshing for higher fidelity.

Step 7 — Georeferencing / registration to building coordinates

- Use control points (surveyed coordinates) or tie to an existing CAD/BIM floorplan: compute the best-fit similarity transform (7-parameter Helmert) or run ICP (for point clouds) to align the SLAM/SfM map with the building coordinate system. Store the transform as metadata together with the target frame (ECEF or a local building grid). ArcGIS and other GIS tools provide standard georeferencing utilities for rasters and point clouds.
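The registration step can be illustrated with a reduced 2D case, which is what aligning a map to a floorplan often amounts to. The sketch below fits a 4-parameter similarity (scale, rotation, 2D translation) in closed form by treating points as complex numbers; the full 3D 7-parameter case is usually solved with an SVD-based method (Horn/Umeyama) and is not shown here. Function names are assumptions, and reflections are not handled.

```python
import cmath


def fit_similarity_2d(local_pts, world_pts):
    """Least-squares fit of world = a * local + b with complex a and b.

    abs(a) is the scale, cmath.phase(a) the rotation, b the translation.
    Needs at least two distinct control points.
    """
    n = len(local_pts)
    if n < 2 or n != len(world_pts):
        raise ValueError("need >= 2 paired control points")
    p = [complex(x, y) for x, y in local_pts]
    q = [complex(x, y) for x, y in world_pts]
    p_mean = sum(p) / n
    q_mean = sum(q) / n
    denom = sum(abs(pi - p_mean) ** 2 for pi in p)
    if denom == 0:
        raise ValueError("control points are coincident")
    a = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q)) / denom
    b = q_mean - a * p_mean
    return a, b


def apply_similarity_2d(a, b, point):
    """Map a local (x, y) point into the world/building frame."""
    z = a * complex(*point) + b
    return (z.real, z.imag)


if __name__ == "__main__":
    local = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    world = [(10.0, 5.0), (10.0, 7.0), (8.0, 5.0)]  # scale 2, rotated 90 degrees
    a, b = fit_similarity_2d(local, world)
    print(abs(a), cmath.phase(a), b)
    print(apply_similarity_2d(a, b, (1.0, 1.0)))
```

With noisy control points the fit no longer reproduces them exactly, and the residuals at each point give a first estimate of registration quality.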

Step 8 — Export & packaging

- Export floorplans (2D schematics), geo-referenced point clouds (.las/.e57), textured meshes (.obj/.ply), and camera trajectory (poses in JSON). Include the transform/CRS metadata so other systems can locate the map in the same local coordinate frame.
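One possible shape for the trajectory export is sketched below: camera poses bundled with the local-to-world transform and a CRS label, so a downstream system can place everything in the same frame. Every key name, the `build_map_export` helper, and the example CRS label are hypothetical; there is no standard schema implied here.

```python
import json


def build_map_export(poses, scale, rotation_deg, translation_m, crs_name):
    """Bundle camera poses with the local-to-world transform and a CRS label.

    poses: iterable of (timestamp_s, (x, y, z), (qx, qy, qz, qw)) in the local frame.
    All key names are illustrative; adapt them to your own schema.
    """
    return {
        "crs": crs_name,
        "local_to_world": {
            "scale": scale,
            "rotation_deg_about_z": rotation_deg,
            "translation_m": list(translation_m),
        },
        "poses": [
            {"t": t, "position": list(p), "quaternion_xyzw": list(q)}
            for t, p, q in poses
        ],
    }


if __name__ == "__main__":
    poses = [
        (0.0, (0.0, 0.0, 1.5), (0.0, 0.0, 0.0, 1.0)),
        (0.5, (0.4, 0.0, 1.5), (0.0, 0.0, 0.0, 1.0)),
    ]
    export = build_map_export(poses, 1.0, 90.0, (10.0, 5.0, 0.0), "local-building-grid")
    print(json.dumps(export, indent=2))
```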

3) Building an indoor wayfinding system from the georeferenced map

Once you have a georeferenced map and camera poses, follow this design to create wayfinding:

A. Map abstraction & graph generation

- Floorplan extraction — convert dense reconstruction into 2D floor polygons (walls, doors, obstructions). This can be automated by slicing point clouds by height and applying morphological processing + vectorization.

- Navigation graph — build a walkable graph (nodes = POIs, intersections; edges = corridors/stairs) and annotate with edge weights (distance, travel time, accessibility). Include multi-floor connectors (elevators, stairs) with floor transitions.

- Semantic enrichment — tag nodes with metadata: room numbers, POIs, amenities, safety exits, and AR anchor IDs.
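The height-slicing idea from the floorplan extraction bullet can be sketched in a few lines: keep only points within a height band (so floor and ceiling are excluded), then rasterize them into grid cells. Cells with enough hits approximate walls and obstructions. The function name, parameters, and thresholds below are assumptions; morphological cleanup and vectorization would follow in a real pipeline.

```python
import math


def slice_to_grid(points, z_min, z_max, cell_size, min_hits=1):
    """Return the set of occupied (col, row) cells for a horizontal slice.

    points: iterable of (x, y, z) in meters, already in the building frame.
    z_min, z_max: height band to keep, e.g. roughly waist to head height.
    min_hits: minimum number of points per cell to count it as occupied.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    counts = {}
    for x, y, z in points:
        if z_min <= z <= z_max:
            cell = (math.floor(x / cell_size), math.floor(y / cell_size))
            counts[cell] = counts.get(cell, 0) + 1
    return {cell for cell, n in counts.items() if n >= min_hits}


if __name__ == "__main__":
    cloud = [
        (0.05, 0.05, 1.0),
        (0.07, 0.02, 1.2),
        (1.00, 1.00, 0.1),  # floor point, outside the height band
        (0.55, 0.05, 1.1),
    ]
    print(slice_to_grid(cloud, 0.8, 1.5, 0.5))
    print(slice_to_grid(cloud, 0.8, 1.5, 0.5, min_hits=2))
```

Raising `min_hits` is a cheap way to suppress isolated noise points before vectorizing walls.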

B. Real-time localization for the user

Implement one or a hybrid of the following localization methods (ideally multi-modal fusion):

- Visual relocalization: compare live camera frames to the georeferenced image database (place recognition / feature matching / image retrieval) and compute the camera pose via PnP — robust for AR guidance.

- Visual-Inertial Odometry (VIO): track the device’s relative motion between relocalizations.

- Beacon/Radio positioning: BLE/Wi-Fi RTT/UWB provide coarse/fine priors and can be fused with VIO for bootstrapping & outage handling.

- LiDAR or depth-based localization: for robots or specialized devices, match live LiDAR scans to the global point cloud.

- Combine via an EKF / factor graph to produce the best estimate of the user pose.

(Visual relocalization is especially useful in AR smartphone apps because each matched image directly anchors AR overlays to a known camera pose.)
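To show the fusion idea in its simplest form, the sketch below runs a scalar Kalman filter on one coordinate axis: VIO supplies relative displacement (prediction), and an absolute fix from relocalization or a beacon corrects it (update). This is a linear, one-dimensional simplification of the EKF / factor-graph fusion mentioned above, and the function names and noise values are assumptions.

```python
def kf_predict(state, var, delta, process_var):
    """Propagate the position by a VIO displacement; uncertainty grows."""
    return state + delta, var + process_var


def kf_update(state, var, measurement, measurement_var):
    """Blend in an absolute position fix; uncertainty shrinks."""
    gain = var / (var + measurement_var)
    new_state = state + gain * (measurement - state)
    new_var = (1.0 - gain) * var
    return new_state, new_var


if __name__ == "__main__":
    x, p = 0.0, 1.0                       # initial position (m) and variance (m^2)
    x, p = kf_predict(x, p, 2.0, 0.5)     # VIO reports 2 m of forward motion
    x, p = kf_update(x, p, 3.0, 1.5)      # beacon/relocalization fix at 3 m
    print(x, p)
```

Running one such filter per axis is a common starting point; a full system would track orientation and velocity as well.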

C. Path planning & UX

- Run A* or Dijkstra on the navigation graph. For accessibility, apply cost penalties for stairs, narrow corridors, etc.

- For AR guidance: project the planned path into camera coordinates (using the live pose) and render wayfinding cues as arrows, breadcrumbs, or billboarded POI labels. For purely 2D apps, render a “blue dot” with a mini-map and turn-by-turn instructions.

- Implement continuous relocalization checkpoints every N meters or when visual confidence drops.
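Route planning with accessibility penalties can be sketched with Dijkstra's algorithm over a small navigation graph. Each edge carries a length and a kind, and a per-kind multiplier raises the cost of stairs or excludes them entirely. The graph layout, node names, and the `shortest_path` signature are illustrative assumptions.

```python
import heapq
import math


def shortest_path(graph, start, goal, penalties=None):
    """Dijkstra over {node: [(neighbor, length_m, kind), ...]}.

    penalties maps an edge kind to a cost multiplier; use math.inf to forbid it.
    Returns (path, cost), or (None, math.inf) if the goal is unreachable.
    """
    penalties = penalties or {}
    best = {start: 0.0}
    prev = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            break
        if cost > best.get(node, math.inf):
            continue  # stale queue entry
        for neighbor, length, kind in graph.get(node, []):
            new_cost = cost + length * penalties.get(kind, 1.0)
            if new_cost < best.get(neighbor, math.inf):
                best[neighbor] = new_cost
                prev[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if goal not in best:
        return None, math.inf
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return path, best[goal]


if __name__ == "__main__":
    graph = {
        "lobby": [("stairs_f1", 5.0, "corridor"), ("elevator_f1", 20.0, "corridor")],
        "stairs_f1": [("room_201", 5.0, "stairs")],
        "elevator_f1": [("room_201", 10.0, "elevator")],
    }
    print(shortest_path(graph, "lobby", "room_201"))
    print(shortest_path(graph, "lobby", "room_201", {"stairs": math.inf}))
```

A* would use the same structure with a straight-line distance heuristic added to the queue priority, which mainly pays off on large multi-floor graphs.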

D. Offline & drift recovery strategies

- Persist recent camera poses & visual descriptors locally for quick relocalization when network is absent.

- Periodically re-anchor to known control points (floor markers / QR tags / BLE beacons) to remove accumulated drift.

- Use semantic cues (recognizable signage, room labels) for human-in-the-loop corrections when automatic relocalization fails.

4) Accuracy considerations & best practices

- Control points matter: a few well-surveyed indoor control points reduce global alignment error dramatically. If you need sub-meter accuracy, include at least 3–5 surveyed anchors per floor.

- Ensure coverage & loop closures: plan capture paths that loop back and revisit areas to strengthen loop closure detection in SLAM and reduce drift.

- Use RGB-D or LiDAR where possible: depth sensors give metric scale and dense geometry — vital for robust map-to-world registration in feature-poor corridors.

- Lighting & texture: low-light or repeated textures (long white corridors) break feature matching. Supplement with active markers (AprilTags/ArUco) or use depth sensors.

- Privacy & data governance: indoor imagery may capture people; implement blur/anonymization and follow organizational privacy rules.

5) Key platforms, products & open-source stacks

Below I group products so you can choose by use-case: survey-grade capture, developer SDKs for indoor positioning/wayfinding, and open-source SLAM stacks.

Survey / reality-capture (high-accuracy, often LiDAR)

- NavVis (M6, VLX, IndoorViewer) — mobile mapping hardware + digital twin platform; captures survey-grade point clouds and panoramic imagery and supports georegistration / export for downstream use. Great when you need enterprise-scale, accurate digital twins.

- Matterport (Pro3, Lidar-enabled capture + cloud processing) — simplified capture-to-digital-twin workflow (smartphone capture to Pro cameras), strong for real-estate, facilities and quick 3D models plus floorplans.

Indoor positioning & wayfinding SDKs / platforms

- IndoorAtlas — sensor-fusion indoor positioning SDK (geomagnetic + sensors + calibration) plus mapping and wayfinding APIs; used in airports, hospitals for smartphone wayfinding.

- Pointr — commercial indoor location & wayfinding platform focused on accuracy and enterprise integration (retail, airports, hospitals).

- Mapwize / Mappedin — indoor mapping & wayfinding platforms that integrate maps with location backends and provide route UX for kiosks and mobile apps.

AR / vision SDKs (for visual relocalization & anchors)

- Vuforia Engine — image/object-based AR anchors, integration guides for HoloLens/Unity; useful for marker-based georeferencing and industrial AR overlays.

- ARKit / ARCore — platform-native AR with relocalization, cloud anchors, and Visual-Inertial tracking; both can be components of a visual relocalization + wayfinding solution (ARKit Location Anchors / ARCore Cloud Anchors patterns). (Platform docs are the canonical source for implementation patterns.)

Open-source SLAM & mapping stacks (developer & research)

- ORB-SLAM3 — state-of-the-art Visual/VIO SLAM supporting monocular/stereo/RGB-D and multi-map setups; excellent for research/prototyping.

- RTAB-Map — open-source RGB-D / LIDAR friendly SLAM with long-term mapping and ROS integration suitable for robots and mobile mapping.

- OpenVSLAM / OpenMVG / COLMAP — tools for offline SfM / multiview reconstruction and relocalization pipelines. (COLMAP is great for batch SfM; OpenVSLAM for mobile-friendly relocalization.)

6) Example implementation scenarios (quick recipes)

Prototype (phone-only, low-cost)

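- Use an Android phone (ARCore) or iPhone (ARKit) to record steady video + IMU. Where the device allows, turn off electronic image stabilization, since it warps frames away from the calibrated camera model.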

- Extract frames, run OpenVSLAM / ORB-SLAM3 to get camera poses.

- Manually place 3–4 QR markers at known coordinates for map-to-world alignment.

- Use visual relocalization on-device for AR wayfinding overlays. (Good for pilot apps.)

Enterprise (survey-grade, robust wayfinding)

- Scan with NavVis VLX or Matterport Pro3 to get georegistered point clouds & panoramic imagery.

- Import the point cloud into a GIS/BIM tool; align to building coordinate system with survey control points.

- Use IndoorAtlas or Pointr for live positioning via smartphones, and Mapwize for route UX and kiosk integration.

7) Practical checklist & pitfalls to avoid

- Checklist before capture: camera calibrated, IMU logging enabled, control points marked, capture path planned with loops.

- Pitfalls: relying purely on monocular SLAM in long featureless corridors (drift), ignoring multi-floor transitions, not planning for privacy/anonymization, and skipping post-capture registration to a known coordinate frame (causes inconsistent maps).

- Measure success: validate by surveying 5–10 check points (ideally points that were not used to fit the transform) and computing the RMSE between the transformed map coordinates and the surveyed coordinates. For indoor wayfinding, aim for sub-2 m accuracy for casual navigation and sub-0.5 m for AR-guided tasks.
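The check-point validation can be sketched as a short RMSE calculation over paired 3D coordinates. The function name `checkpoint_rmse` and the sample coordinates are assumptions for illustration only.

```python
import math


def checkpoint_rmse(mapped, surveyed):
    """Root-mean-square 3D error between mapped and surveyed check points (meters)."""
    if len(mapped) != len(surveyed) or not mapped:
        raise ValueError("need equally sized, non-empty point lists")
    squared = [
        (mx - sx) ** 2 + (my - sy) ** 2 + (mz - sz) ** 2
        for (mx, my, mz), (sx, sy, sz) in zip(mapped, surveyed)
    ]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    mapped = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
    surveyed = [(0.3, 0.4, 0.0), (1.0, 1.0, 0.0)]
    error = checkpoint_rmse(mapped, surveyed)
    print(f"RMSE: {error:.3f} m, meets 0.5 m target: {error < 0.5}")
```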

8) Where to learn & next steps (resources)

- Read Visual SLAM literature surveys for algorithmic tradeoffs (e.g., visual localization under GNSS-denied conditions).

- Try ORB-SLAM3 on sample video to understand pose graphs and loop closures.

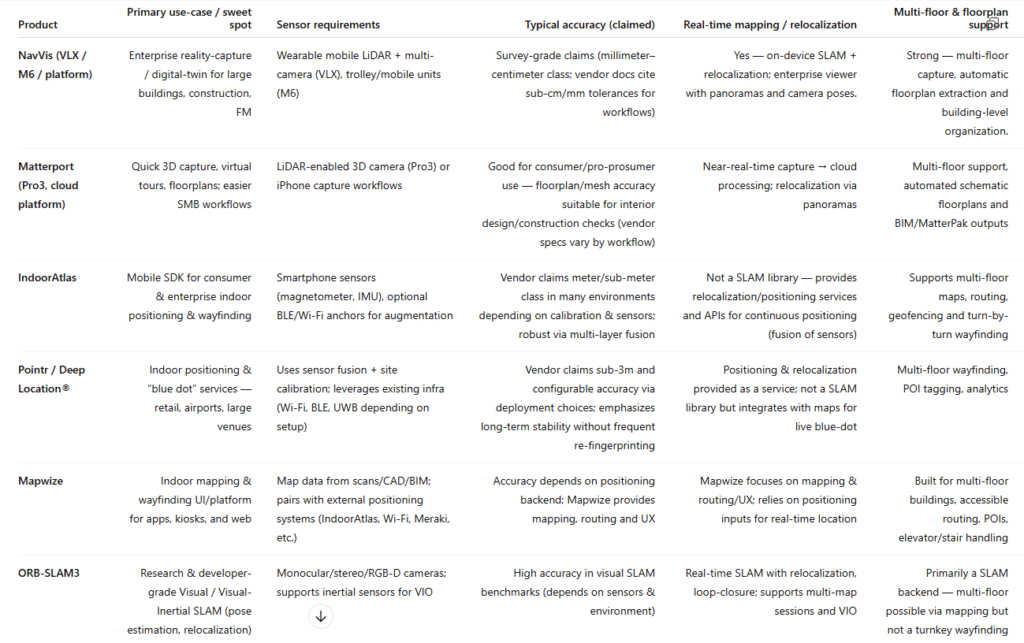

Product Comparison — NavVis, Matterport, IndoorAtlas, Pointr, Mapwize, ORB-SLAM3, and RTAB-Map
