Building Advanced AI Workflows with LangGraph

Introduction to LangGraph

LangGraph is a framework for building complex, multi-step AI workflows. Built on top of LangChain, it provides a graph-based execution model that supports dynamic, modular workflows with conditional branching, parallel processing, and loops.

This article will cover:

- Core concepts of LangGraph

- Building a simple AI workflow

- Implementing conditional branching

- Running parallel processing in AI tasks

1. Core Concepts of LangGraph

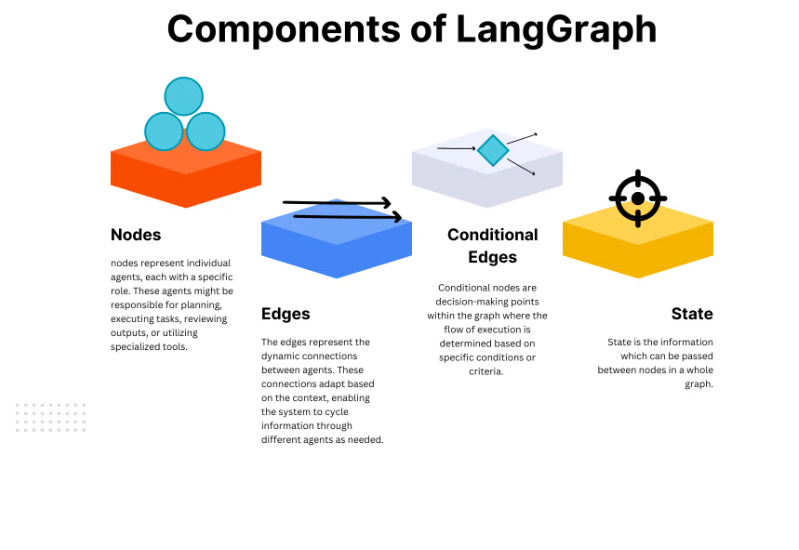

LangGraph introduces directed graphs as a way to manage the execution flow of AI applications. The key components are:

- Nodes: Represent individual steps in the workflow.

- Edges: Define the flow of execution.

- State: Stores and updates information throughout the process.
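To make the three components concrete, here is a toy sketch in plain Python. It is not LangGraph's implementation or API; the names `NODES`, `EDGES`, and `run` are made up for illustration. Nodes are functions that return state updates, edges say which node runs next, and the state is a dictionary that each node updates.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]


# Nodes: each step takes the current state and returns the keys it wants to update
def greet(state: State) -> State:
    return {"text": f"Hello, {state['name']}"}


def shout(state: State) -> State:
    return {"text": str(state["text"]).upper() + "!"}


NODES: Dict[str, Callable[[State], State]] = {"greet": greet, "shout": shout}

# Edges: which node follows which (None marks the end of the flow)
EDGES: Dict[str, Optional[str]] = {"greet": "shout", "shout": None}


def run(entry: str, state: State) -> State:
    """Walk the graph from the entry node, merging each node's update into the state."""
    current: Optional[str] = entry
    while current is not None:
        state = {**state, **NODES[current](state)}
        current = EDGES[current]
    return state


result = run("greet", {"name": "LangGraph"})
print(result["text"])
```

LangGraph's `StateGraph` plays the role of `NODES`, `EDGES`, and `run` together, and adds features this toy lacks, such as conditional edges and parallel branches.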

Installation

Before using LangGraph, install it along with LangChain:

```bash
pip install langgraph langchain langchain-openai openai
```

2. Building a Simple AI Workflow

Let’s start by creating a basic workflow that processes a user query through an LLM.

Step 1: Define the LLM Model

We’ll use OpenAI’s API for generating responses.

```python
from typing import List, TypedDict

# ChatOpenAI lives in the langchain-openai package (pip install langchain-openai)
from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph


# Define the state structure
class ChatState(TypedDict):
    messages: List[str]


# Initialize the model (reads the OPENAI_API_KEY environment variable)
llm = ChatOpenAI(model="gpt-4", temperature=0.7)


# Define the function that interacts with the LLM
def llm_step(state: ChatState) -> ChatState:
    # Send the last message to the model; .content holds the reply text
    response = llm.invoke(state["messages"][-1]).content
    return {"messages": state["messages"] + [response]}
```

Step 2: Create the Graph

We define a graph with a single processing node.

```python
from langgraph.graph import END

# Create a graph
workflow = StateGraph(ChatState)
workflow.add_node("llm", llm_step)
workflow.set_entry_point("llm")
workflow.add_edge("llm", END)  # Finish after the LLM node

# Compile the graph
app = workflow.compile()

# Run the workflow
input_state = {"messages": ["What is LangGraph?"]}
output = app.invoke(input_state)
print(output["messages"])
```

This simple graph takes a message, processes it with GPT-4, and returns a response.
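A node is just a function from state to state, so its logic can be exercised without an API key by swapping in a stand-in for the model. The sketch below assumes the model exposes `invoke(prompt)` and returns an object with a `.content` attribute, as LangChain chat models do. `FakeLLM`, `FakeReply`, and `make_llm_step` are hypothetical names introduced for illustration, not part of LangChain or LangGraph.

```python
from dataclasses import dataclass
from typing import Callable, List, TypedDict


class ChatState(TypedDict):
    messages: List[str]


@dataclass
class FakeReply:
    content: str


class FakeLLM:
    """Hypothetical stand-in for a chat model: echoes the prompt back."""

    def invoke(self, prompt: str) -> FakeReply:
        return FakeReply(content=f"(stub reply to: {prompt})")


def make_llm_step(model) -> Callable[[ChatState], ChatState]:
    """Build a node function bound to whichever model object is passed in."""

    def llm_step(state: ChatState) -> ChatState:
        reply = model.invoke(state["messages"][-1]).content
        return {"messages": state["messages"] + [reply]}

    return llm_step


step = make_llm_step(FakeLLM())
new_state = step({"messages": ["What is LangGraph?"]})
print(new_state["messages"])
```

Passing the model in as an argument, rather than reading a module-level `llm`, is one way to keep the node easy to test; the same function could be registered with `add_node` once it is bound to a real model.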

3. Implementing Conditional Branching

In real-world applications, we may need to route the conversation differently based on the content. Let’s add a decision node.

Step 1: Define the Decision Function

We create a function to determine whether a question is about technology or general knowledge.

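```python
def classify_question(state: ChatState) -> str:
    # The router runs after the "llm" node, so the last message is the model's
    # reply; inspect the original user question (the first message) instead
    question = state["messages"][0].lower()
    # Plain substring check: note that "ai" also matches inside words like "rain"
    if "ai" in question or "langgraph" in question:
        return "tech_node"
    return "general_node"
```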
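A substring test such as `"ai" in text` also fires on words like "rain" or "said". One possible refinement, shown here as a sketch, matches whole words with a regular expression. The name `classify_text` and the `TECH_TERMS` pattern are assumptions for illustration; the function takes the text directly so it can be tried without building a graph.

```python
import re

# Hypothetical keyword list; the \b anchors restrict matches to whole words
TECH_TERMS = re.compile(r"\b(ai|langgraph)\b", re.IGNORECASE)


def classify_text(text: str) -> str:
    """Return the name of the node that should handle this text."""
    return "tech_node" if TECH_TERMS.search(text) else "general_node"


print(classify_text("How does LangGraph work?"))
print(classify_text("Will it rain in Spain tomorrow?"))
print(classify_text("Is AI replacing editors?"))
```

A router used in a graph would wrap this by pulling the relevant message out of the state and passing it to `classify_text`.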

Step 2: Modify the Graph

We update the graph to include branching logic.

```python
from langgraph.graph import END


# Define response functions
def tech_response(state: ChatState) -> ChatState:
    return {"messages": state["messages"] + ["This is a tech-related question."]}


def general_response(state: ChatState) -> ChatState:
    return {"messages": state["messages"] + ["This is a general question."]}


# Modify the workflow
workflow = StateGraph(ChatState)
workflow.add_node("llm", llm_step)
workflow.add_node("tech_node", tech_response)
workflow.add_node("general_node", general_response)

# Add conditional branching: map each value returned by classify_question to a node name
workflow.add_conditional_edges(
    "llm",
    classify_question,
    {"tech_node": "tech_node", "general_node": "general_node"},
)
workflow.add_edge("tech_node", END)
workflow.add_edge("general_node", END)
workflow.set_entry_point("llm")
app = workflow.compile()

# Test with a tech-related question
input_state = {"messages": ["How does LangGraph work?"]}
output = app.invoke(input_state)
print(output["messages"])
```

This setup dynamically directs the response based on the content of the user’s message.

4. Running Parallel Processing

LangGraph supports parallel execution, useful for tasks like summarizing text while extracting keywords simultaneously.

Step 1: Define Parallel Functions

```python
import operator
from typing import Annotated, List, TypedDict

from langgraph.graph import END, START, StateGraph


# Parallel branches write to the same key, so the state needs a reducer:
# operator.add appends each branch's update instead of overwriting it
class ParallelState(TypedDict):
    messages: Annotated[List[str], operator.add]


# Summarization function
def summarize_text(state: ParallelState) -> dict:
    text = state["messages"][-1]
    response = llm.invoke(f"Summarize this: {text}").content
    return {"messages": [f"Summary: {response}"]}  # Return only the new item


# Keyword extraction function
def extract_keywords(state: ParallelState) -> dict:
    text = state["messages"][-1]
    response = llm.invoke(f"Extract keywords from this: {text}").content
    return {"messages": [f"Keywords: {response}"]}  # Return only the new item
```

Step 2: Modify the Graph

```python
# Define the workflow
workflow = StateGraph(ParallelState)
workflow.add_node("summarize", summarize_text)
workflow.add_node("keywords", extract_keywords)

# Run both nodes in parallel: fan out from START, then finish
workflow.add_edge(START, "summarize")
workflow.add_edge(START, "keywords")
workflow.add_edge("summarize", END)
workflow.add_edge("keywords", END)
app = workflow.compile()

# Test parallel execution
input_state = {"messages": ["LangGraph enables graph-based AI workflows."]}
output = app.invoke(input_state)
print(output["messages"])
```

Here, the system runs summarization and keyword extraction in parallel and returns both results in the final state.
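When two branches update the same state key, their updates have to be merged rather than overwritten. The plain-Python sketch below illustrates that fan-out and merge idea with a thread pool and list concatenation as the merge rule. It shows the concept only, not how LangGraph schedules nodes; `fan_out` and the two stub branches are hypothetical and call no LLM.

```python
import operator
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import Callable, Dict, List

State = Dict[str, List[str]]


def summarize_stub(state: State) -> State:
    # Stand-in for an LLM summary: keep the first 20 characters
    text = state["messages"][-1]
    return {"messages": [f"Summary: {text[:20]}..."]}


def keywords_stub(state: State) -> State:
    # Stand-in for keyword extraction: keep words longer than 7 characters
    text = state["messages"][-1]
    words = [w for w in text.rstrip(".").split() if len(w) > 7]
    return {"messages": [f"Keywords: {', '.join(words)}"]}


def fan_out(state: State, branches: List[Callable[[State], State]]) -> State:
    """Run every branch on the same input state, then append their updates in branch order."""
    with ThreadPoolExecutor() as pool:
        updates = list(pool.map(lambda branch: branch(state), branches))
    # Merge rule: concatenate each branch's new items onto the existing list
    merged = reduce(operator.add, (u["messages"] for u in updates), state["messages"])
    return {"messages": merged}


result = fan_out(
    {"messages": ["LangGraph enables graph-based AI workflows."]},
    [summarize_stub, keywords_stub],
)
print(result["messages"])
```

Each branch returns only its new item, and the merge step decides how items are combined. Without such a rule, the second branch to finish would overwrite the first.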