NVIDIA officially launched its most advanced artificial intelligence computing platform yet — the Vera Rubin AI platform. Named in honor of Vera Florence Cooper Rubin, a pioneering American astronomer whose work reshaped our understanding of the cosmos, the Vera Rubin platform represents a major leap forward in AI supercomputing architecture. Whereas previous systems focused primarily on GPUs alone, Vera Rubin treats the entire data center rack as a cohesive, unified AI machine, designed to accelerate training, inference, and reasoning workloads for the most demanding AI models in existence.

A Unified AI Supercomputer: Architecture and Purpose

At its core, the Vera Rubin platform is not a single chip, but a rack-scale AI supercomputer — a highly integrated system combining multiple purpose-built processors and networking components into a single, modular architecture. This design reflects NVIDIA’s view that future AI demands cannot be met by isolated components, but by fully integrated compute engines that blend CPUs, GPUs, high-speed interconnects, secure infrastructure, and next-generation networking.

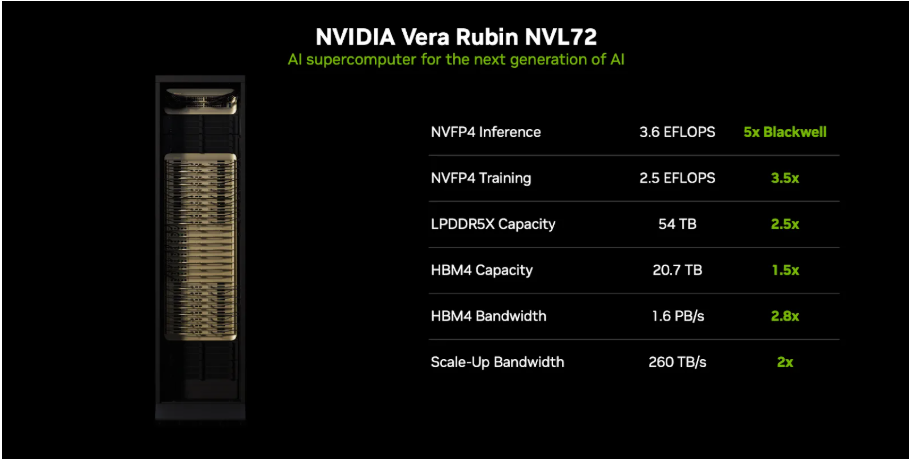

The flagship implementation of this technology is the NVIDIA Vera Rubin NVL72 system. A complete NVL72 rack aggregates:

- 72 NVIDIA Rubin GPUs — next-generation GPU architecture optimized for large-context AI workloads.

- 36 NVIDIA Vera CPUs — energy-efficient Arm-based processors designed specifically for agentic AI and reasoning tasks.

- NVIDIA NVLink 6 switch — ultra-high-bandwidth fabric that connects all processors with minimal latency.

- NVIDIA ConnectX-9 SuperNICs — advanced networking processors for high-throughput I/O.

- NVIDIA BlueField-4 DPUs — programmable data processing units that offload networking, security, and storage tasks.

- Spectrum-X Ethernet / InfiniBand networking — next-generation Ethernet and InfiniBand switching fabrics to scale between racks.

This tightly code-signed hardware ecosystem is engineered to behave as one massive compute engine instead of disparate parts — enabling unprecedented levels of efficiency, performance, and scalability.

Performance Breakthroughs and Efficiency Gains

One of the most compelling aspects of the Vera Rubin platform is its performance leap over prior NVIDIA architectures, notably the Blackwell generation that dominated the AI accelerator market in the early 2020s:

- The Rubin GPU architecture is engineered with a third-generation Transformer Engine, which delivers up to 50 petaflops of NVFP4 compute for AI inference — enabling advanced reasoning tasks at lower precision with high throughput.

- Compared with Blackwell systems, training large “mixture of experts” (MoE) models on Vera Rubin can require up to 4× fewer GPUs, drastically reducing infrastructure footprint and cost.

- On the inference side — where models respond to real-world queries — Rubin’s architecture can cut token processing costs by as much as 10×, enabling much more efficient deployment of large AI services.

- The platform benefits from advanced memory structures and AI-native storage (Inference Context Memory Storage) to handle long-context workloads (millions of tokens) with predictable performance at scale.

Security, Scalability, and Manageability

Beyond performance, the platform incorporates third-generation Confidential Computing and advanced operational resilience, which help protect proprietary models and tenant workloads across multi-user data centers.

BlueField-4’s Advanced Secure Trusted Resource Architecture (Astra) extends security and policy enforcement into the networking fabric, letting administrators provision and isolate infrastructure without sacrificing performance. This architecture makes scale out safe for multi-tenant cloud environments where different customers or services share hardware resources.

The platform’s modular, cable-free rack design also accelerates assembly and servicing — allowing a full system to be assembled far faster and maintained with reduced downtimes compared with traditional server racks.

Variants Designed for Versatility

While the NVL72 rack system is the flagship rack-scale implementation, NVIDIA’s Rubin family includes multiple variants to suit different workloads:

- DGX Vera Rubin NVL72 — NVIDIA’s turnkey enterprise-ready supercomputing solution built on the NVL72 architecture for immediate deployment.

- DGX Rubin NVL8 — A liquid-cooled system incorporating eight Rubin GPUs, designed for flexible deployment in data centers and research environments.

- Vera Rubin NVL144 CPX — A massive context-optimized rack that integrates Rubin CPX GPUs (purpose-built for million-token inference and generative video workloads) along with standard Rubin GPUs and Vera CPUs to deliver 8 exaflops of AI compute and up to 100 TB of high-speed memory in a single rack.

This expanding portfolio enables organizations of all sizes — from cloud providers to research labs — to tailor their infrastructure around the complexity and scale of their AI workloads.

The Vera Rubin platform is designed to be ecosystem-friendly. Major cloud providers — including AWS, Google Cloud, Microsoft Azure, and OCI — plan to deploy Rubin-based instances, making advanced AI compute accessible to developers and enterprises globally. NVIDIA ecosystem partners like CoreWeave, Lambda, Nebius, and Nscale are also integrating Rubin systems into their cloud offerings.

AI research labs and enterprises such as Anthropic, Meta, OpenAI, Cohere, Perplexity, Runway, xAI, and numerous others intend to leverage Rubin infrastructure for training and serving large multimodal and reasoning models. This extensive adoption signals broad industry confidence in Vera Rubin as the foundation for the next era of AI innovation.

NVIDIA Vera Rubin platform represents a paradigm shift in AI infrastructure:

- Chip-to-rack design coherence removes the bottlenecks of traditional hardware silos.

- Ultra-efficient performance per watt and per token drives down costs for large models.

- Security and manageability are built in at scale for multi-tenant environments.

- Modular systems let enterprises deploy exactly the size of supercomputer they need.

As AI models become larger, more capable, and more central to business and research, platforms like Vera Rubin provide the unified compute backbone necessary to support them — from training to real-time, long-context inference — while ensuring performance, efficiency, and secure operation.

Inside the NVIDIA Rubin Platform: Six New Chips, One AI Supercomputer | NVIDIA Technical Blog