Sanas is a Palo Alto-based startup that provides a real-time speech understanding platform powered by AI.

Sanas’s solution operates on a speech-to-speech neural network architecture. This framework processes incoming speech in real time, converting it to match a specified accent while retaining the speaker’s unique voice characteristics. The system is designed to work across a range of accents and backgrounds, facilitating clearer communication in diverse settings.

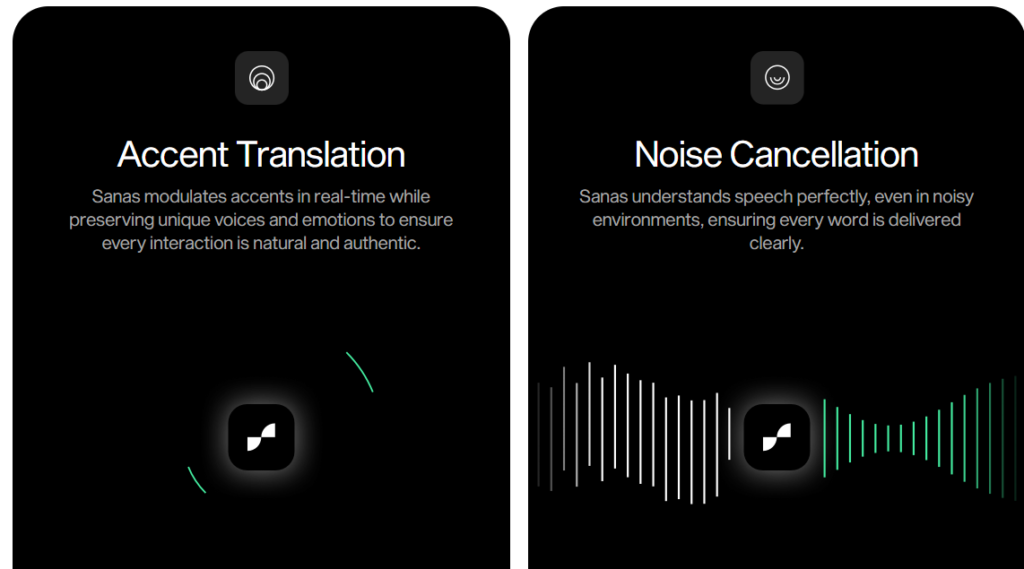

Sanas delivers crystal-clear communication with real-time speech-to-speech AI technology.

Speech Intelligibility: Utilizing advanced neural networks, Sanas generates speech that emphasizes clarity and articulation, ensuring that the output is easily understood by listeners.

Speech Naturalness: The technology captures subtle nuances of human speech, such as tone and emphasis, to deliver output that maintains the warmth and authenticity of the original speaker.

Phoneme-Based Neural Networks: Sanas employs advanced neural networks that utilize a phoneme-based approach. This allows the AI to capture subtle nuances in speech, ensuring that the output retains the original speaker’s warmth and natural delivery.

Primarily utilized in contact centers, Sanas’s technology aims to improve customer-agent interactions by reducing misunderstandings and communication barriers. This enhancement leads to increased customer satisfaction, higher first-call resolution rates, and reduced average handling times. By minimizing the need for extensive accent training, organizations can also achieve operational cost savings.

Low Latency Processing: The system operates with approximately 200 milliseconds of latency, enabling seamless and uninterrupted conversations.

Phoneme-Based Neural Networks (PBNNs) are AI models designed to process and manipulate speech at the phoneme level. A phoneme is the smallest unit of sound in a language that can distinguish one word from another (e.g., the sounds /p/ and /b/ in “pat” and “bat”).
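To make the idea of a distinguishing sound concrete, here is a minimal sketch that checks whether two words form a minimal pair, meaning their phoneme sequences differ in exactly one position. The function name `is_minimal_pair` and the hand-written transcriptions are assumptions for illustration and are not part of Sanas’s system.

```python
def is_minimal_pair(a, b):
    """Return True if two phoneme sequences differ in exactly one position."""
    if len(a) != len(b):
        return False
    return sum(x != y for x, y in zip(a, b)) == 1


# Hand-written phoneme transcriptions (illustrative).
pat = ["p", "æ", "t"]
bat = ["b", "æ", "t"]
pad = ["p", "æ", "d"]

print(is_minimal_pair(pat, bat))  # /p/ vs /b/ is the only difference
print(is_minimal_pair(bat, pad))  # two positions differ, so not a minimal pair
```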

How Phoneme-Based Neural Networks Work

Phoneme Recognition:

The AI breaks down spoken words into individual phonemes using Automatic Speech Recognition (ASR).

Feature Extraction:

The system extracts acoustic features like pitch, tone, and duration to understand how the phonemes are spoken.
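As a rough illustration of what extracting pitch and duration can look like, the sketch below estimates the fundamental frequency of a short audio frame with a plain autocorrelation search and derives duration from the sample count. This is a textbook technique chosen for simplicity, not a description of how Sanas extracts features; the function names, the 50 to 500 Hz search range, and the synthetic test tone are all assumptions.

```python
import math


def duration_ms(samples, sample_rate):
    """Duration of the frame in milliseconds."""
    return 1000.0 * len(samples) / sample_rate


def estimate_pitch_hz(samples, sample_rate, fmin=50.0, fmax=500.0):
    """Estimate pitch by finding the lag with the strongest autocorrelation."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]

    # Only search lags that correspond to plausible voice pitches (assumed range).
    lag_min = int(sample_rate / fmax)
    lag_max = min(int(sample_rate / fmin), n - 1)

    best_lag, best_score = lag_min, float("-inf")
    for lag in range(lag_min, lag_max + 1):
        score = sum(centered[i] * centered[i + lag] for i in range(n - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


# Synthetic 200 Hz tone, 50 ms long, standing in for a voiced phoneme.
sample_rate = 16000
tone = [math.sin(2 * math.pi * 200 * i / sample_rate) for i in range(800)]

print(duration_ms(tone, sample_rate))
print(estimate_pitch_hz(tone, sample_rate))
```

Real speech is noisier than a pure tone, so production systems typically rely on more robust pitch trackers or on learned representations.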

Neural Network Processing:

A deep learning model, often based on Recurrent Neural Networks (RNNs), Transformers, or Convolutional Neural Networks (CNNs), processes these phonemes and learns their patterns in different accents.

Phoneme Mapping & Transformation:

The AI maps phonemes from one accent to another while preserving the speaker’s unique vocal characteristics.
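The mapping step can be pictured with a toy example: swap the phoneme label while leaving the speaker-specific pitch and duration untouched. The sketch below uses a hypothetical, hand-written one-entry table; `PhonemeUnit`, `TOY_ACCENT_MAP`, and `map_accent` are illustrative names, and the numeric values are made up. A fixed lookup like this would be wrong in general, since the right substitution depends on the word and its context, which is one reason such mappings are learned by a neural network instead.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PhonemeUnit:
    symbol: str         # phoneme label, e.g. "æ"
    pitch_hz: float     # speaker-specific prosody (0.0 for unvoiced sounds)
    duration_ms: float  # how long the speaker held the sound


# Hypothetical table for illustration only: the vowel of "bath" in one accent
# mapped to the vowel used in another.
TOY_ACCENT_MAP = {"ɑː": "æ"}


def map_accent(units, table):
    """Replace phoneme labels via the table; keep pitch and duration as spoken."""
    return [replace(u, symbol=table.get(u.symbol, u.symbol)) for u in units]


# "bath" with made-up pitch and duration values.
bath = [
    PhonemeUnit("b", 120.0, 60.0),
    PhonemeUnit("ɑː", 125.0, 180.0),
    PhonemeUnit("θ", 0.0, 90.0),
]
converted = map_accent(bath, TOY_ACCENT_MAP)

print([u.symbol for u in converted])
print(converted[1].pitch_hz, converted[1].duration_ms)
```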

Speech Reconstruction:

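The modified phoneme sequence is synthesized back into speech, for example with a Text-to-Speech (TTS) acoustic model such as Tacotron, which predicts a spectrogram, paired with a neural vocoder such as WaveNet, which generates the audio waveform.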