Sensory Inc.: On-Device Voice, Sound, and Biometric AI at the Edge

Sensory Inc. is a Silicon Valley-based pioneer in embedded, on-device artificial intelligence for voice and sound applications. Founded in 1994, the company has spent more than 30 years developing speech and biometric technologies that run efficiently on low-power processors without relying on cloud connectivity. Sensory’s AI stacks power over 3 billion devices worldwide, emphasizing privacy, responsiveness, and energy efficiency in consumer electronics, automotive systems, wearables, IoT, and more.

Why On-Device AI Matters

Sensory’s core philosophy centers on edge computing — running AI locally on the device rather than in the cloud. This approach has three major advantages:

- Low Latency: Immediate voice recognition and response, even without network connectivity.

- Privacy by Design: Speech and biometric data never leave the device, reducing risk of interception or misuse.

- Energy Efficiency: Hardware-optimized algorithms consume minimal power, suitable for embedded systems and battery-limited devices.

Sensory targets platforms ranging from small microcontrollers (MCUs) and digital signal processors (DSPs) to systems on chip (SoCs) in cars, phones, and smart home gadgets.

Core Technologies

Sensory’s technologies are usually packaged as modular components and can be combined depending on the product requirement. The most relevant to speech and audio interfaces include:

- Wake Word & Speech Recognition

- Sound ID

- Voice Biometrics and Multi-Modal Biometrics

- Speech-to-Text & Command Understanding

1. Speech Recognition and Wake Words

On-Device Speech Recognition (TrulyNatural™)

Sensory’s embedded speech recognition engine — marketed as TrulyNatural™ — delivers full speech-to-text and command recognition directly on the device. It’s designed to:

- Run on low-power processors without cloud dependencies.

- Recognize natural language and map spoken input to actions or commands.

- Maintain robust accuracy even in noisy environments, crucial for real-world devices.

TrulyNatural is often used alongside wake-word detection to reduce unnecessary continuous processing — triggering the full speech engine only after a designated phrase is detected.
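The gating described above can be illustrated with a minimal sketch. It is not Sensory's API: `GatedPipeline`, `detect_wake_word`, and `recognize` are hypothetical names, and text strings stand in for audio frames so the example stays self-contained.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class GatedPipeline:
    """Runs the expensive recognizer only after the cheap detector fires."""
    detect_wake_word: Callable[[str], bool]  # hypothetical low-power detector
    recognize: Callable[[str], str]          # hypothetical full speech engine
    awake: bool = False
    recognizer_calls: int = 0
    results: List[str] = field(default_factory=list)

    def feed(self, frame: str) -> None:
        if not self.awake:
            # Idle state: only the wake-word detector sees the audio.
            self.awake = self.detect_wake_word(frame)
            return
        # Awake state: pass one utterance to the full engine, then go idle.
        self.recognizer_calls += 1
        self.results.append(self.recognize(frame))
        self.awake = False


pipeline = GatedPipeline(
    detect_wake_word=lambda frame: frame == "hey device",
    recognize=lambda frame: frame.upper(),
)
for frame in ["background noise", "hey device", "lights on", "more noise"]:
    pipeline.feed(frame)
```

Of the four frames fed in, only the one that follows the wake phrase reaches the recognizer, which is where the power saving comes from.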

Wake Word Detection

Wake words (or hotwords) are brief, predefined phrases used to activate a device’s listening mode. Sensory provides:

- Pre-built wake words for quick integration.

- Tools to train custom wake words, including proprietary phrases unique to a brand or device.

- Low-power, noise-robust detectors that remain always-listening without significant power draw.

These capabilities support voice interfaces in mobile devices, televisions, vehicles, smart home products, and other always-available systems.

2. Sound ID: Audio Scene Awareness

While speech recognition focuses on human language, Sound ID extends Sensory’s technology to classify and interpret non-speech sounds — such as doorbells, alarms, pets, breaking glass, footsteps, or environmental noises.

Technically, Sound ID works by:

- Using deep learning and proprietary discriminating models to learn and differentiate characteristic sound patterns.

- Running entirely on device and in real time, enabling immediate reactions without network round-trip latency.

- Being hardware-agnostic and deployable across major consumer operating systems and embedded platforms.

Sound ID is typically deployed alongside Sensory’s other components, such as wake words and TrulySecure Speaker Verification (TSSV) voice biometrics, rather than as a standalone feature.

Use cases include suppressing false smart-assistant triggers, home safety alarms on IoT devices, and contextual awareness for user-centric interactions.

3. Voice Biometrics and Multi-Modal Verification

Speaker Verification: TrulySecure Speaker Verification (TSSV)

Sensory’s voice biometric system — often referred to as TSSV — enables devices to authenticate users by their voice. This allows personalized profiles, access security, and contextual behaviors based on who is speaking.

Key technical details include:

- Text-Dependent Voice Biometrics: Requires specific passphrases for verification.

- Text-Independent Voice Biometrics: Analyzes a speaker’s characteristics regardless of spoken content.

- Robust Feature Extraction: TSSV’s front-end processing includes advanced noise suppression and spectral/temporal modeling to handle real-world acoustic variations.

- Multi-User Support and Adaptation: The system can hold profiles for multiple users, and its accuracy improves over time as additional speech samples are provided.
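To make verification and adaptation concrete, here is a generic sketch of threshold-based speaker verification with a template that is refined as new samples arrive. It does not describe TSSV internals: cosine scoring, the running-mean update, the 0.8 threshold, and the three-element feature vectors are all assumptions chosen for illustration.

```python
import math
from typing import List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


class SpeakerProfile:
    """Hypothetical enrolled-speaker template (not Sensory's data model)."""

    def __init__(self, first_sample: List[float]) -> None:
        self.template = list(first_sample)
        self.count = 1

    def verify(self, sample: List[float], threshold: float = 0.8) -> bool:
        # Accept when the sample is close enough to the stored template.
        return cosine(self.template, sample) >= threshold

    def adapt(self, sample: List[float]) -> None:
        # Running mean: each accepted sample nudges the template.
        self.count += 1
        self.template = [
            t + (s - t) / self.count for t, s in zip(self.template, sample)
        ]


profile = SpeakerProfile([1.0, 0.0, 0.0])
same_speaker = [0.9, 0.1, 0.0]
other_speaker = [0.0, 1.0, 0.0]

accepted = profile.verify(same_speaker)
rejected = not profile.verify(other_speaker)
if accepted:
    profile.adapt(same_speaker)
```

Adapting only on accepted samples is one simple way to keep an impostor's speech from drifting the template; a production system would need stronger safeguards.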

Fusion with Face Biometrics

TSSV often pairs voice recognition with face biometric verification for enhanced security, termed “TrulySecure mode.” Devices can require both modalities or offer them in a convenience mode, depending on desired assurance levels.

Both face and voice processing are performed locally and use encrypted templates rather than storing raw biometric data, improving privacy and security.
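The two assurance levels can be sketched as a small decision rule over per-modality match scores. The names `Mode` and `authenticate`, the 0.7 threshold, and the reading of convenience mode as "either modality is enough" are assumptions for illustration, not documented Sensory behavior.

```python
from enum import Enum


class Mode(Enum):
    TRULY_SECURE = "both"    # face AND voice must match
    CONVENIENCE = "either"   # assumed: face OR voice is sufficient


def authenticate(face_score: float, voice_score: float,
                 mode: Mode, threshold: float = 0.7) -> bool:
    """Combine two per-modality match scores into one accept/reject decision."""
    face_ok = face_score >= threshold
    voice_ok = voice_score >= threshold
    if mode is Mode.TRULY_SECURE:
        return face_ok and voice_ok
    return face_ok or voice_ok


# A good voice match with a poor face match (for example, a dark room).
strict = authenticate(0.4, 0.9, Mode.TRULY_SECURE)
relaxed = authenticate(0.4, 0.9, Mode.CONVENIENCE)
```

Requiring both modalities lowers the false-accept rate at the cost of more false rejects; accepting either does the opposite.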

4. Developer Tools and Customization

VoiceHub Portal

Sensory offers an online portal called VoiceHub, allowing developers to build and customize:

- Wake words

- Speech recognition models

- Grammar structures and intent handlers

VoiceHub simplifies generation of tailored models without deep AI expertise, accelerating product development for embedded voice systems.

Technical Benefits and Industry Impact

Sensory’s technology suite is engineered to balance accuracy, efficiency, and privacy, enabling features that were once the domain of cloud-based systems to thrive on device:

- High Accuracy in Noisy Environments: Robust training and algorithmic design ensure dependable performance even with ambient noise.

- Low Power Consumption: Optimized for MCUs, DSPs, and mobile SoCs, making it suitable for wearable and battery-powered devices.

- Privacy-Preserving Architecture: On-device processing means personal data never leaves the product.

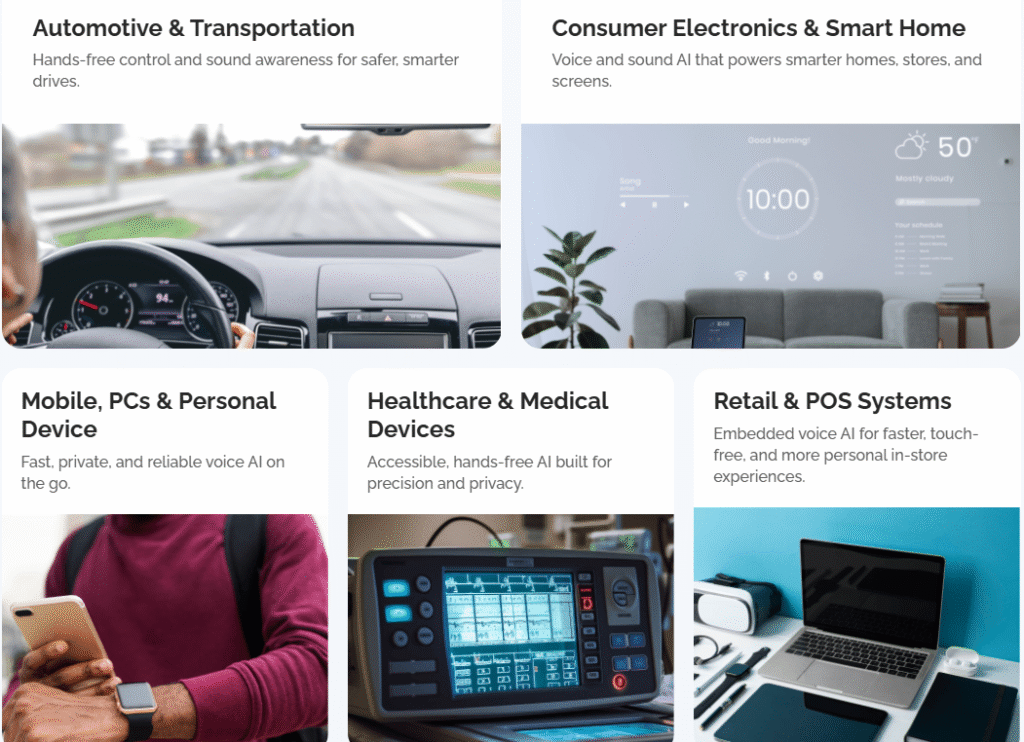

Sensory’s solutions are used across numerous industries including automotive hands-free systems, smart home assistants, consumer electronics, medical devices, and point-of-sale terminals.

Conclusion

Sensory Inc. represents a comprehensive approach to embedded voice and sound AI, combining decades of research with real-world deployments. Its technologies — spanning wake words, speech recognition, Sound ID, and biometrics — emphasize on-device intelligence, addressing key challenges in privacy, latency, and power consumption faced by traditional cloud-dependent systems.

By offering a portfolio that’s both flexible and scalable, Sensory continues to influence how devices listen, understand, and respond in increasingly human-centric ways.

How Sensory’s On-Device AI Models Compare with Cloud-Based ASR Systems and Modern Transformer-Based Recognizers

1. Architectural Philosophy

Sensory (On-Device / Edge AI)

Sensory’s models are designed for embedded execution on MCUs, DSPs, and low-power SoCs.

Key architectural traits

- Compact acoustic and classification models

- Optimized DSP pipelines (FFT, MFCC, PLP variants)

- Fixed-point or mixed-precision inference

- Event-driven execution (wake word → ASR → intent)

The design assumes:

- No persistent network

- Hard memory and power limits

- Real-time constraints
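Fixed-point inference, listed among the architectural traits above, usually starts with quantizing floating-point weights to small integers. The sketch below shows symmetric 8-bit quantization as a generic technique; it is not Sensory's quantization scheme, and the weight values are made up.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] with one shared scale factor."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127.0 if largest > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.50, -1.27, 0.03, 1.00]   # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Each weight now fits in one byte instead of four, and the rounding error per weight is bounded by half the scale factor.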

Cloud-Based ASR (Google, Amazon, Microsoft, etc.)

Cloud ASR systems prioritize accuracy and scale over footprint.

Key architectural traits

- Large DNNs or transformer stacks

- Centralized inference on GPUs/TPUs

- Continuous streaming audio to cloud

- Language models updated continuously

The design assumes:

- Reliable network connectivity

- High compute availability

- Centralized data aggregation

Transformer-Based ASR (Whisper, Conformer, wav2vec 2.0, etc.)

Transformers emphasize sequence modeling and context retention.

Key architectural traits

- Self-attention layers (cost grows quadratically with sequence length)

- Large parameter counts (from about 10 million to billions)

- Pretrained on massive multilingual datasets

- Typically cloud or high-end edge (phones, PCs)

2. Model Size & Computational Footprint

| Dimension | Sensory On-Device | Cloud ASR | Transformer-Based ASR |
|---|---|---|---|
| Typical model size | 100 KB – few MB | 100s of MB – GBs | 10 MB – multiple GB |
| Compute target | MCU / DSP | GPU / TPU | GPU / NPU / high-end CPU |
| Power consumption | milliwatts | Irrelevant to device | watts (edge), kilowatts (cloud) |
| Always-on capable | Yes | No | Rarely |

Key difference:

Sensory trades model breadth for deterministic, low-power inference.
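The size ranges in the table follow from simple arithmetic: weight storage is roughly the parameter count times the bits per weight. The parameter counts below are hypothetical round numbers, not figures for any specific Sensory or third-party model.

```python
def model_size_bytes(num_parameters: int, bits_per_weight: int) -> int:
    """Approximate weight storage, ignoring metadata and activations."""
    return num_parameters * bits_per_weight // 8


# Hypothetical embedded model: 250,000 parameters stored as 8-bit integers.
embedded = model_size_bytes(250_000, 8)

# Hypothetical large transformer: 1 billion parameters stored as 16-bit floats.
large = model_size_bytes(1_000_000_000, 16)
```

The first case comes to 250,000 bytes (about 250 KB), which fits the flash of many microcontrollers; the second comes to 2,000,000,000 bytes (about 2 GB), which does not.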

3. Latency & Responsiveness

Sensory

- Inference occurs locally

- Wake word latency: <100 ms

- Command recognition: near-instant

- Works offline

This makes Sensory ideal for:

- Automotive voice controls

- Wearables

- Medical devices

- Safety-critical systems

Cloud ASR

Latency includes:

- Audio capture

- Network transmission

- Queueing & inference

- Response transmission

Even optimized systems experience:

- 300 ms – several seconds

- Total failure when offline
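A rough latency budget shows how the cloud stages add up. The per-stage numbers below are illustrative assumptions, not measurements of any provider; `cloud_latency_ms` is a hypothetical helper.

```python
from typing import Dict, Optional

# Assumed per-stage latencies in milliseconds (illustration only).
CLOUD_STAGES_MS: Dict[str, float] = {
    "audio_capture": 20.0,
    "network_uplink": 80.0,
    "queue_and_inference": 150.0,
    "network_downlink": 80.0,
}


def cloud_latency_ms(stages: Dict[str, float], online: bool) -> Optional[float]:
    """Sum the stage latencies, or return None when there is no network."""
    if not online:
        return None  # no result at all, rather than a slow one
    return sum(stages.values())


online_total = cloud_latency_ms(CLOUD_STAGES_MS, online=True)
offline_total = cloud_latency_ms(CLOUD_STAGES_MS, online=False)
```

With these assumed values the total is 330 ms, and the two network legs account for almost half of it. An on-device recognizer skips those legs entirely.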

Transformers

- Excellent transcription quality

- Latency grows with:

  - Input length

  - Attention window size

- Often unsuitable for always-listening scenarios
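The dependence on input length and attention window can be made concrete by counting attention-score entries per head and layer. The 50 frames per second rate and the window of 128 frames are assumptions for illustration; real models differ.

```python
from typing import Optional

FRAMES_PER_SECOND = 50  # assumed encoder frame rate, for illustration only


def attention_score_entries(seconds: float, window: Optional[int] = None) -> int:
    """Count query-key score entries for one attention head in one layer."""
    n = int(seconds * FRAMES_PER_SECOND)
    if window is None:
        return n * n              # full self-attention: quadratic in length
    return n * min(window, n)     # windowed attention: linear in length


full_10s = attention_score_entries(10)
full_20s = attention_score_entries(20)
windowed_20s = attention_score_entries(20, window=128)
```

Doubling the audio from 10 to 20 seconds quadruples the full-attention count (250,000 to 1,000,000 entries), while a fixed window keeps growth linear (128,000 entries for 20 seconds).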

4. Accuracy vs Domain Specialization

Sensory

Sensory models are domain-specific, not general conversational engines.

Strengths

- Very high accuracy for:

  - Wake words

  - Command grammars

  - Known intents

  - Speaker verification

- Robust in noise due to engineered front-ends

Limitations

- Not designed for open-ended dictation

- Smaller vocabulary than cloud LLM-powered ASR

Cloud ASR

Strengths

- Best-in-class general transcription

- Large vocabulary & multilingual support

- Contextual language understanding

Limitations

- Accuracy degrades with:

  - Packet loss

  - High noise before transmission

- Unavailable in privacy-restricted environments

Transformer-Based ASR

Strengths

- Superior long-range context modeling

- Handles accents and code-switching well

- State-of-the-art WER in benchmarks

Limitations

- Resource-intensive

- Overkill for command-and-control use cases

5. Sound ID vs General Audio Classification

Sensory Sound ID

Sensory’s Sound ID models are event classifiers, not general audio embeddings.

- Optimized CNN / DNN architectures

- Small feature vectors

- Deterministic inference

- Designed for triggering actions, not labeling datasets

Examples:

- Glass break → alarm

- Baby cry → notification

- Door knock → wake system
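The event-to-action mapping in these examples amounts to a thresholded lookup on the classifier output. In the sketch below, the label names, the action names, and the 0.6 confidence threshold are hypothetical and do not come from Sensory's Sound ID interface.

```python
from typing import Dict, Optional

# Hypothetical mapping from detected sound event to device action.
ACTIONS: Dict[str, str] = {
    "glass_break": "trigger_alarm",
    "baby_cry": "send_notification",
    "door_knock": "wake_system",
}


def react(scores: Dict[str, float], threshold: float = 0.6) -> Optional[str]:
    """Pick the top-scoring event and act only if it is confident enough."""
    label, score = max(scores.items(), key=lambda item: item[1])
    if score < threshold:
        return None  # ambiguous audio: do nothing rather than misfire
    return ACTIONS.get(label)


confident = react({"glass_break": 0.91, "baby_cry": 0.05, "door_knock": 0.04})
ambiguous = react({"glass_break": 0.40, "baby_cry": 0.35, "door_knock": 0.25})
```

The classifier output feeds a decision directly, with no intermediate embedding or downstream model, which is what keeps reaction time short.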

Transformer Audio Models

- Use embeddings (e.g., wav2vec, HuBERT)

- Require downstream classifiers

- High flexibility, high cost

- Typically cloud-hosted

Key difference:

Sensory Sound ID prioritizes reaction speed and efficiency, not analytical richness.

6. Voice Biometrics: Sensory vs Modern Approaches

Sensory Voice Biometrics (TSSV)

- Text-dependent and text-independent modes

- Feature-based speaker modeling

- Secure template storage on device

- Adaptive learning with limited samples

Optimized for:

- Embedded authentication

- Multi-user household devices

- Automotive personalization

Cloud / Transformer-Based Speaker ID

- Often use x-vectors or ECAPA-TDNNs

- Larger embeddings

- Centralized model updates

- Higher accuracy at scale

Trade-off

- Sensory: privacy + immediacy

- Cloud: scale + statistical robustness

7. Privacy, Security & Compliance

Sensory

- Audio never leaves device

- No raw voice storage

- Encrypted biometric templates

- Easier compliance with:

  - GDPR

  - HIPAA

  - Medical and automotive regulations

Cloud / Transformer ASR

- Audio is transmitted and processed remotely

- Requires:

  - Consent management

  - Data retention policies

  - Breach risk mitigation

8. When Sensory Beats Transformers (and When It Doesn’t)

Sensory is superior when:

- Device must be always listening

- Power budget is extremely limited

- Network is unreliable or forbidden

- Commands and intents are known

- Privacy is a hard requirement

Transformers are superior when:

- Open-ended dictation is needed

- Long conversational context matters

- Compute and bandwidth are available

- Language flexibility outweighs cost

9. Hybrid Architectures (Common in Practice)

Many modern systems combine both:

Typical hybrid flow

- Sensory wake word (on-device)

- Sensory speaker verification (on-device)

- Sensory command handling (on-device)

- Optional escalation to cloud / transformer ASR for:

  - Dictation

  - Complex queries

  - LLM-driven responses

This preserves:

- Battery life

- Privacy

- Responsiveness

while still enabling advanced capabilities.
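The hybrid flow can be sketched as a routing function that tries the on-device path first and escalates only when needed. Everything here is illustrative: `handle`, `LOCAL_COMMANDS`, and the return codes are hypothetical, and text stands in for audio that would already have passed wake-word detection.

```python
from typing import Callable, Dict, Optional

# Hypothetical on-device command grammar.
LOCAL_COMMANDS: Dict[str, str] = {
    "lights on": "LIGHTS_ON",
    "volume up": "VOLUME_UP",
}


def handle(utterance: str, speaker_verified: bool,
           cloud_asr: Optional[Callable[[str], str]] = None) -> str:
    """Route an utterance: local command first, cloud only as a fallback."""
    if not speaker_verified:
        return "REJECTED"             # on-device speaker verification failed
    intent = LOCAL_COMMANDS.get(utterance)
    if intent is not None:
        return intent                 # handled on device, nothing transmitted
    if cloud_asr is None:
        return "UNSUPPORTED_OFFLINE"  # no network path configured
    return cloud_asr(utterance)       # optional escalation


local = handle("lights on", speaker_verified=True)
escalated = handle("what is the weather tomorrow", speaker_verified=True,
                   cloud_asr=lambda text: "CLOUD:" + text)
offline = handle("what is the weather tomorrow", speaker_verified=True)
```

Known commands never leave the device, so the common case stays fast and private, and the cloud is contacted only for requests the local grammar cannot cover.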

Sensory’s AI models target a different optimization frontier: deterministic, privacy-preserving, ultra-low-power intelligence at the edge.
