Diffusion Policy is a new way of generating robot behavior that represents a robot’s visuomotor policy as a conditional denoising diffusion process.

The team benchmarked Diffusion Policy across 12 different tasks from 4 different robot manipulation benchmarks and found that it consistently outperforms existing state-of-the-art robot learning methods, with an average improvement of 46.9%. Diffusion Policy learns the gradient of the action-distribution score function and iteratively optimizes with respect to this gradient field during inference via a series of stochastic Langevin dynamics steps.
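To make the idea of stochastic Langevin dynamics steps concrete, here is a minimal sketch on a toy problem. It is not the paper's implementation: instead of a learned network, it assumes a hand-written score function for a one-dimensional, two-mode "action" distribution, and every name in it (`mixture_score`, `langevin_sample`, the step size, the number of steps) is a hypothetical choice made for illustration.

```python
import numpy as np

def mixture_score(a, means=(-2.0, 2.0), sigma=0.5):
    """Gradient of log p(a) for an equal-weight 1-D Gaussian mixture.

    Stands in for the learned score; in a real policy this would be a
    neural network conditioned on observations.
    """
    a = np.asarray(a, dtype=float)
    means = np.asarray(means, dtype=float)
    # Per-component log-density, up to a shared additive constant
    log_w = -0.5 * ((a[..., None] - means) / sigma) ** 2
    log_w -= log_w.max(axis=-1, keepdims=True)  # numerical stability
    w = np.exp(log_w)
    w /= w.sum(axis=-1, keepdims=True)  # component responsibilities
    # Mixture score = responsibility-weighted sum of component scores
    return (w * (means - a[..., None])).sum(axis=-1) / sigma ** 2

def langevin_sample(score_fn, n_samples=2000, n_steps=500,
                    step_size=0.01, seed=0):
    """Run unadjusted Langevin dynamics from broad Gaussian noise."""
    rng = np.random.default_rng(seed)
    a = rng.normal(0.0, 3.0, size=n_samples)
    for _ in range(n_steps):
        noise = rng.normal(size=n_samples)
        # Follow the score (gradient of log-density) plus injected noise
        a = a + 0.5 * step_size * score_fn(a) + np.sqrt(step_size) * noise
    return a

samples = langevin_sample(mixture_score)
frac_left = float(np.mean(samples < 0))  # share of samples in the left mode
```

In this toy setting the samples settle around both modes rather than averaging them, which is the behavior that makes the formulation attractive for multimodal action distributions.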

The diffusion formulation yields powerful advantages when used for robot policies, including gracefully handling multimodal action distributions, being suitable for high-dimensional action spaces, and exhibiting impressive training stability.

To fully unlock the potential of diffusion models for visuomotor policy learning on physical robots, this paper presents a set of key technical contributions including the incorporation of receding horizon control, visual conditioning, and the time-series diffusion transformer.

TRI researchers hope this work will help motivate a new generation of policy learning techniques that are able to leverage the powerful generative modeling capabilities of diffusion models.

Diffusion Policy

Visuomotor Policy Learning via Action Diffusion

This paper introduces Diffusion Policy, a new way of generating robot behavior by representing a robot’s visuomotor policy as a conditional denoising diffusion process. We benchmark Diffusion Policy across 12 different tasks from 4 different robot manipulation benchmarks and find that it consistently outperforms existing state-of-the-art robot learning methods with an average improvement of 46.9%. Diffusion Policy learns the gradient of the action-distribution score function and iteratively optimizes with respect to this gradient field during inference via a series of stochastic Langevin dynamics steps. We find that the diffusion formulation yields powerful advantages when used for robot policies, including gracefully handling multimodal action distributions, being suitable for high-dimensional action spaces, and exhibiting impressive training stability. To fully unlock the potential of diffusion models for visuomotor policy learning on physical robots, this paper presents a set of key technical contributions including the incorporation of receding horizon control, visual conditioning, and the time-series diffusion transformer. We hope this work will help motivate a new generation of policy learning techniques that are able to leverage the powerful generative modeling capabilities of diffusion models.
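As a rough guide to what "iteratively optimizes with respect to this gradient field" means, one common way to write a single conditional denoising step is sketched below. The notation is assumed here for illustration and does not appear on this page: $A_t^k$ is the action sequence at denoising iteration $k$, $O_t$ is the observation, $\epsilon_\theta$ is the learned noise-prediction network, and $\alpha$, $\gamma$, $\sigma$ are terms set by the noise schedule.

$$
A_t^{k-1} = \alpha \left( A_t^{k} - \gamma \, \epsilon_\theta\!\left(O_t, A_t^{k}, k\right) + \mathcal{N}\!\left(0, \sigma^2 I\right) \right)
$$

Read this way, each step resembles a noisy gradient-descent update $x' = x - \gamma \nabla E(x)$, with $\epsilon_\theta$ playing the role of the gradient field and the added Gaussian term supplying the stochasticity of Langevin dynamics.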

Highlights

Diffusion Policy predicts a sequence of actions for receding-horizon control.
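Receding-horizon control can be sketched as a simple loop: predict a sequence of future actions, execute only the first few, then re-plan from the new observation. The example below is a toy illustration under stated assumptions, not the authors' code. The policy is a hypothetical hand-written stand-in for a learned model, the "robot" is a point on a line, and the horizon lengths (16 predicted, 8 executed) are illustrative values.

```python
import numpy as np

def predict_action_sequence(obs, horizon, goal=1.0):
    """Hypothetical stand-in for a learned policy.

    Returns `horizon` equal displacement actions that would reach `goal`
    if the whole plan were executed, with each action clipped in size.
    """
    step = np.clip((goal - obs) / horizon, -0.1, 0.1)
    return np.full(horizon, step)

def run_receding_horizon(policy, obs0=0.0, pred_horizon=16,
                         exec_horizon=8, max_steps=64):
    """Plan pred_horizon actions, execute exec_horizon of them, re-plan."""
    obs = obs0
    trajectory = [obs]
    n_replans = 0
    steps_taken = 0
    while steps_taken < max_steps:
        plan = policy(obs, pred_horizon)  # predict a full action sequence
        n_replans += 1
        for action in plan[:exec_horizon]:  # execute only the first part
            obs = obs + action  # toy dynamics: position += displacement
            trajectory.append(obs)
            steps_taken += 1
            if steps_taken >= max_steps:
                break
    return np.array(trajectory), n_replans

trajectory, n_replans = run_receding_horizon(predict_action_sequence)
```

Executing only part of each plan is a trade-off: longer executed chunks keep the motion consistent over time, while more frequent re-planning lets the controller react sooner to new observations.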

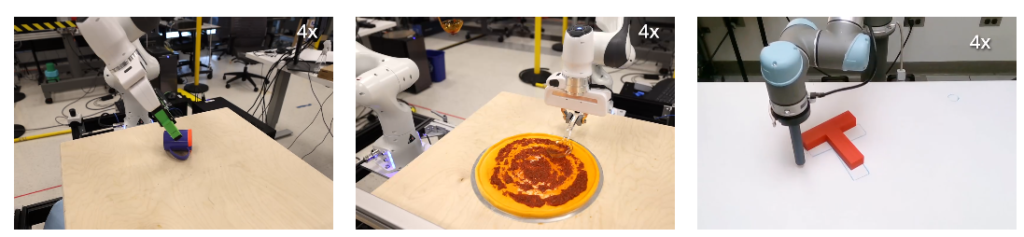

The Mug Flipping task requires the policy to predict smooth 6 DoF actions while operating close to kinematic limits.

Toward making 🍕: The sauce pouring and spreading task manipulates liquid with 6 DoF and periodic actions.

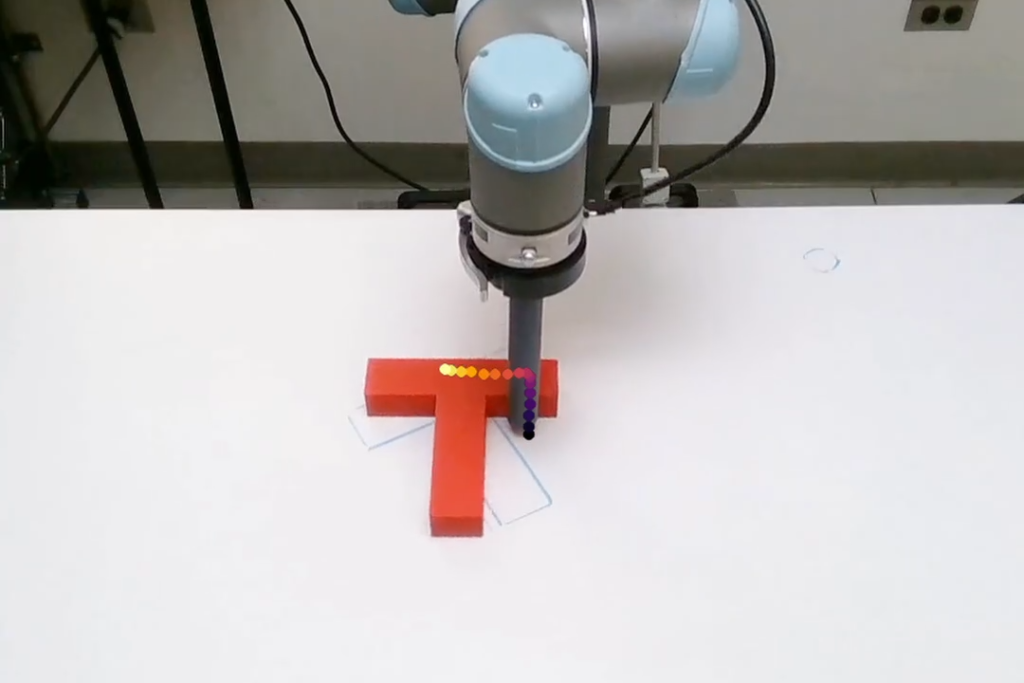

In our Push-T experiments, Diffusion Policy is highly robust against perturbations and visual distractions.

Diffusion Policy outperforms prior state-of-the-art on 12 tasks across 4 benchmarks with an average success-rate improvement of 46.9%. Check out our paper for further details!

Special shoutout to the authors of these projects for open-sourcing their simulation environments:

1. Robomimic
2. Implicit Behavior Cloning
3. Behavior Transformer
4. Relay Policy Learning

Paper

Latest version: arXiv:2303.04137 [cs.RO]

Robotics: Science and Systems (RSS) 2023

https://www.tri.global/research/diffusion-policy-visuomotor-policy-learning-action-diffusion