The Ultra Mobility Vehicle (UMV) is a research robot from the Robotics and AI (RAI) Institute that takes a new approach to mobility: it combines the efficiency and speed of wheels with the jumping and obstacle-clearing abilities usually reserved for legged robots. The result is a two-wheeled, self-balancing “robot bike” that drives, hops, flips, holds sustained wheelies, and performs precision balance moves such as a track-stand. It learns most of these behaviors through reinforcement learning rather than hand-coded routines.

The goal of the UMV project at the RAI Institute is first to build a robot that combines the strengths of legs and wheels, and then to use AI to unlock its physical capabilities. This research includes giving the robot cognitive intelligence and situational awareness so it can reason effectively about its ability to move through the world, including the kind of terrain that trials cyclists negotiate: large gaps, vertical steps up or down, rocks, handrails, pedestals, downhill race courses, and the like.

This research helps us better understand robot control, state estimation, and perception. The UMV project team includes roboticists with expertise in mechanical engineering, electrical engineering, controls, machine learning, software, and perception.

Why build a robot bike?

Wheeled systems are extremely efficient on flat ground, while legged robots excel at negotiating discontinuous and vertical terrain. The UMV project intentionally fuses both advantages: a bike-like lower chassis for rolling efficiency and a high-performance jumping mechanism that concentrates mass and actuation in an upper “head” to enable hops, flips, and other athletic moves. The research aim is not stunt videos alone — the team wants machines that understand their own physical capabilities and can reason about whether they can traverse a particular obstacle.

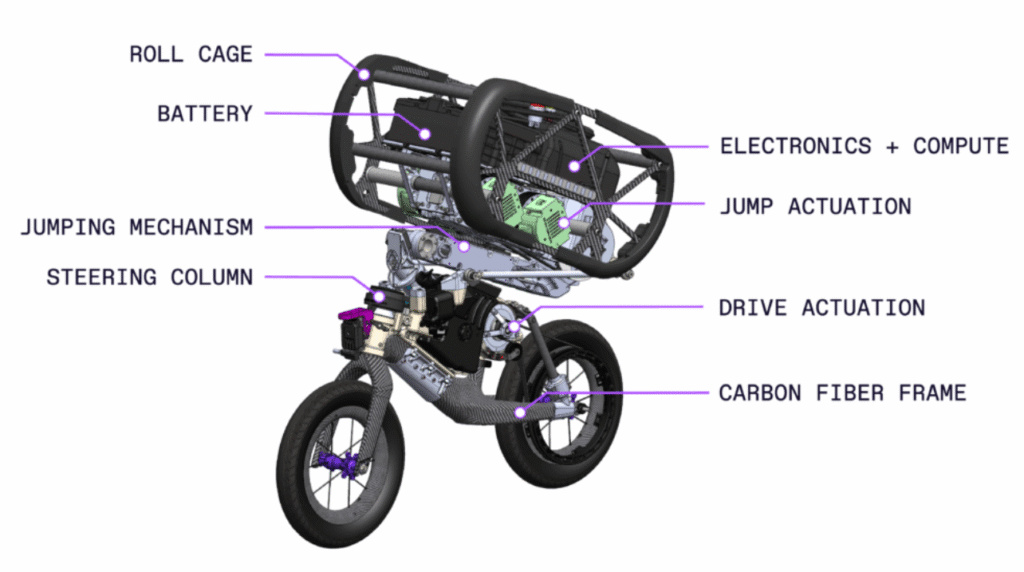

Mechanical design and hardware

- Form factor: The UMV uses a lightweight carbon-fiber bike frame as its lower half and a Z-shaped body that can extend and tuck: roughly 80 cm tall when tucked and over 152 cm when extended. The design concentrates mass in the upper body (sprung mass) while minimizing unsprung mass near the wheels, which makes jumping and aerial maneuvers more effective. The full system weighs about 23 kg.

- Actuation: The lower half contains two motors: one for forward velocity and one for steering. The upper “head” contains a custom actuation system with four motors (used in pairs) dedicated to jumping and to attitude control during airborne phases. The jumping mechanism is reinforced to withstand high launch forces and hard landings.

- Power: UMV uses custom-tuned, safety-conscious high-power batteries designed to deliver bursts of energy for jumps without excessive mass penalty.

- Structure & protection: The robot uses a roll cage and a robust frame to survive crashes during development and testing. Images of the chassis show a protective outer lattice surrounding and supporting the head and electronics.
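A toy calculation helps show why concentrating mass in the sprung upper body matters. The sketch below is an idealized two-mass model under stated assumptions, not the RAI Institute's design analysis: the jump actuator delivers a fixed energy to the upper body while the wheels stay on the ground, gravity during push-off is ignored, and at full extension the upper body lifts the lower chassis in a perfectly inelastic "catch". Only the 23 kg total mass comes from the article; the actuator energy and the mass splits are hypothetical.

```python
"""Idealized two-mass jump model (illustrative assumptions, not UMV data).

- The robot is two point masses: sprung upper body m_up, unsprung chassis m_low.
- The actuator gives a fixed energy E to the upper body while the chassis
  stays on the ground; gravity during push-off is ignored.
- At full extension the upper body catches the chassis in a perfectly
  inelastic collision: momentum is conserved, some kinetic energy is lost.
"""
import math

G = 9.81  # gravitational acceleration, m/s^2


def takeoff_velocity(energy_j: float, m_up: float, m_low: float) -> float:
    """Velocity of the whole robot just after the chassis leaves the ground."""
    v_up = math.sqrt(2.0 * energy_j / m_up)  # upper body speed after push-off
    return m_up * v_up / (m_up + m_low)      # momentum conservation in the catch


def retained_energy_fraction(m_up: float, m_low: float) -> float:
    """Share of the actuator energy left as kinetic energy after the catch."""
    return m_up / (m_up + m_low)


def apex_rise(energy_j: float, m_up: float, m_low: float) -> float:
    """Ballistic rise of the center of mass after takeoff (no drag)."""
    v = takeoff_velocity(energy_j, m_up, m_low)
    return v * v / (2.0 * G)


if __name__ == "__main__":
    total_mass = 23.0  # kg, from the article
    energy = 400.0     # J, hypothetical actuator energy per jump
    for m_up in (11.5, 16.0, 20.0):  # hypothetical sprung-mass splits
        m_low = total_mass - m_up
        print(f"sprung {m_up:4.1f} kg: keeps {retained_energy_fraction(m_up, m_low):.0%} "
              f"of E, CoM rise {apex_rise(energy, m_up, m_low):.2f} m")
```

Because momentum is conserved in the catch, only the fraction m_up / (m_up + m_low) of the actuator's energy survives as takeoff kinetic energy. Under this model, a heavier upper body and a lighter wheel assembly translate directly into a higher jump for the same actuator.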

Sensors and state estimation

UMV fuses multiple sensing modalities to drive, balance, and prepare for contact:

- Joint encoders on all actuated degrees of freedom for precise motor position/velocity feedback.

- Multiple IMUs (Inertial Measurement Units) for body acceleration, angular velocity, and gravity orientation; fused IMU readings allow contact detection and estimation of ground reaction forces.

- High-speed time-of-flight (ToF) sensors to measure height above ground and anticipate landings.

- LiDAR for local terrain mapping (point clouds) and obstacle awareness.

- Forward camera for tracking laboratory fiducials and assisting localization indoors.

These sensors let UMV estimate its state, detect contacts and impacts, and support perception-informed planning.
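To make the ToF and IMU roles concrete, here is a hedged sketch of two simple building blocks: a time-to-touchdown estimate from height above ground and vertical velocity, and a hysteresis-based contact detector on accelerometer magnitude. The function names, the thresholds, and the assumption of a level body in purely ballistic flight are illustrative; they are not taken from UMV's software, which fuses several sensors in ways not described here.

```python
"""Hypothetical sensing helpers in the spirit of UMV's ToF and IMU usage.

Names, thresholds and the ballistic, level-body assumption are illustrative.
"""
import math
from typing import Iterable, List

G = 9.81  # m/s^2


def time_to_touchdown(height_m: float, vz_mps: float, g: float = G) -> float:
    """Seconds until ground contact for a ballistic body.

    height_m: height above ground, e.g. from a downward-facing ToF sensor.
    vz_mps:   vertical velocity, positive up (e.g. from IMU-based estimation).
    Solves height + vz*t - g*t^2/2 = 0 for the positive root.
    """
    if height_m <= 0.0:
        return 0.0  # already at (or below) the ground
    return (vz_mps + math.sqrt(vz_mps * vz_mps + 2.0 * g * height_m)) / g


def detect_contact(accel_norms: Iterable[float],
                   flight_below: float = 0.3 * G,
                   contact_above: float = 1.5 * G,
                   start_in_contact: bool = True) -> List[bool]:
    """Label each accelerometer-magnitude sample as contact (True) or flight (False).

    An accelerometer reads near-zero specific force in free fall and spikes
    on impact; readings between the two thresholds keep the previous label.
    """
    in_contact = start_in_contact
    labels = []
    for a in accel_norms:
        if a < flight_below:
            in_contact = False
        elif a > contact_above:
            in_contact = True
        labels.append(in_contact)
    return labels
```

The two thresholds form a hysteresis band, so the detector does not flicker between flight and contact on noisy samples near 1 g.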

Control and learning stack

UMV’s remarkable behaviors come from combining classical controls with reinforcement learning (RL):

- Simulation-first RL training: The RAI team trains policies extensively in physics simulation (using NVIDIA’s Isaac Lab and a high-fidelity dynamics model), so controllers can be learned through millions of trials without breaking hardware. The reward function favors desired behaviors (stable driving, controlled jumps, safe landings) and penalizes undesirable outcomes.

- Sim-to-real techniques: Because simulated physics never perfectly matches reality, UMV uses two standard sim-to-real strategies: (1) improving the fidelity of the robot’s model (mass properties, motor torque curves, wheel bounce) and (2) domain randomization — randomizing parameters like masses, motor constants and friction during training so learned policies generalize to physical variance. The team also runs empirical tests (e.g., wheel drop tests) to better model impacts.

- Hybrid deployment: New policies are tested cautiously on hardware through an iterative workflow of training in simulation, deploying on the robot while the team observes, and then refining the reward or model to close the gap between simulation and reality. Classical low-level controllers still regulate motor commands and enforce safety constraints, while the learned policies output higher-level actions.
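The three ingredients above can be sketched in a few lines of Python. This is a hypothetical illustration, not the team's Isaac Lab setup: `PhysicsParams`, the randomization ranges, the reward terms and weights, and the PD gains and torque limit are made-up values chosen for readability. It shows per-episode domain randomization, a shaped reward that tracks a commanded speed while penalizing wobble and falls, and a low-level PD loop that turns a policy's joint setpoint into a saturated torque command.

```python
"""Hypothetical sketch of sim-to-real ingredients (illustrative values only)."""
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    mass_scale: float       # multiplier on nominal link masses
    motor_kt_scale: float   # multiplier on nominal motor torque constant
    ground_friction: float  # wheel-ground friction coefficient


def sample_randomized_params(rng: random.Random) -> PhysicsParams:
    """Domain randomization: draw fresh physics parameters for each episode."""
    return PhysicsParams(
        mass_scale=rng.uniform(0.9, 1.1),
        motor_kt_scale=rng.uniform(0.85, 1.15),
        ground_friction=rng.uniform(0.5, 1.2),
    )


def drive_reward(forward_speed: float, target_speed: float,
                 roll_rate: float, fell_over: bool) -> float:
    """Reward speed tracking; penalize roll-rate wobble and falls."""
    tracking = -(forward_speed - target_speed) ** 2
    wobble = -0.1 * roll_rate ** 2
    fall = -100.0 if fell_over else 0.0
    return tracking + wobble + fall


def pd_torque(q_target: float, q: float, qd: float,
              kp: float = 40.0, kd: float = 1.5,
              torque_limit: float = 30.0) -> float:
    """Low-level PD tracking of a policy setpoint, saturated as a safety limit."""
    tau = kp * (q_target - q) - kd * qd
    return max(-torque_limit, min(torque_limit, tau))
```

Keeping the torque clamp in the classical layer means a misbehaving policy can request an aggressive setpoint but cannot command more than the configured torque.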

Capabilities demonstrated

In lab demonstrations the UMV has shown a suite of dynamic skills:

- Repeatedly jumping onto and off a ~1-meter table.

- Performing front flips and prolonged wheelies while driving.

- Hopping continuously on the rear wheel, much like a one-legged hopping robot.

- Executing a track-stand: coming to an abrupt stop and balancing in place on both wheels with zero forward speed.

- Performing combinations of tricks and precise maneuvers in a contained environment.

All of this is enabled primarily by policies learned via RL and validated on the real hardware (RAI Institute).
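As a rough back-of-the-envelope check on the table jump, treat the robot as a point mass whose center of mass must rise by about the table height, h ≈ 1 m. This is an assumption: it ignores posture changes between takeoff and landing (extending the body could reduce the required rise) and air drag. Ballistic motion then gives the minimum vertical takeoff speed and the time to apex:

```latex
v_z \ge \sqrt{2 g h} = \sqrt{2 \times 9.81\,\mathrm{m/s^2} \times 1.0\,\mathrm{m}} \approx 4.43\,\mathrm{m/s},
\qquad
t_{\mathrm{apex}} = \frac{v_z}{g} \approx \frac{4.43}{9.81}\,\mathrm{s} \approx 0.45\,\mathrm{s}.
```

Under these assumptions, any mid-air attitude correction before landing on the table has to happen within roughly half a second.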

Perception & future navigation plans

So far, UMV demonstrations have taken place in controlled indoor spaces, with sensing and localization supported by fiducials, LiDAR, and ToF sensors. The RAI team’s roadmap includes integrating flexible, high-performance perception to identify affordances in unstructured environments, for example recognizing ledges, gaps, rails, and footholds and reasoning about whether the robot’s current capabilities can overcome them. That cognitive layer (“athletic intelligence”) is the long-term objective.
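One simple way to picture reasoning about whether the robot can overcome an obstacle is a feasibility check against a physics model. The sketch below is hypothetical and is not the RAI Institute's planner: it treats the robot as a point mass launched with a chosen forward speed and a maximum vertical speed, and `Step`, `can_clear`, the speeds, and the safety margin are illustrative assumptions. Real affordance reasoning would also need body geometry, landing dynamics, and perception uncertainty.

```python
"""Hypothetical capability check: can a ballistic hop clear a step up?"""
from dataclasses import dataclass

G = 9.81  # m/s^2


@dataclass
class Step:
    distance_m: float  # horizontal distance from takeoff to the step edge
    height_m: float    # step height above the takeoff ground


def height_at(distance_m: float, vx: float, vz: float, g: float = G) -> float:
    """Point-mass height above the takeoff point after moving distance_m forward."""
    t = distance_m / vx  # time to reach the edge at constant forward speed
    return vz * t - 0.5 * g * t * t


def can_clear(step: Step, vx: float, vz_max: float, margin_m: float = 0.1) -> bool:
    """True if a launch with vertical speed up to vz_max clears the edge with margin."""
    if vx <= 0.0:
        return False
    # At a fixed arrival time, height at the edge grows linearly with vz,
    # so checking the maximum available vertical speed is sufficient.
    return height_at(step.distance_m, vx, vz_max) >= step.height_m + margin_m
```

A planner built this way would answer "can I get onto that ledge?" by comparing the perceived obstacle against the robot's known launch envelope, and would fall back to another route when the check fails.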

Research significance and possible applications

UMV isn’t meant to replace existing wheeled or legged robots; it’s a research platform exploring a sweet spot between them. Potential long-term benefits include:

- Fast, efficient traversal of mixed terrain, where wheels excel on smooth, continuous stretches and legs (or jumps) help clear obstacles.

- Inspection and search-and-rescue in environments with abrupt vertical changes where hopping to a ledge could dramatically reduce traversal time.

- Agriculture, maintenance, and logistics tasks where agility and speed together improve productivity.

But the immediate contribution is scientific: new approaches to robot design (mechanics + mass distribution), sensor fusion for contact-aware control, and RL workflows that close the sim-to-real gap for aggressive maneuvers.

UMV is a striking example of how modern robot design synthesizes mechanical ingenuity and machine learning. By concentrating mass, adding a jump-capable head, and training controllers in simulation with careful sim-to-real engineering, the RAI team built a nimble, bike-like robot that can do things most wheeled platforms only dream of. It’s a research platform with meaningful implications for agile autonomous mobility — and yes, it makes very entertaining demo videos.

Designing Wheeled Robotic Systems for Ultra Mobility and Performance | RAI Institute