Wi-Fi See It All (WiSIA) is an advanced Wi-Fi imaging system that leverages commercial off-the-shelf (COTS) Wi-Fi devices to detect and identify objects and humans, segment their boundaries, and recognize them within an image plane.

AI DensePose Approach:

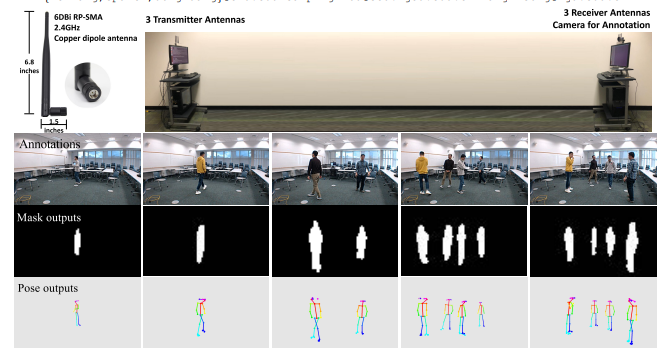

Researchers at Carnegie Mellon University have tested a system that uses Wi-Fi signals to determine the position and pose of humans in a room. In the tests, ordinary Wi-Fi routers (TP-Link Archer A7 AC1750) were placed at either end of a room containing varying numbers of people, and AI algorithms analyzed the disturbances that the people's bodies caused in the Wi-Fi signals.

Advances in computer vision and machine learning have driven significant progress in 2D and 3D human pose estimation from RGB cameras, LiDAR, and radar. However, pose estimation from images is degraded by occlusion and poor lighting, both of which are common in many scenarios of interest. Radar and LiDAR, on the other hand, require specialized hardware that is expensive and power-hungry. Furthermore, placing any of these sensors in non-public areas raises significant privacy concerns.

To address these limitations, this work expands on the use of Wi-Fi signals, combined with deep learning architectures commonly used in computer vision, to estimate dense human pose correspondence.

The team developed a deep neural network that maps the phase and amplitude of Wi-Fi signals to UV coordinates within 24 human body regions.
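Wi-Fi receivers report channel state information (CSI) as complex values per subcarrier and antenna pair, and the amplitude and phase the network consumes are derived from these values. Raw phase is wrapped to (-π, π] and carries hardware offsets, so it is usually sanitized first. The following is a minimal NumPy sketch under stated assumptions: the function name `csi_to_amplitude_phase`, the layout with subcarriers on the last axis, and the example shape (5 samples × 3 × 3 antenna pairs × 30 subcarriers, stacked into 150 × 3 × 3) are illustrative, and the unwrap-plus-linear-detrend step is one common sanitization technique, not necessarily the exact pipeline the CMU team used.

```python
import numpy as np


def csi_to_amplitude_phase(csi: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split complex CSI into amplitude and sanitized phase.

    Assumed (hypothetical) layout: subcarriers on the last axis.
    """
    amplitude = np.abs(csi)
    # Raw phase is wrapped to (-pi, pi]; unwrap it along the subcarrier axis.
    phase = np.unwrap(np.angle(csi), axis=-1)
    # Remove a per-vector linear trend across subcarriers, a common way to
    # cancel timing and phase offsets. polyfit fits every column at once.
    n_sub = csi.shape[-1]
    k = np.arange(n_sub)
    flat = phase.reshape(-1, n_sub)
    coeffs = np.polyfit(k, flat.T, deg=1)  # shape (2, n_vectors): slope, intercept
    trend = coeffs[0][:, None] * k + coeffs[1][:, None]
    sanitized = (flat - trend).reshape(phase.shape)
    return amplitude, sanitized


# Usage with random stand-in data (assumed shape: samples, tx, rx, subcarriers).
rng = np.random.default_rng(0)
csi = rng.normal(size=(5, 3, 3, 30)) + 1j * rng.normal(size=(5, 3, 3, 30))
amp, phase = csi_to_amplitude_phase(csi)
# Stack 5 samples x 30 subcarriers into 150 channels per 3x3 antenna grid.
amp_input = amp.transpose(0, 3, 1, 2).reshape(150, 3, 3)
phase_input = phase.transpose(0, 3, 1, 2).reshape(150, 3, 3)
```

A purely linear phase ramp across subcarriers is removed entirely by this step, which is why it suppresses offsets that grow linearly with subcarrier index.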

The results show that the model can estimate the dense pose of multiple subjects, with visual performance comparable to image-based approaches, using Wi-Fi signals as the only input. This paves the way for low-cost, broadly accessible, and privacy-preserving algorithms for human sensing.

Because it relies only on Wi-Fi signals, this method offers advantages over traditional camera-based systems, particularly in poorly lit or occluded scenes, and it avoids the privacy concerns associated with visual surveillance.
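The "UV coordinates within 24 human regions" output follows the DensePose convention: each pixel receives a body-part index (0 for background, 1 to 24 for the body parts) plus (U, V) surface coordinates in [0, 1] on that part. The sketch below assumes a DensePose-R-CNN-style head with 25 part-classification channels and one U map and one V map per part; the function `decode_iuv` and these tensor shapes are hypothetical and are not the published network's interface.

```python
import numpy as np

N_PARTS = 24  # DensePose body regions; index 0 is reserved for background


def decode_iuv(part_logits, u_maps, v_maps):
    """Turn dense-pose head outputs into per-pixel (I, U, V) maps.

    part_logits: (25, H, W) scores for background + 24 parts (assumed layout)
    u_maps, v_maps: (24, H, W) regressed coordinates, one map per part
    """
    part = np.argmax(part_logits, axis=0)    # (H, W), 0 = background
    idx = np.clip(part - 1, 0, N_PARTS - 1)  # U/V channel of the chosen part
    u = np.take_along_axis(u_maps, idx[None], axis=0)[0]
    v = np.take_along_axis(v_maps, idx[None], axis=0)[0]
    foreground = part > 0
    # Keep coordinates in [0, 1] on body pixels; zero them on background.
    u = np.where(foreground, np.clip(u, 0.0, 1.0), 0.0)
    v = np.where(foreground, np.clip(v, 0.0, 1.0), 0.0)
    return part, u, v
```

Selecting U and V from the channel of the winning part, rather than regressing a single shared UV map, is what lets each of the 24 regions keep its own surface parameterization.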

Conditional Generative Adversarial Network (cGAN) Approach:

WiSIA uses a conditional Generative Adversarial Network (cGAN) to enhance object boundaries, enabling effective imaging even in challenging conditions such as darkness or occlusion.

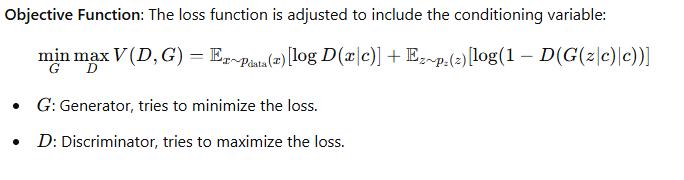

A conditional Generative Adversarial Network (cGAN) is a type of Generative Adversarial Network (GAN) where both the generator and discriminator are conditioned on additional information, such as labels, attributes, or data modalities. This conditioning enables the cGAN to generate outputs that are specific to the given input condition, making it particularly useful for tasks requiring controlled generation.
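The conditioning can be made precise with the standard cGAN minimax objective (the formulation introduced by Mirza and Osindero), shown here as a reference point rather than as WiSIA's exact loss. Here $x$ is a real sample, $c$ its condition, $z$ the noise input, $G$ the generator, and $D$ the discriminator, which outputs the probability that its input is real:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{(x, c)}\big[\log D(x \mid c)\big] + \mathbb{E}_{z \sim p_z,\, c}\big[\log\big(1 - D(G(z \mid c) \mid c)\big)\big]
$$

Dropping $c$ from every term recovers the original unconditional GAN objective.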

How cGAN Works

- Components:

  - Generator: Tries to create data (e.g., images) conditioned on the input (e.g., labels or images) to fool the discriminator.

  - Discriminator: Tries to distinguish between real data (from the dataset) and fake data (from the generator), also considering the conditioning input.

- Conditioning:

  - The generator receives noise $z$ (as in standard GANs) and a conditioning variable $c$.

  - The discriminator also takes $c$ as input to ensure it evaluates whether the generated data aligns with the condition.
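The conditioning described above can be sketched in a few lines of NumPy: the condition is one-hot encoded and concatenated to the generator's noise input and to the discriminator's data input. This is a forward-pass-only illustration under loud assumptions: the single-layer "networks", the sizes (`NOISE_DIM`, `N_CLASSES`, `DATA_DIM`), and the helper names are hypothetical, there is no training loop, and the generator uses the common non-saturating loss variant rather than the literal minimax term.

```python
import numpy as np

rng = np.random.default_rng(0)
NOISE_DIM, N_CLASSES, DATA_DIM = 16, 10, 64  # hypothetical sizes

# Hypothetical single-layer weights; real cGANs use deep networks.
W_g = rng.normal(scale=0.1, size=(NOISE_DIM + N_CLASSES, DATA_DIM))
W_d = rng.normal(scale=0.1, size=(DATA_DIM + N_CLASSES, 1))


def one_hot(labels, n):
    return np.eye(n)[labels]


def generator(z, c):
    # Condition the generator by concatenating noise z with condition c.
    return np.tanh(np.concatenate([z, c], axis=1) @ W_g)


def discriminator(x, c):
    # The discriminator also sees c, so it judges "real AND matches c".
    logits = np.concatenate([x, c], axis=1) @ W_d
    return 1.0 / (1.0 + np.exp(-logits[:, 0]))  # probability of "real"


def cgan_losses(x_real, labels, eps=1e-8):
    c = one_hot(labels, N_CLASSES)
    z = rng.normal(size=(len(labels), NOISE_DIM))
    x_fake = generator(z, c)
    d_real = discriminator(x_real, c)
    d_fake = discriminator(x_fake, c)
    # Discriminator: push D(x|c) -> 1 on real data and D(G(z|c)|c) -> 0.
    d_loss = -np.mean(np.log(d_real + eps) + np.log(1.0 - d_fake + eps))
    # Generator (non-saturating variant): push D(G(z|c)|c) -> 1.
    g_loss = -np.mean(np.log(d_fake + eps))
    return d_loss, g_loss, x_fake
```

Concatenation is the simplest conditioning scheme; image-conditioned models such as pix2pix instead stack the input image and the output image along the channel axis before the discriminator.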

Applications of cGAN

- Image-to-Image Translation:

  - Example: Converting sketches to realistic images, turning day images into night, or translating grayscale images to color.

  - Tools like Pix2Pix use cGANs for high-quality image-to-image tasks.

- Super-Resolution:

  - Enhancing the resolution of an image conditioned on the low-resolution version.

- Data Augmentation:

  - Generating synthetic data that follows the desired labels, useful in scenarios with limited data.

- Medical Imaging:

  - Generating diagnostic images conditioned on specific disease markers or parameters.

- Wi-Fi Imaging:

  - In systems like Wi-Fi See It All (WiSIA), cGANs enhance object boundaries and improve imaging under challenging conditions.
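For image-to-image translation, pix2pix trains its generator on the conditional adversarial loss plus an L1 reconstruction term weighted by λ (the pix2pix paper uses λ = 100), with a PatchGAN discriminator that scores local patches instead of the whole image. A minimal NumPy sketch of that generator objective follows; the function name and arguments are hypothetical, the patch probabilities are assumed to be already computed by a discriminator, and whether WiSIA uses this exact loss is not stated in these notes.

```python
import numpy as np


def pix2pix_generator_loss(d_fake_patches, fake, target, lam=100.0, eps=1e-8):
    """Generator objective in the style of pix2pix: cGAN term + lam * L1.

    d_fake_patches: discriminator probabilities (in (0, 1)) that each patch
        of the generated image, paired with its input, is real.
    fake, target: generated and ground-truth images of the same shape.
    """
    # Adversarial term (non-saturating form): reward patches judged "real".
    adversarial = -np.mean(np.log(d_fake_patches + eps))
    # L1 term keeps the output close to the paired ground truth.
    l1 = np.mean(np.abs(fake - target))
    return adversarial + lam * l1
```

In the pix2pix design the L1 term captures low-frequency structure, while the patch-level adversarial term pushes the generator toward sharp local detail, which is why the two are combined rather than using either alone.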

Advantages of cGAN

- Controlled Output: Ability to guide the generator to produce specific outputs.

- Improved Accuracy: By leveraging additional information, cGANs often yield better results than unconditional (standard) GANs in context-specific tasks.

Key Features of WiSIA:

- Versatile Imaging Capabilities: WiSIA can simultaneously detect objects and humans, segment their boundaries, and identify them within the image plane.

- Use of Commercial Wi-Fi Devices: The system is built on COTS Wi-Fi devices, making it accessible and cost-effective.

- Generative Adversarial Network Enhancement: Incorporating a cGAN allows WiSIA to enhance the boundaries of different objects, improving imaging accuracy.

- Effective in Challenging Conditions: WiSIA outperforms state-of-the-art vision-based methods in dark and occluded scenarios.
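These notes do not detail how WiSIA's cGAN enhances boundaries. As a hypothetical illustration of what a per-pixel object-boundary target can look like (for example, to supervise or weight a boundary-aware loss), the sketch below derives a boundary map from a label mask by flagging every pixel whose 4-neighbours carry a different label. The function `boundary_map` is an illustrative assumption, not part of WiSIA.

```python
import numpy as np


def boundary_map(labels: np.ndarray) -> np.ndarray:
    """Mark pixels whose 4-neighbourhood contains a different label."""
    # Edge padding repeats border labels, so image borders are not boundaries.
    padded = np.pad(labels, 1, mode="edge")
    center = padded[1:-1, 1:-1]
    edge = np.zeros(labels.shape, dtype=bool)
    for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        # Shifted view of the padded mask, aligned with the original pixels.
        neighbour = padded[1 + dy : padded.shape[0] - 1 + dy,
                           1 + dx : padded.shape[1] - 1 + dx]
        edge |= neighbour != center
    return edge
```

Both sides of an interface are marked, so thin objects still produce a visible boundary band.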
