ARKit 4 introduces a brand-new Depth API, creating a new way to access the detailed depth information gathered by the LiDAR Scanner on iPhone 12 Pro, iPhone 12 Pro Max, and iPad Pro. Location Anchoring leverages the higher-resolution data in Apple Maps to place AR experiences at a specific point in the world in your iPhone and iPad apps. And face tracking is now supported on all devices with the Apple Neural Engine and a front-facing camera, so even more users can experience the joy of AR in photos and videos.

The M1 chip brings the Apple Neural Engine to the Mac, greatly accelerating machine learning (ML) tasks. Featuring Apple’s most advanced 16-core architecture capable of 11 trillion operations per second, the Neural Engine in M1 enables up to 15x faster machine learning performance.

The Neural Engine allows Apple to run neural networks and other machine learning workloads more energy-efficiently than on either the main CPU or the GPU.

The A14 integrates an Apple-designed four-core GPU with 30% faster graphics performance than the A12. It also includes dedicated neural network hardware, a 16-core Neural Engine that can perform 11 trillion operations per second.

Depth API

The advanced scene understanding capabilities built into the LiDAR Scanner allow this API to use per-pixel depth information about the surrounding environment. When combined with the 3D mesh data generated by Scene Geometry, this depth information makes virtual object occlusion even more realistic by enabling instant placement of virtual objects and blending them seamlessly with their physical surroundings. This can drive new capabilities within your apps, like taking more precise measurements and applying effects to a user’s environment.
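
As a minimal sketch of how an app might opt in to per-pixel depth, the snippet below requests the `sceneDepth` frame semantic and reads one value from the resulting depth map. It assumes an iOS 14 or later deployment target; the function names `makeDepthConfiguration` and `centerDepth` are hypothetical helpers, not part of ARKit.

```swift
import ARKit

/// Builds a world-tracking configuration that requests LiDAR scene depth,
/// or returns nil on hardware that cannot provide it.
/// (Hypothetical helper name, for illustration only.)
func makeDepthConfiguration() -> ARWorldTrackingConfiguration? {
    guard ARWorldTrackingConfiguration.supportsFrameSemantics(.sceneDepth) else {
        return nil
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.frameSemantics.insert(.sceneDepth)
    return configuration
}

/// Reads the depth value, in meters, at the center of the frame's depth map.
/// Returns nil when the frame carries no depth data or the pixel format
/// is not 32-bit float depth.
func centerDepth(in frame: ARFrame) -> Float32? {
    guard let depthMap = frame.sceneDepth?.depthMap,
          CVPixelBufferGetPixelFormatType(depthMap) == kCVPixelFormatType_DepthFloat32
    else { return nil }

    // The buffer must be locked before its memory is read on the CPU.
    CVPixelBufferLockBaseAddress(depthMap, .readOnly)
    defer { CVPixelBufferUnlockBaseAddress(depthMap, .readOnly) }

    guard let base = CVPixelBufferGetBaseAddress(depthMap) else { return nil }
    let width = CVPixelBufferGetWidth(depthMap)
    let height = CVPixelBufferGetHeight(depthMap)
    let bytesPerRow = CVPixelBufferGetBytesPerRow(depthMap)

    // Rows may be padded, so step by bytesPerRow rather than by width.
    let row = base.advanced(by: (height / 2) * bytesPerRow)
        .assumingMemoryBound(to: Float32.self)
    return row[width / 2]
}
```

A session delegate could call `centerDepth(in:)` from `session(_:didUpdate:)` with each new `ARFrame`. For heavier processing, the depth map would more likely be handed to Metal than read on the CPU.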

Location Anchors

Place AR experiences at specific places, such as throughout cities and alongside famous landmarks. Location Anchoring allows you to anchor your AR creations at specific latitude, longitude, and altitude coordinates. Users can move around virtual objects and see them from different perspectives, exactly as real objects are seen through a camera lens.
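
The sketch below shows one way to anchor content at a latitude, longitude, and altitude. It assumes iOS 14 or later and a device and region where geo tracking is available; `makeGeoAnchor` and `startGeoTracking` are hypothetical helper names.

```swift
import ARKit
import CoreLocation

/// Creates an anchor pinned to a geographic coordinate and an altitude
/// in meters. (Hypothetical helper name.)
func makeGeoAnchor(latitude: CLLocationDegrees,
                   longitude: CLLocationDegrees,
                   altitude: CLLocationDistance) -> ARGeoAnchor {
    let coordinate = CLLocationCoordinate2D(latitude: latitude, longitude: longitude)
    return ARGeoAnchor(coordinate: coordinate, altitude: altitude)
}

/// Starts geo tracking and adds the anchor, but only if the device supports
/// it and the user's current location has coverage.
func startGeoTracking(on session: ARSession, with anchor: ARGeoAnchor) {
    guard ARGeoTrackingConfiguration.isSupported else { return }

    ARGeoTrackingConfiguration.checkAvailability { available, error in
        guard available else {
            print("Geo tracking unavailable: \(error?.localizedDescription ?? "unknown reason")")
            return
        }
        // Hop to the main queue before touching the session.
        DispatchQueue.main.async {
            session.run(ARGeoTrackingConfiguration())
            session.add(anchor: anchor)
        }
    }
}
```

Checking availability first matters because geo tracking depends on map coverage for the user's location, not only on the hardware.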

Expanded Face Tracking Support

Support for Face Tracking extends to the front-facing camera on any device with the A12 Bionic chip and later, including iPhone SE, so even more users can delight in AR experiences using the front-facing camera. Track up to three faces at once using the TrueDepth camera to power front-facing camera experiences like Memoji and Snapchat.

Scene Geometry

Create a topological map of your space with labels identifying floors, walls, ceilings, windows, doors, and seats. This deep understanding of the real world unlocks object occlusion and real-world physics for virtual objects, and also gives you more information to power your AR workflows.
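
A minimal sketch of turning on the classified mesh follows, assuming a LiDAR-equipped device. The helper names `makeSceneGeometryConfiguration` and `label(for:)` are hypothetical. Note that ARKit's `ARMeshClassification` also defines a `table` case in addition to the surface types listed above.

```swift
import ARKit

/// Builds a configuration that reconstructs the scene mesh with per-face
/// classification, or returns nil on devices that cannot do so.
/// (Hypothetical helper name.)
func makeSceneGeometryConfiguration() -> ARWorldTrackingConfiguration? {
    guard ARWorldTrackingConfiguration.supportsSceneReconstruction(.meshWithClassification) else {
        return nil
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.sceneReconstruction = .meshWithClassification
    return configuration
}

/// Maps a mesh classification to a human-readable label.
func label(for classification: ARMeshClassification) -> String {
    switch classification {
    case .floor: return "floor"
    case .wall: return "wall"
    case .ceiling: return "ceiling"
    case .window: return "window"
    case .door: return "door"
    case .seat: return "seat"
    case .table: return "table"
    case .none: return "unclassified"
    @unknown default: return "unknown"
    }
}
```

Once the session runs with this configuration, the reconstructed geometry arrives as `ARMeshAnchor` instances through the usual anchor callbacks.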

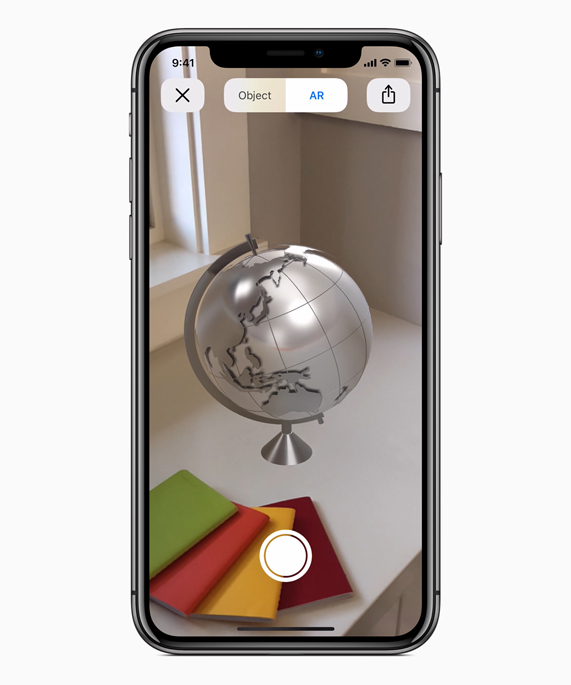

Instant AR

The LiDAR Scanner enables incredibly quick plane detection, allowing for the instant placement of AR objects in the real world without scanning. Instant AR placement is automatically enabled on iPhone 12 Pro, iPhone 12 Pro Max, and iPad Pro for all apps built with ARKit, without any code changes.

People Occlusion

AR content realistically passes behind and in front of people in the real world, making AR experiences more immersive while also enabling green screen-style effects in almost any environment. Depth estimation improves on iPhone 12, iPhone 12 Pro, and iPad Pro in all apps built with ARKit, without any code changes.
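
While the depth improvements arrive without code changes, people occlusion itself is something an app opts in to. A minimal sketch, with `enablePeopleOcclusion` as a hypothetical helper name:

```swift
import ARKit

/// Turns on depth-aware person segmentation when the device supports it.
/// Returns true if the semantic was enabled. (Hypothetical helper name.)
func enablePeopleOcclusion(on configuration: ARWorldTrackingConfiguration) -> Bool {
    guard ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) else {
        return false
    }
    configuration.frameSemantics.insert(.personSegmentationWithDepth)
    return true
}
```

On unsupported devices the function leaves the configuration untouched, so the same code path can run everywhere.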

Motion Capture

Capture the motion of a person in real time with a single camera. By understanding body position and movement as a series of joints and bones, you can use motion and poses as an input to the AR experience — placing people at the center of AR. Height estimation improves on iPhone 12, iPhone 12 Pro, and iPad Pro in all apps built with ARKit, without any code changes.
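
To illustrate how joints can be read as input, here is a sketch that runs body tracking and logs the head position in world space. The `BodyTracker` class is a hypothetical wrapper; the ARKit calls it uses are `ARBodyTrackingConfiguration`, `ARBodyAnchor`, and `ARSkeleton3D.modelTransform(for:)`.

```swift
import ARKit

/// Hypothetical wrapper that starts body tracking and reports the head joint.
final class BodyTracker: NSObject, ARSessionDelegate {

    /// Starts body tracking; returns false on unsupported devices.
    func start(on session: ARSession) -> Bool {
        guard ARBodyTrackingConfiguration.isSupported else { return false }
        session.delegate = self
        session.run(ARBodyTrackingConfiguration())
        return true
    }

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let body as ARBodyAnchor in anchors {
            // Joint transforms are relative to the body anchor (its root joint).
            guard let headInBody = body.skeleton.modelTransform(for: .head) else { continue }

            // Compose with the anchor transform to get world coordinates.
            let headInWorld = body.transform * headInBody
            let position = headInWorld.columns.3
            print("Head at x: \(position.x), y: \(position.y), z: \(position.z)")
        }
    }
}
```

Because `ARSession` holds its delegate weakly, the caller needs to keep the `BodyTracker` instance alive for as long as updates are wanted.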

Simultaneous Front and Back Camera

You can simultaneously use face and world tracking on the front and back cameras, opening up new possibilities. For example, users can interact with AR content in the back camera view using just their face.
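
One way this could look in code: enable user face tracking on a world-tracking configuration, then read a facial expression value from the face anchors that arrive alongside the world anchors. Both function names are hypothetical, and using `jawOpen` as the input is only an example.

```swift
import ARKit

/// Builds a back-camera world-tracking configuration that also tracks the
/// user's face with the front camera, where supported.
/// (Hypothetical helper name.)
func makeWorldConfigurationWithFaceTracking() -> ARWorldTrackingConfiguration? {
    guard ARWorldTrackingConfiguration.supportsUserFaceTracking else { return nil }
    let configuration = ARWorldTrackingConfiguration()
    configuration.userFaceTrackingEnabled = true
    return configuration
}

/// Returns how far the jaw is open (0.0 to 1.0) for the first face anchor
/// found in the list, or nil if the list contains no face anchor.
func jawOpenAmount(from anchors: [ARAnchor]) -> Float? {
    for case let face as ARFaceAnchor in anchors {
        return face.blendShapes[.jawOpen]?.floatValue
    }
    return nil
}
```

An app could feed the value from `jawOpenAmount(from:)` into the content shown in the back camera view, for example to trigger an animation when the user opens their mouth.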

Multiple Face Tracking

ARKit Face Tracking tracks up to three faces at once on all devices with the Apple Neural Engine and a front-facing camera to power AR experiences like Memoji and Snapchat.
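
A short sketch of requesting multiple faces follows. Rather than hard-coding three, it asks ARKit for the number the current device supports; `makeMultiFaceConfiguration` is a hypothetical helper name.

```swift
import ARKit

/// Builds a face-tracking configuration that tracks as many faces as the
/// current device supports, or returns nil if face tracking is unavailable.
/// (Hypothetical helper name.)
func makeMultiFaceConfiguration() -> ARFaceTrackingConfiguration? {
    guard ARFaceTrackingConfiguration.isSupported else { return nil }
    let configuration = ARFaceTrackingConfiguration()
    configuration.maximumNumberOfTrackedFaces =
        ARFaceTrackingConfiguration.supportedNumberOfTrackedFaces
    return configuration
}
```

Each tracked face then arrives as its own `ARFaceAnchor`.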

Collaborative Sessions

With live collaborative sessions between multiple people, you can build a collaborative world map, making it faster for you to develop AR experiences and for users to get into shared AR experiences like multiplayer games.
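
The following sketch covers the ARKit side of a collaborative session: enabling collaboration, serializing the data ARKit emits, and applying data received from a peer. The three function names are hypothetical, and the network transport (for example MultipeerConnectivity) is deliberately left out.

```swift
import ARKit

/// Builds a world-tracking configuration with collaboration switched on.
/// (Hypothetical helper name.)
func makeCollaborativeConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.isCollaborationEnabled = true
    return configuration
}

/// Serializes collaboration data so it can be sent to other participants.
/// Call this from the delegate method session(_:didOutputCollaborationData:).
func encode(_ data: ARSession.CollaborationData) throws -> Data {
    try NSKeyedArchiver.archivedData(withRootObject: data, requiringSecureCoding: true)
}

/// Decodes a payload received from a peer and merges it into the local session.
func apply(_ payload: Data, to session: ARSession) throws {
    if let data = try NSKeyedUnarchiver.unarchivedObject(
        ofClass: ARSession.CollaborationData.self, from: payload) {
        session.update(with: data)
    }
}
```

ARKit produces collaboration data continuously while the session runs, so every participant both sends and receives these payloads.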

Additional Improvements

Detect up to 100 images at a time and get an automatic estimate of the physical size of the object in the image. 3D object detection is more robust, as objects are better recognized in complex environments. And now, machine learning is used to detect planes in the environment even faster.
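
As a rough sketch of image detection with automatic size estimation, the snippet below loads reference images from an asset catalog group and enables scale estimation. The helper name and the group name passed to it are assumptions; substitute the AR resource group from your own asset catalog.

```swift
import ARKit

/// Builds a configuration that detects the reference images in the given
/// asset catalog group and estimates their physical size automatically.
/// Returns nil if the group cannot be found. (Hypothetical helper name.)
func makeImageDetectionConfiguration(groupName: String) -> ARWorldTrackingConfiguration? {
    guard let images = ARReferenceImage.referenceImages(inGroupNamed: groupName, bundle: nil) else {
        return nil
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.detectionImages = images
    configuration.automaticImageScaleEstimationEnabled = true
    return configuration
}
```

When an image is detected, its `ARImageAnchor` exposes `estimatedScaleFactor`, the ratio between the estimated physical size and the size declared in the asset catalog.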