The IPU is a massively parallel processor designed to accelerate machine intelligence workloads while keeping the data they work on inside the processor.

Graphcore’s “Intelligence Processing Unit” (IPU) aims to do for AI what the graphics processing unit (GPU) did for computing.

Graphcore’s first chip, the Colossus GC2, is a “16 nm massively parallel, mixed-precision floating point processor”. Two of these chips are packaged on a single PCI Express card, the Graphcore C2 IPU card, which is stated to perform the same role as a GPU alongside standard machine learning frameworks such as TensorFlow.

The device relies on scratchpad memory for its performance rather than traditional cache hierarchies.
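To illustrate what relying on a scratchpad means in practice, here is a minimal Python sketch of a toy model, not Graphcore’s Poplar API: each core owns a fixed-size local memory, and software must decide up front where every shard of a tensor lives, with no cache hierarchy to fall back on. The `Tile` class and `place_tensor` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Tile:
    """Toy model of one core with a fixed-size local scratchpad (bytes)."""
    capacity: int
    used: int = 0
    buffers: dict[str, int] = field(default_factory=dict)

    def free(self) -> int:
        return self.capacity - self.used


def place_tensor(tiles: list[Tile], name: str, total_bytes: int) -> dict[int, int]:
    """Split a tensor as evenly as possible across tiles and reserve each shard.

    Returns {tile_index: shard_bytes}. Raises MemoryError, without allocating
    anything, if any shard would not fit: in this toy model there is no cache
    or backing store to spill to.
    """
    base, extra = divmod(total_bytes, len(tiles))
    # The first `extra` tiles take one extra byte so the shards sum to total_bytes.
    shards = [base + (1 if i < extra else 0) for i in range(len(tiles))]

    # Check every shard first so a failure leaves all tiles unchanged.
    for i, (tile, shard) in enumerate(zip(tiles, shards)):
        if shard > tile.free():
            raise MemoryError(
                f"{name}: {shard} B does not fit on tile {i} ({tile.free()} B free)"
            )

    # Every shard fits: record the explicit placement on each tile.
    for i, (tile, shard) in enumerate(zip(tiles, shards)):
        tile.buffers[f"{name}[{i}]"] = shard
        tile.used += shard
    return dict(enumerate(shards))
```

For example, spreading a 3,002-byte tensor over four 1,000-byte tiles places 751, 751, 750 and 750 bytes; a further 2,000-byte tensor is then rejected, because each tile would need 500 free bytes and none has more than 250. A real toolchain makes these decisions at compile time with far more sophistication; the point here is only that placement is explicit rather than managed by hardware.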

Graphcore’s second-generation processor, the Colossus MK2 GC200, is built on TSMC’s 7 nm FinFET manufacturing process. The GC200 is an 823 mm² integrated circuit with 59 billion transistors, 1,472 computational cores and 900 MB of local memory.

Graphcore describes the GC200 as the world’s most complex processor and says its Poplar software makes it easy to use, so that innovators can focus on AI breakthroughs.
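The GC200 figures quoted above support some quick arithmetic. This Python sketch divides the local memory evenly across the cores and computes transistor density. It assumes that “900 Mbyte” means 900 × 10⁶ bytes and that memory is split equally between cores; neither detail is stated in the text.

```python
# GC200 figures as quoted in the text.
CORES = 1472
LOCAL_MEMORY_MB = 900      # assumption: decimal megabytes (10**6 bytes)
TRANSISTORS = 59e9
DIE_AREA_MM2 = 823


def per_core_memory_kb(total_mb: float, cores: int) -> float:
    """Average local memory per core in kilobytes (10**3 bytes), assuming an even split."""
    return total_mb * 1000 / cores


per_core = per_core_memory_kb(LOCAL_MEMORY_MB, CORES)
density = TRANSISTORS / DIE_AREA_MM2 / 1e6  # million transistors per mm^2
print(f"~{per_core:.0f} kB per core, ~{density:.1f} M transistors/mm^2")
```

Under these assumptions it prints `~611 kB per core, ~71.7 M transistors/mm^2`. If “Mbyte” is read as 2²⁰ bytes instead, the per-core figure rises to about 641 kB.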

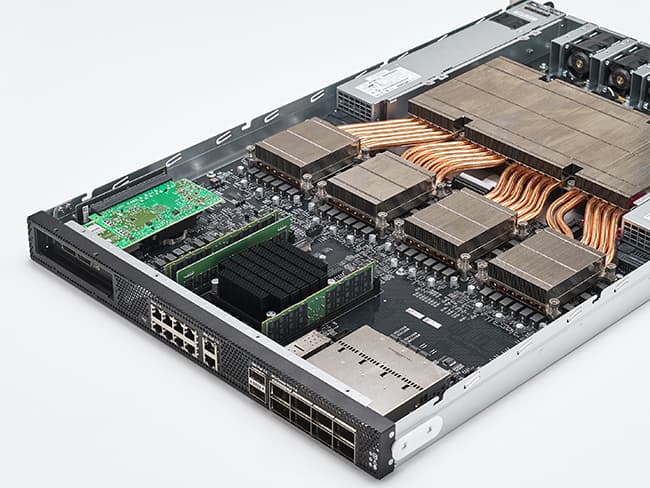

The IPU-Machine: IPU-M2000

The IPU-M2000 is Graphcore’s next-generation system built with the Colossus MK2 IPU. It packs 1 petaFLOPS of AI compute and up to 450 GB of Exchange-Memory™ into a slim 1U blade for the most demanding machine intelligence workloads.

The IPU-M2000 has a flexible, modular design, so you can start with one and scale to thousands. A single system can connect directly to an existing CPU server, up to eight IPU-M2000s can be connected together, and racks of 16 tightly interconnected IPU-M2000s form IPU-POD64 systems. These grow to supercomputing scale over the high-bandwidth, near-zero-latency IPU-Fabric™ interconnect architecture built into the box.
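The scaling options above can be turned into rough totals. The Python sketch below multiplies the per-machine figures quoted for the IPU-M2000 (1 petaFLOPS and up to 450 GB of Exchange-Memory) by the number of machines. It assumes peak figures add linearly, which gives an upper bound rather than achievable performance, and it uses four IPUs per IPU-M2000, a figure from Graphcore’s product specifications that this text does not state.

```python
# Per-machine figures quoted for the IPU-M2000.
M2000_PEAK_PFLOPS = 1.0
M2000_EXCHANGE_MEMORY_GB = 450
# Assumption: four Colossus MK2 IPUs per IPU-M2000 (not stated in the text above).
IPUS_PER_M2000 = 4


def cluster_totals(machines: int) -> dict[str, float]:
    """Best-case totals for `machines` IPU-M2000s, assuming linear scaling."""
    if machines < 1:
        raise ValueError("need at least one IPU-M2000")
    return {
        "ipus": machines * IPUS_PER_M2000,
        "peak_pflops": machines * M2000_PEAK_PFLOPS,
        "exchange_memory_tb": machines * M2000_EXCHANGE_MEMORY_GB / 1000,
    }


# One machine, eight connected machines, and a 16-machine IPU-POD64 rack.
for n in (1, 8, 16):
    print(n, cluster_totals(n))
```

The 16-machine case works out to 64 IPUs, 16 peak petaFLOPS and 7.2 TB of Exchange-Memory, which is where the “64” in IPU-POD64 comes from.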

The Graphcore IPU-POD64

IPU-POD64 is Graphcore’s solution for massive, disaggregated scale-out, taking high-performance machine intelligence compute to supercomputing scale. It builds on the IPU-M2000 and scales out to as many as 64,000 IPUs, which can work as one integral whole or be divided into independent partitions to handle multiple workloads and users.

An IPU-POD64 houses 16 IPU-M2000s, 64 IPUs in total, in a standard rack. IPU-PODs communicate with near-zero latency over Graphcore’s IPU-Fabric™ interconnect architecture, which is designed to eliminate communication bottlenecks and let thousands of IPUs run machine intelligence workloads as a single, cohesive, high-performance unit.

IPU-Server systems

Industry-standard OEM server systems for training and inference, based on Graphcore dual-IPU C2 PCIe cards with MK1 Colossus™ IPUs. Start with development and experimentation, then ramp up to full-scale production. Available on Microsoft Azure and directly from Dell, Inspur and other Graphcore partners.