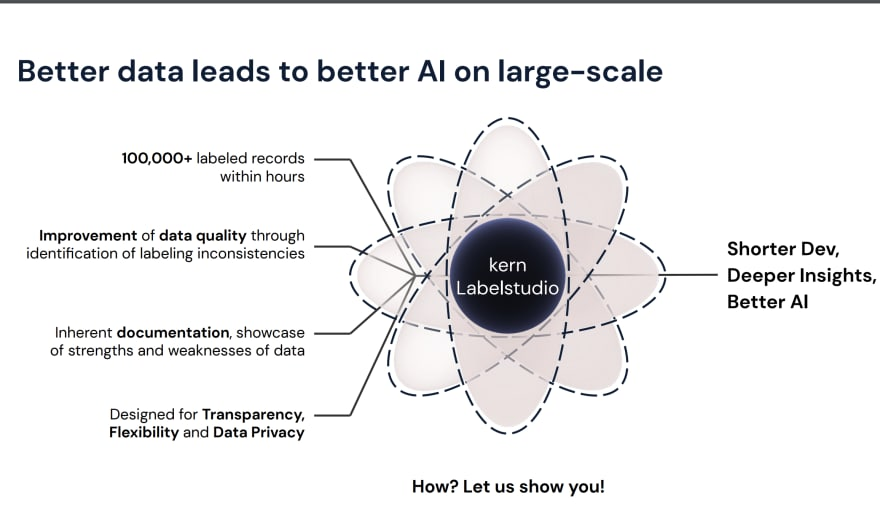

Kern AI is a German startup that has built a platform for NLP developers and data scientists. It lets them control the labeling process, automate and orchestrate adjacent tasks, and deal with low-quality data that comes their way.

Kern AI's libraries and tools aim to improve the data-centric AI lifecycle.

Kern AI is a data-centric platform for building natural language interfaces. It is also a no-code tool for machine learning, so that business units can do machine learning themselves.

Kern AI provides the tools to improve data quality, from fixing label errors to enriching data with metadata.

It maintains and publishes refinery, the data scientist’s open-source choice to scale, assess and maintain natural language data. It also maintains bricks, a collection of open-source, modular NLP enrichments.

The goal of Kern.ai is to narrow the gap between ideas and reality in NLP, ultimately enabling reliable, controllable and powerful natural language interfaces.

Kern AI offers services in the field of natural language processing that enable a range of interaction modes between humans and machines. They manage natural language products, ETL pipelines, and workflows.

Whether it’s analyzing customer behavior, weather forecasting, or finding new chemical compounds, machine learning has established itself as a versatile tool for recognizing patterns and deriving insights from data to automate processes and make business decisions.

Yet machine learning models can only be as good as the data they are trained on, and you need to systematically change or enhance datasets to improve model performance: this is the tenet of data-centric machine learning.

The goal in developing Refinery is to give companies control of their data and to help developers find the subsets of mislabeled data that degrade a machine learning model's performance.

Refinery uses various approaches to identify mislabeled data. At the most basic level, two people manually look through the data, and the places where they agree or disagree about the labels give a benchmark for human subjectivity. At a more sophisticated level, it uses different machine learning models, say some version of GPT together with an active learner from HuggingFace, to get labels from an ensemble of models and see where they agree or disagree. By cleverly setting filters, for example for records where models have high accuracy but low overlap in their labels, one can easily find bad, mislabeled data.
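The filter idea can be illustrated with a minimal sketch. It is not Refinery's implementation: the function name `find_suspect_records`, the source names, and the accuracy values are all hypothetical, and the `0.8` threshold is an arbitrary assumption. The sketch keeps only label sources (annotators or models) whose known accuracy is high, then flags records on which those trusted sources disagree.

```python
def find_suspect_records(predictions, source_accuracy, min_accuracy=0.8):
    """Return ids of records on which high-accuracy label sources disagree.

    predictions: {record_id: {source_name: label}}
    source_accuracy: {source_name: accuracy measured on reference data}
    """
    # Only trust sources whose measured accuracy clears the threshold.
    trusted = [name for name, acc in source_accuracy.items() if acc >= min_accuracy]

    suspects = []
    for record_id, labels_by_source in predictions.items():
        # Collect the distinct labels assigned by trusted sources.
        labels = {labels_by_source[s] for s in trusted if s in labels_by_source}
        # More than one distinct label means the trusted sources disagree.
        if len(labels) > 1:
            suspects.append(record_id)
    return suspects


# Hypothetical sources and labels, for illustration only.
source_accuracy = {"annotator_a": 0.93, "zero_shot_model": 0.88, "keyword_rule": 0.55}
predictions = {
    "rec-1": {"annotator_a": "positive", "zero_shot_model": "positive", "keyword_rule": "negative"},
    "rec-2": {"annotator_a": "positive", "zero_shot_model": "negative", "keyword_rule": "negative"},
    "rec-3": {"annotator_a": "negative", "zero_shot_model": "negative"},
}

suspects = find_suspect_records(predictions, source_accuracy)
print(suspects)  # ['rec-2']
```

Here `rec-1` is not flagged because the only dissenting source is the low-accuracy `keyword_rule`, while `rec-2` is flagged because two trusted sources give different labels.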

Kern.AI’s goal with Refinery is to spark the creativity of developers. One could, for example, not only perform labeling tasks but also attach structured metadata to unstructured data, for instance by determining the sentence complexity of a text corpus.
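As a rough illustration of turning unstructured text into structured metadata, the sketch below computes a few crude complexity indicators. The function `sentence_complexity` and its measures (average words per sentence, average word length) are assumptions chosen for brevity, not the metric used by Refinery or bricks.

```python
import re


def sentence_complexity(text):
    """Return simple, heuristic complexity metadata for a piece of text."""
    # Crude sentence split on runs of ., ! or ?; empty pieces are dropped.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Words are runs of letters and apostrophes.
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return {"sentences": 0, "avg_words_per_sentence": 0.0, "avg_word_length": 0.0}
    return {
        "sentences": len(sentences),
        "avg_words_per_sentence": len(words) / len(sentences),
        "avg_word_length": sum(len(w) for w in words) / len(words),
    }


meta = sentence_complexity("The order is late. We are sorry!")
print(meta["sentences"], meta["avg_words_per_sentence"])  # 2 3.5
```

The resulting dictionary could be stored next to each record as attributes, so that records can later be filtered or sorted by complexity.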

It supports embeddings, which represent inputs (words, images, and others) as vectors and thereby give structure to data. This is one of the major breakthroughs of deep learning: giving structure to unstructured data such as text.

By comparing how similar vectors are, for example by their overlap, one can find similar texts. Many real-world machine learning problems involve both supervised and unsupervised learning, for example when the labels are unknown. By using embeddings, one can identify topics, which can then be used as labels for a supervised learning process.
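A common way to compare embedding vectors is cosine similarity, which is used here as a stand-in for the "overlap" mentioned above. The sketch is a minimal illustration: the three-dimensional vectors are made up by hand, whereas real embeddings would come from a model and have hundreds of dimensions, and the helper `most_similar` is hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Similarity is undefined for zero vectors; treat as 0.
    return dot / (norm_a * norm_b)


def most_similar(query_id, embeddings):
    """Return (id, score) of the entry closest to the query, excluding itself."""
    query = embeddings[query_id]
    scored = [
        (other_id, cosine_similarity(query, vector))
        for other_id, vector in embeddings.items()
        if other_id != query_id
    ]
    return max(scored, key=lambda pair: pair[1])


# Hand-made toy vectors, for illustration only.
embeddings = {
    "refund request": [0.9, 0.1, 0.0],
    "money back please": [0.8, 0.2, 0.1],
    "weather tomorrow": [0.0, 0.1, 0.9],
}

best_id, score = most_similar("refund request", embeddings)
print(best_id)  # money back please
```

Texts whose vectors score high against each other can be grouped into a topic, and the topic name can then serve as a candidate label for supervised training.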

When it comes to natural language processing, everyone is currently hyped about large language models like GPT, which has been around for a while but only recently got incredibly good.

HuggingFace’s approach is to make models smaller again. Depending on the use case, for instance classification tasks or a simple yes/no vote, models can become smaller: they build on pre-trained foundation models and are then fine-tuned to perform a very specific task with very high accuracy, which also requires really high-quality data.

Last but not least, one missing piece is the integration of external knowledge sources. Machine learning models won’t replace databases, but they can give context. For example, you won’t ask ChatGPT about the order status of your purchase online, but if attached to a database with the order status, it can retrieve that knowledge and give context, such as an explanation of why the order is delayed.
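The order-status example can be sketched as a lookup step that runs before any language model is involved. Everything here is hypothetical: the `orders` table, its columns, and the `build_prompt` helper are assumptions for illustration, and the sketch stops at building the prompt text rather than calling a model.

```python
import sqlite3


def build_prompt(conn, order_id, question):
    """Fetch the order status from the database and prepend it as context."""
    row = conn.execute(
        "SELECT status, note FROM orders WHERE id = ?", (order_id,)
    ).fetchone()
    if row is None:
        context = "No order with this id was found."
    else:
        status, note = row
        context = f"Order {order_id} has status '{status}'. Note: {note}"
    # The model receives the retrieved facts together with the user question.
    return f"Context: {context}\nQuestion: {question}"


# In-memory database with one made-up order, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, note TEXT)")
conn.execute("INSERT INTO orders VALUES (?, ?, ?)", (42, "delayed", "carrier strike"))

prompt = build_prompt(conn, 42, "Why is my order late?")
print(prompt)
```

The database stays the source of truth for the status, while the language model is only asked to phrase an explanation from the context it is given.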

refinery: the heart of the platform

This is the flagship of our NLP stack. refinery is both the database and the application logic editor; it allows you to scale, assess and maintain your data. It automates data cleaning and labeling, and shows you where improvements can be made. It also lets you work easily with in-house or external annotators, and leverages the power of large language models to help you with your data.

bricks: the fuel of the platform

Our overall platform runs on plenty of natural language processing automations, which can be defined by the user. The use cases our users face pose different challenges and require different automations. This is why we have implemented bricks: a collection of open-source and modular automations that can be stacked together, enabling users to customize the platform to their use cases with ease.
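The idea of stacking modular automations can be pictured as composing small functions that each add one piece of information to a record. This is a conceptual sketch only and does not use the actual bricks API: `lowercase`, `add_word_count`, `flag_question`, and `stack` are hypothetical names.

```python
def lowercase(record):
    """Normalize the text to lower case."""
    return {**record, "text": record["text"].lower()}


def add_word_count(record):
    """Attach the number of whitespace-separated words."""
    return {**record, "word_count": len(record["text"].split())}


def flag_question(record):
    """Mark records whose text ends with a question mark."""
    return {**record, "is_question": record["text"].rstrip().endswith("?")}


def stack(*steps):
    """Combine several record-to-record steps into one callable."""
    def run(record):
        for step in steps:
            record = step(record)
        return record
    return run


enrich = stack(lowercase, add_word_count, flag_question)
result = enrich({"text": "Where is my ORDER?"})
print(result)
# {'text': 'where is my order?', 'word_count': 4, 'is_question': True}
```

Because every step takes a record and returns a record, steps can be reordered, removed, or swapped per use case without touching the others.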

gates: enabling real-time processing

gates is simply the add-on to refinery that allows it to process real-time data streams. With gates, you can use refinery to make predictions on data immediately, and use the results to make operational decisions.

workflow: the orchestrator of the platform

workflow is the glue of the platform, allowing you to define ETL-like pipelines that can understand natural language. For instance, you can use workflow to grab data from a third-party API, put it through refinery/gates, and then use the results in any further step of the pipeline.