Microsoft AI that can simulate your voice from just 3 seconds of audio

Microsoft has developed VALL-E, an AI voice simulator capable of accurately imitating a person’s voice after listening to them speak for just three seconds.

The VALL-E language model was trained on 60,000 hours of English speech from more than 7,000 speakers in order to synthesize “high-quality personalised speech” from any unseen speaker.

Once the artificial intelligence system has a person’s voice recording, it is able to make it sound like that person is saying anything. It is even able to imitate the original speaker’s emotional tone and acoustic environment.

https://arxiv.org/pdf/2301.02111.pdf

Microsoft trained VALL-E’s speech-synthesis capabilities on an audio library, assembled by Meta, called LibriLight. It contains 60,000 hours of English language speech from more than 7,000 speakers, mostly pulled from LibriVox public domain audiobooks. For VALL-E to generate a good result, the voice in the three-second sample must closely match a voice in the training data.

On the VALL-E example website, Microsoft provides dozens of audio examples of the AI model in action. Among the samples, the “Speaker Prompt” is the three-second audio provided to VALL-E that it must imitate. The “Ground Truth” is a pre-existing recording of that same speaker saying a particular phrase for comparison purposes (sort of like the “control” in the experiment). The “Baseline” is an example of synthesis provided by a conventional text-to-speech synthesis method, and the “VALL-E” sample is the output from the VALL-E model.

“Experiment results show that VALL-E significantly outperforms the state-of-the-art zero-shot text to speech synthesis (TTS) system in terms of speech naturalness and speaker similarity,” a paper describing the system stated.

“In addition, we find VALL-E could preserve the speaker’s emotion and acoustic environment of the acoustic prompt in synthesis.”

Potential applications include producing entire audiobooks in an author’s voice from just a sample recording, adding natural-sounding voiceovers to videos, and filling in speech for a film actor if the original recording was corrupted.

As with other deepfake technology that imitates a person’s visual likeness in videos, there is the potential for misuse.

The VALL-E software used to generate the fake speech is currently not available for public use, with Microsoft citing “potential risks in misuse of the model, such as spoofing voice identification or impersonating a specific speaker”.

Microsoft said it would also abide by its Responsible AI Principles as it continues to develop VALL-E, as well as consider possible ways to detect synthesized speech in order to mitigate such risks.

Microsoft trained VALL-E using voice recordings in the public domain, mostly from LibriVox audiobooks, while the speakers who were imitated took part in the experiments willingly.

“When the model is generalised to unseen speakers, relevant components should be accompanied by speech editing models, including the protocol to ensure that the speaker agrees to execute the modification and the system to detect the edited speech,” Microsoft researchers said in an ethics statement.

To generate the demo samples, the researchers fed only the three-second “Speaker Prompt” sample and a text string (what they wanted the voice to say) into VALL-E, so the fairest comparison is between the “Ground Truth” sample and the “VALL-E” sample. In some cases, the two are very close. Some VALL-E results still sound computer-generated, but others could potentially be mistaken for human speech, which is the goal of the model.

In addition to preserving a speaker’s vocal timbre and emotional tone, VALL-E can also imitate the “acoustic environment” of the sample audio. For example, if the sample came from a telephone call, the synthesized output will simulate the acoustic and frequency properties of a telephone call (that’s a fancy way of saying it will sound like a telephone call, too). And Microsoft’s samples (in the “Synthesis of Diversity” section) demonstrate that VALL-E can generate variations in voice tone by changing the random seed used in the generation process.
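Why a random seed changes the output can be shown with a toy sampler. This is not VALL-E’s code, which Microsoft has not released; it is a sketch that assumes, for illustration only, that generation picks each next discrete audio code by random sampling from a probability distribution. The three-code vocabulary, the probability table and the `sample_codes` function are all hypothetical.

```python
import random

# HYPOTHETICAL toy "model": for each previous code, the probabilities of the
# next code over a three-code vocabulary. A real model would compute these
# from the text and the acoustic prompt; these numbers are made up.
TOY_NEXT_CODE_PROBS = {
    0: [0.6, 0.3, 0.1],
    1: [0.2, 0.5, 0.3],
    2: [0.1, 0.2, 0.7],
}


def sample_codes(seed: int, length: int = 8, start: int = 0) -> list[int]:
    """Sample `length` codes one at a time, with all randomness driven by `seed`."""
    rng = random.Random(seed)  # a private generator, so results depend only on seed
    codes = [start]
    while len(codes) < length:
        probs = TOY_NEXT_CODE_PROBS[codes[-1]]
        # Draw the next code in proportion to its probability.
        next_code = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        codes.append(next_code)
    return codes


if __name__ == "__main__":
    for seed in (1, 2, 3):
        print(seed, sample_codes(seed))
```

Rerunning with the same seed reproduces the same sequence, while different seeds usually produce different ones, which is the same kind of variation the “Synthesis of Diversity” samples illustrate at the level of real speech.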

Perhaps owing to VALL-E’s ability to potentially fuel mischief and deception, Microsoft has not provided VALL-E code for others to experiment with, so we could not test VALL-E’s capabilities. The researchers seem aware of the potential social harm that this technology could bring. In the paper’s conclusion, they write:

“Since VALL-E could synthesize speech that maintains speaker identity, it may carry potential risks in misuse of the model, such as spoofing voice identification or impersonating a specific speaker. To mitigate such risks, it is possible to build a detection model to discriminate whether an audio clip was synthesized by VALL-E. We will also put Microsoft AI Principles into practice when further developing the models.”

The experiments are detailed in a paper posted to arXiv, the preprint server operated by Cornell University, and VALL-E produced favorable results in them.

https://arxiv.org/abs/2301.02111

Neural Codec Language Models are Zero-Shot Text to Speech Synthesizers

Chengyi Wang, Sanyuan Chen, Yu Wu, Ziqiang Zhang, Long Zhou, Shujie Liu, Zhuo Chen, Yanqing Liu, Huaming Wang, Jinyu Li, Lei He, Sheng Zhao, Furu Wei

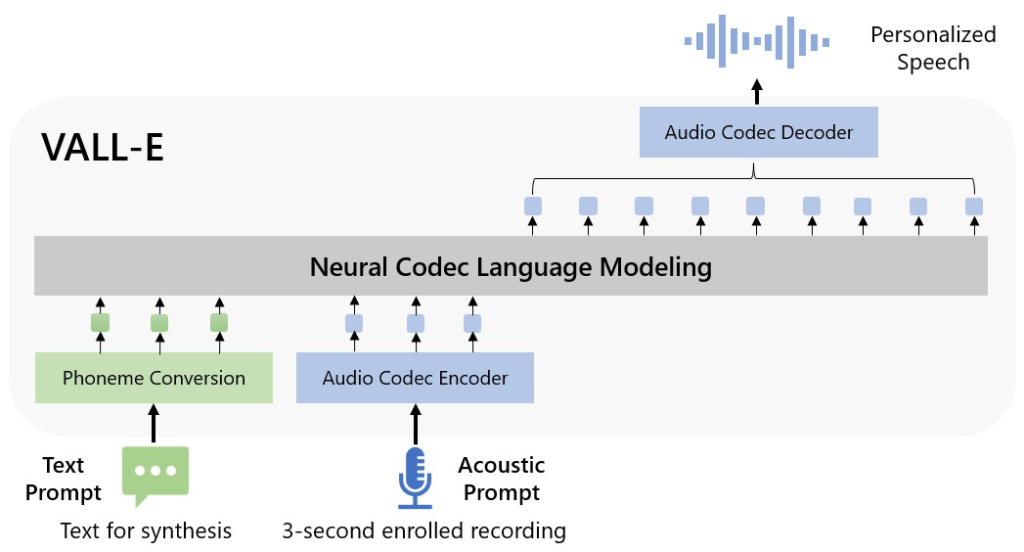

We introduce a language modeling approach for text to speech synthesis (TTS). Specifically, we train a neural codec language model (called Vall-E) using discrete codes derived from an off-the-shelf neural audio codec model, and regard TTS as a conditional language modeling task rather than continuous signal regression as in previous work. During the pre-training stage, we scale up the TTS training data to 60K hours of English speech which is hundreds of times larger than existing systems. Vall-E exhibits emergent in-context learning capabilities and can be used to synthesize high-quality personalized speech with only a 3-second enrolled recording of an unseen speaker as an acoustic prompt. Experiment results show that Vall-E significantly outperforms the state-of-the-art zero-shot TTS system in terms of speech naturalness and speaker similarity. In addition, we find Vall-E could preserve the speaker’s emotion and acoustic environment of the acoustic prompt in synthesis. Demos of our work are available online.
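The abstract’s central idea, treating TTS as conditional language modeling over discrete codec codes, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Microsoft’s implementation: `synthesize_codes`, the `next_code` callback and `toy_next_code` are hypothetical names, the toy model ignores the text and simply continues a repeating pattern in the prompt, and the codec encoder and decoder that convert between audio and codes are omitted.

```python
from typing import Callable, Sequence

# HYPOTHETICAL interface: a trained model that, given the text tokens and all
# acoustic codes so far, returns the next discrete acoustic code.
NextCodeFn = Callable[[Sequence[int], Sequence[int]], int]


def synthesize_codes(
    text_tokens: Sequence[int],
    prompt_codes: Sequence[int],
    next_code: NextCodeFn,
    num_new_codes: int,
) -> list[int]:
    """Treat TTS as conditional language modeling over discrete codes.

    Generation starts from the speaker prompt's codes and extends them one
    code at a time, each step conditioned on the text and everything so far.
    """
    codes = list(prompt_codes)  # the enrolled speaker's codes act as a prefix
    for _ in range(num_new_codes):
        codes.append(next_code(text_tokens, codes))
    # Only the continuation is new speech; a codec decoder (not shown) would
    # turn these codes back into a waveform.
    return codes[len(prompt_codes):]


def toy_next_code(text_tokens: Sequence[int], codes: Sequence[int]) -> int:
    """Toy model that ignores the text and repeats the last 3-code pattern."""
    return codes[-3]


if __name__ == "__main__":
    print(synthesize_codes([10, 11], [5, 9, 2], toy_next_code, 6))
```

Run as a script, it prints `[5, 9, 2, 5, 9, 2]`: the toy model carries the prompt’s pattern forward, a crude analogue of how a prompt lets the model continue in the enrolled speaker’s “style”. In a real system, `next_code` would be a trained network whose prediction also depends on the text.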