Researchers at University College London have developed software that lets users control a computer using voice, facial expressions, hand gestures, eye movements, and larger body motions.

The software, called MotionInput, was developed to enable touchless computing for a variety of purposes, from making computers easier to use for people with disabilities to helping professionals whose hands are full, such as surgeons.

The software, now in its third version, is available to download free of charge for non-commercial purposes. It currently supports only Windows, though there are plans to extend support to Linux, macOS, and Android.

MotionInput can be customized to translate a variety of movements and vocal commands into mouse, keyboard and joystick signals. This means users can use anything from their nose to their hands to browse the internet, compose a document, or play a game.
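To illustrate what translating a movement into a joystick signal can involve, here is a minimal Python sketch that turns a head-tilt angle into a signed joystick axis value with a dead zone. It is not MotionInput's code. The function name `tilt_to_axis`, the 5° dead zone and the 30° saturation angle are assumptions chosen for illustration, and the angle is assumed to come from a separate head-pose estimator.

```python
AXIS_MAX = 32767  # XInput thumbstick axes span -32768..32767


def tilt_to_axis(angle_deg: float, dead_zone_deg: float = 5.0, max_deg: float = 30.0) -> int:
    """Map a head-tilt angle in degrees to a signed joystick axis value.

    Hypothetical helper: angles within the dead zone return 0, and angles at or
    beyond max_deg saturate at +/-AXIS_MAX.
    """
    magnitude = abs(angle_deg)
    if magnitude <= dead_zone_deg:
        return 0
    # Rescale so the output rises from 0 at the dead-zone edge to 1.0 at max_deg.
    fraction = min((magnitude - dead_zone_deg) / (max_deg - dead_zone_deg), 1.0)
    # Use +/-32767 (not -32768) so both directions have the same reach.
    value = round(fraction * AXIS_MAX)
    return value if angle_deg > 0 else -value
```

The dead zone stops small, involuntary head movements from drifting the stick. A value like this could then be passed to a virtual gamepad driver (the project lists ViGEmBus and vgamepad among its dependencies), but that wiring is not shown in this sketch.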

MotionInput v3

This software is still in development. It has been made available for community and feedback purposes and is free for non-commercial use. MotionInput is a gesture- and speech-based recognition layer for interacting with operating systems, applications and games via a webcam.

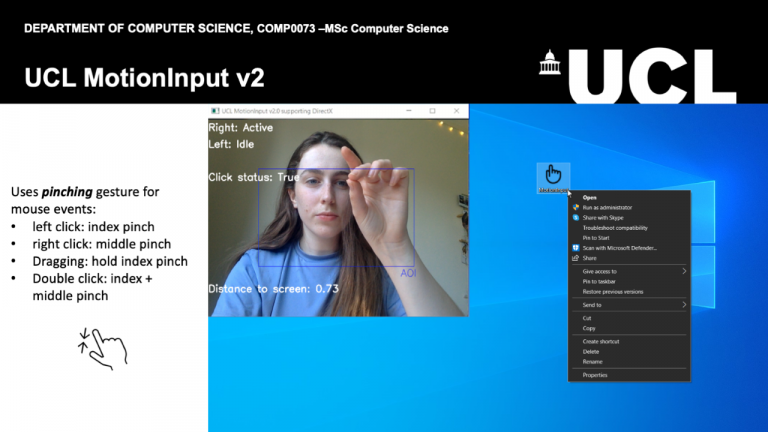

Recent advances in gesture recognition technology, computer vision and machine learning open up new opportunities for touchless computing interactions. UCL’s MotionInput supporting DirectX v2.0 is the second iteration of our Windows-based library. It uses several open-source and federated on-device machine learning models, so recognizing users is privacy-safe. It captures and analyses interactions and converts them into mouse and keyboard signals that the operating system can use in its native user interface. This enables full use of a user interface through hand gestures, body poses, head movement and eye tracking, with only a standard existing webcam.
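As an illustration of the "convert interactions into mouse signals" step, the following minimal Python sketch maps one normalized landmark position to a screen pixel and smooths it with an exponential moving average. It assumes, as MediaPipe does, that landmark x/y are normalized to the image width and height (0.0 to 1.0), and that the camera frame is not already mirrored. The `CursorMapper` class and its default smoothing factor are hypothetical and are not MotionInput's implementation.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class CursorMapper:
    """Hypothetical helper: map a normalized landmark position to screen pixels."""

    screen_w: int
    screen_h: int
    alpha: float = 0.5   # smoothing factor: 1.0 follows the hand exactly, smaller is smoother
    mirror: bool = True  # flip x so moving the hand to the user's right moves the cursor right
    _x: Optional[float] = field(default=None, init=False, repr=False)
    _y: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, nx: float, ny: float) -> Tuple[int, int]:
        # Clamp, since tracked landmarks can fall slightly outside the frame.
        nx = min(max(nx, 0.0), 1.0)
        ny = min(max(ny, 0.0), 1.0)
        if self.mirror:
            nx = 1.0 - nx
        target_x = nx * (self.screen_w - 1)
        target_y = ny * (self.screen_h - 1)
        if self._x is None or self._y is None:
            # First frame: jump straight to the target.
            self._x, self._y = target_x, target_y
        else:
            # Move a fraction alpha of the way toward the new target.
            self._x += self.alpha * (target_x - self._x)
            self._y += self.alpha * (target_y - self._y)
        return round(self._x), round(self._y)
```

Each returned (x, y) pair could then be applied with, for example, pynput's `mouse.Controller().position` setter. Lower `alpha` values reduce jitter at the cost of some lag.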

Touchless technologies allow users to interact with and control computer interfaces without any physical input, using gestures and voice commands instead. Within this field, gesture recognition technologies interpret human movements, gestures and behaviours through computer vision and machine learning. However, these gesture control technologies have traditionally relied on dedicated depth cameras such as the Kinect and/or highly closed development platforms such as EyeToy. As a result, they remain largely unexplored for general public use outside the video gaming industry.

MotionInput takes a different approach, using a webcam and open-source machine learning libraries. It classifies human activity and relays it as quickly as possible as input to your existing software, including your browser, games and applications.
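One common way to turn a classified hand pose into a discrete input such as a click is to measure the gap between the thumb tip and the index fingertip. The sketch below does this with hysteresis: separate press and release thresholds stop the click from flickering when the fingers hover near a single threshold. This is an illustrative technique, not MotionInput's documented classifier. The landmark indices follow MediaPipe Hands numbering (0 wrist, 4 thumb tip, 8 index fingertip, 9 middle-finger MCP joint). The class name and the 0.25/0.35 ratios are assumptions.

```python
import math
from typing import Optional, Sequence, Tuple

# Landmark indices in MediaPipe Hands' 21-point hand model.
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9

Point = Tuple[float, float]


class PinchDetector:
    """Hypothetical pinch-to-click detector with hysteresis."""

    def __init__(self, press_ratio: float = 0.25, release_ratio: float = 0.35):
        if press_ratio >= release_ratio:
            raise ValueError("press_ratio must be smaller than release_ratio")
        self.press_ratio = press_ratio
        self.release_ratio = release_ratio
        self.pinched = False

    def update(self, landmarks: Sequence[Point]) -> Optional[str]:
        """Return "press", "release" or None for one frame of hand landmarks."""
        # Normalize by palm length so the thresholds hold at any distance from the camera.
        palm = math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])
        if palm == 0:
            return None
        ratio = math.dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP]) / palm
        if not self.pinched and ratio < self.press_ratio:
            self.pinched = True
            return "press"
        if self.pinched and ratio > self.release_ratio:
            self.pinched = False
            return "release"
        return None
```

In this sketch, a "press" could be mapped to holding the left mouse button and a "release" to letting it go. That pairing gives both clicking and dragging from a single gesture.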

MotionInput supporting DirectX has the potential to transform how we navigate computer user interfaces. For example, we can:

play our existing computer games through exercise

create new art and perform music through subtle movements, including depth conversion

keep a sterile environment when using hospital systems, with responsive control of highly detailed user interfaces

interact with control panels in industry where safety is important

For accessibility purposes, this technology will allow for independent hands-free navigation of computer interfaces.

Coupled with advances in computer vision, we believe that the large number of people who already own webcams could bring gesture recognition software into the mainstream in this new era of touchless computing.

Some of the speech commands currently available in English:

Say “click”, “double click” and “right click” for those mouse buttons.

In any editable text field, including in Word, Outlook and Teams, say “transcribe” and “stop transcribe” to start and stop transcription. Speak in short English sentences and pause after each one, and the text should appear.

In any browser or Office app, you can say “page up” and “page down”. “Cut”, “copy”, “paste”, “left arrow” and “right arrow” work as well.

In PowerPoint, you can say “next”, “previous”, “show fullscreen” and “escape”.

“Windows run” opens the Run dialog box (Windows key+R).

With the June 2022 microbuild releases (3.01 and later), you can say “hold left” to press and hold the left mouse button and drag in a direction. Say “release left” to let go.

“Minimize” minimizes the window in focus.

“Maximize” maximizes the window in focus.

“Maximize left” or “maximize right” resizes the window in focus to fill the left or right half of the screen.

“Files” opens a file explorer window.

“Start menu” opens the Windows Start menu; saying it a second time closes the menu.

“Screenshot” is another useful command; try it together with “paste” to paste the capture into MS Paint.
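The speech commands above amount to a lookup from recognized phrases to keyboard or mouse actions. A little state is also needed for “hold left”/“release left” and for transcription mode. Below is a minimal Python sketch of such a dispatcher. It assumes a speech recognizer (the project lists vosk-api) already delivers each utterance as text. The class name, the action tuples and the key combinations are illustrative assumptions. The shortcuts are standard Windows ones (for example, Windows key+E for File Explorer and Windows key+Left/Right to snap a window to half the screen), not necessarily what MotionInput sends.

```python
from typing import Optional, Tuple

# Hypothetical phrase -> key-combination table using standard Windows shortcuts.
KEY_COMMANDS = {
    "cut": ("ctrl", "x"),
    "copy": ("ctrl", "c"),
    "paste": ("ctrl", "v"),
    "page up": ("page_up",),
    "page down": ("page_down",),
    "left arrow": ("left",),
    "right arrow": ("right",),
    "escape": ("esc",),
    "windows run": ("win", "r"),
    "files": ("win", "e"),
    "start menu": ("win",),  # the Windows key toggles the Start menu open/closed
    "maximize": ("win", "up"),
    "maximize left": ("win", "left"),
    "maximize right": ("win", "right"),
}

# Hypothetical phrase -> (mouse action, button, click count) table.
MOUSE_COMMANDS = {
    "click": ("click", "left", 1),
    "double click": ("click", "left", 2),
    "right click": ("click", "right", 1),
}


class SpeechCommandDispatcher:
    """Turn recognized utterances into abstract input actions (illustrative only)."""

    def __init__(self) -> None:
        self.left_held = False
        self.transcribing = False

    def handle(self, transcript: str) -> Optional[Tuple]:
        # Normalize case and whitespace so "Windows  Run" matches "windows run".
        phrase = " ".join(transcript.lower().split())

        # In transcription mode, everything except the stop phrase is typed as text.
        if self.transcribing:
            if phrase == "stop transcribe":
                self.transcribing = False
                return ("mode", "transcribe", False)
            return ("type", transcript.strip())
        if phrase == "transcribe":
            self.transcribing = True
            return ("mode", "transcribe", True)

        # "hold left"/"release left" press and release the left button for dragging.
        if phrase == "hold left" and not self.left_held:
            self.left_held = True
            return ("mouse", "press", "left")
        if phrase == "release left" and self.left_held:
            self.left_held = False
            return ("mouse", "release", "left")

        if phrase in MOUSE_COMMANDS:
            return ("mouse",) + MOUSE_COMMANDS[phrase]
        if phrase in KEY_COMMANDS:
            return ("keys",) + KEY_COMMANDS[phrase]
        return None  # unrecognized phrase: do nothing
```

Each returned tuple could then be executed with an input-injection library such as pynput or pydirectinput, both of which appear in MotionInput's dependency list. Keeping recognition separate from execution makes the phrase tables easy to extend.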

MotionInput uses the following open-source libraries:

MediaPipe

dlib C++

OpenVINO

pynput

pydirectinput

pywin32 API

OpenCV-python

numpy

ViGEmBus

vosk-api

tesseract

PyImageSearch

Nuitka

python-sounddevice

vgamepad

scipy

pandas

https://students.cs.ucl.ac.uk/software/MotionInput/v2/MotionInput.html

Credit: https://www.ucl.ac.uk/